Gradient

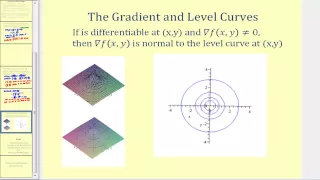

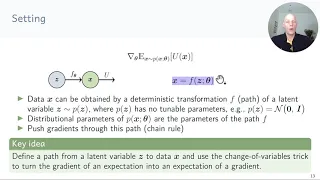

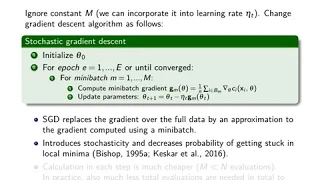

In vector calculus, the gradient of a scalar-valued differentiable function $f$ of several variables is the vector field (or vector-valued function) $\nabla f$ whose value at a point $p$ gives the direction and rate of fastest increase of $f$. If the gradient of a function is non-zero at a point $p$, the direction of the gradient is the direction in which the function increases most quickly from $p$, and the magnitude of the gradient is the rate of increase in that direction, which is the greatest absolute directional derivative. A point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to maximize a function by gradient ascent.

In coordinate-free terms, the gradient of a function $f(\mathbf{r})$ may be defined by

$$df = \nabla f \cdot d\mathbf{r},$$

where $df$ is the total infinitesimal change in $f$ for an infinitesimal displacement $d\mathbf{r}$. For a displacement of fixed length, $df$ is maximal when $d\mathbf{r}$ is in the direction of the gradient $\nabla f$. The nabla symbol $\nabla$, written as an upside-down triangle and pronounced "del", denotes the vector differential operator.

When a coordinate system is used in which the basis vectors are not functions of position, the gradient is given by the vector whose components are the partial derivatives of $f$ at $p$. That is, for $f \colon \mathbb{R}^n \to \mathbb{R}$, its gradient $\nabla f \colon \mathbb{R}^n \to \mathbb{R}^n$ is defined at the point $p = (x_1, \ldots, x_n)$ in $n$-dimensional space as the vector

$$\nabla f(p) = \left( \frac{\partial f}{\partial x_1}(p), \ldots, \frac{\partial f}{\partial x_n}(p) \right)^{\mathsf{T}}.$$

The gradient is dual to the total derivative $df$: the value of the gradient at a point is a tangent vector (a vector at each point), while the value of the derivative at a point is a cotangent vector (a linear functional on vectors). They are related in that the dot product of the gradient of $f$ at a point $p$ with another tangent vector $\mathbf{v}$ equals the directional derivative of $f$ at $p$ along $\mathbf{v}$; that is, $\nabla f(p) \cdot \mathbf{v} = \frac{\partial f}{\partial \mathbf{v}}(p) = df_p(\mathbf{v})$. The gradient admits multiple generalizations to more general functions on manifolds. (Wikipedia).
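The relationship between the gradient, its partial-derivative components, and the directional derivative can be checked numerically. The following is a minimal sketch, not a definitive implementation: the example function `f(x, y) = x**2 + 3*x*y`, the point `(1, 2)`, the step size `h`, and the helper names `numerical_gradient` and `directional_derivative` are all assumptions made for illustration. It estimates the gradient by central differences, compares its dot product with a unit vector against the directional derivative along that vector, and checks that no sampled unit direction gives a larger rate of increase than the gradient direction.

```python
import math


def f(x, y):
    # Hypothetical example function, chosen only for illustration.
    return x**2 + 3.0 * x * y


def numerical_gradient(func, p, h=1e-6):
    """Central-difference estimate of the partial derivatives of func at p."""
    grad = []
    for i in range(len(p)):
        forward = list(p)
        backward = list(p)
        forward[i] += h
        backward[i] -= h
        grad.append((func(*forward) - func(*backward)) / (2.0 * h))
    return grad


def directional_derivative(func, p, v, h=1e-6):
    """Central-difference estimate of the derivative of func at p along v."""
    forward = [pi + h * vi for pi, vi in zip(p, v)]
    backward = [pi - h * vi for pi, vi in zip(p, v)]
    return (func(*forward) - func(*backward)) / (2.0 * h)


p = (1.0, 2.0)

# Analytically, the gradient is (2x + 3y, 3x), which is (8, 3) at p.
grad = numerical_gradient(f, p)

# Dot product of the gradient with a unit vector v ...
v = (0.6, 0.8)
dot = sum(g * vi for g, vi in zip(grad, v))
# ... should agree with the directional derivative along v.
dd = directional_derivative(f, p, v)

# The rate of increase along the normalized gradient equals its magnitude.
norm = math.hypot(*grad)
unit_grad = [g / norm for g in grad]
steepest_rate = directional_derivative(f, p, unit_grad)

# Sample unit directions around the circle; none should beat the gradient.
angles = [2.0 * math.pi * k / 360 for k in range(360)]
best_sampled = max(
    directional_derivative(f, p, (math.cos(t), math.sin(t))) for t in angles
)

print("gradient estimate:", grad)
print("grad . v:", dot, " directional derivative along v:", dd)
print("steepest rate:", steepest_rate, " |grad|:", norm)
print("best sampled direction rate:", best_sampled)
```

Finite differences are used here only to keep the sketch dependency-free; an automatic-differentiation or symbolic tool would serve equally well for the same check.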