Word embeddings are one of the coolest things you can do with Machine Learning right now. Try the web app: https://embeddings.macheads101.com Word2vec paper: https://arxiv.org/abs/1301.3781 GloVe paper: https://nlp.stanford.edu/pubs/glove.pdf GloVe webpage: https://nlp.stanford.edu/proje

From playlist Machine Learning

Graph Neural Networks, Session 6: DeepWalk and Node2Vec

What are Node Embeddings Overview of DeepWalk Overview of Node2vec

From playlist Graph Neural Networks (Hands-on)

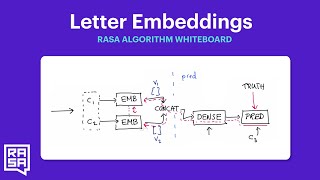

Rasa Algorithm Whiteboard - Understanding Word Embeddings 1: Just Letters

We're making a few videos that highlight word embeddings. Before training word embeddings we figured it might help the intuition if we first trained some letter embeddings. It might surprise you, but the idea behind an embedding can also be demonstrated with letters as opposed to words. We're

From playlist Algorithm Whiteboard

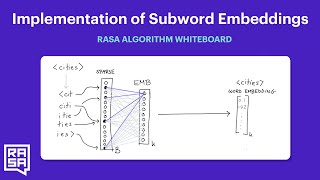

Rasa Algorithm Whiteboard - Implementation of Subword Embeddings

In this video we highlight how you might construct a neural network that can handle subword embeddings.

From playlist Algorithm Whiteboard

Jasna Urbančič (11/03/21): Optimizing Embedding using Persistence

Title: Optimizing Embedding using Persistence Abstract: We look to optimize Takens-type embeddings of a time series using persistent (co)homology. Such an embedding carries information about the topology and geometry of the dynamics of the time series. Assuming that the input time series

From playlist AATRN 2021

PowerPoint Quick Tip: Embed Fonts Within a File

In this video, you’ll learn more about embedding fonts within a file. Visit https://www.gcflearnfree.org/powerpoint-tips/embed-fonts-within-a-file/1/ to learn even more. We hope you enjoy!

From playlist Microsoft PowerPoint

How to Embed a Twitter Feed to a Google Sites Page

How to embed a Twitter feed (widget) directly into a Google Site page. Duane Habecker: https://about.me/duanehabecker Twitter: @dhabecker

From playlist EdTech Stuff

Understanding the Origins of Bias in Word Embeddings

Toronto Deep Learning Series Author Speaking For more details, visit https://tdls.a-i.science/events/2019-03-18/ Speaker: Marc Etienne Brunet (author) Facilitator: Waseem Gharbieh Abstract: The power of machine learning systems not only promises great technical progress, but risks soc

From playlist Natural Language Processing

NJIT Data Science Seminar: Steven Skiena, Stony Brook University

NJIT Institute for Data Science https://datascience.njit.edu/ Word and Graph Embeddings for Machine Learning Steven Skiena, Ph.D. Distinguished Professor Department of Computer Science Stony Brook University Distributed word embeddings (word2vec) provide a powerful way to reduce large

From playlist Talks

Paper Read Aloud: Interactive Refinement of Cross-Lingual Word Embeddings

An experiment! I recorded this a while ago but didn't post it until now because ... 2020. A long time ago, a blind student once asked me to record myself reading my papers when he found that I do that anyway during my editing process, so I finally did it. This is an experiment, feedback

From playlist Papers Read Aloud

CS224W: Machine Learning with Graphs | 2021 | Lecture 3.1 - Node Embeddings

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3Cv1BEU Jure Leskovec Computer Science, PhD From previous lectures we see how we can use machine learning with feature engineering to make predictions on nodes, li

From playlist Stanford CS224W: Machine Learning with Graphs

On embeddings of manifolds - Dishant Mayurbhai Pancholi

Seminar in Analysis and Geometry Topic: On embeddings of manifolds Speaker: Dishant Mayurbhai Pancholi Affiliation: Chennai Mathematical Institute; von Neumann Fellow, School of Mathematics Date: October 26, 2021 We will discuss embeddings of manifolds with a view towards applications i

From playlist Mathematics

Adding vs. concatenating positional embeddings & Learned positional encodings

When to add and when to concatenate positional embeddings? What are arguments for learning positional encodings? When to hand-craft them? Ms. Coffee Bean answers these questions in this video. Outline: 00:00 Concatenated vs. added positional embeddings 04:49 Learned positional embedding

From playlist The Transformer explained by Ms. Coffee Bean

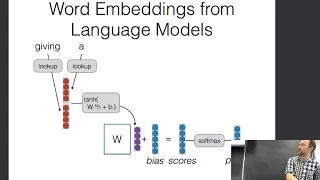

CMU Neural Nets for NLP 2017 (3): Models of Words

This lecture (by Graham Neubig) for CMU CS 11-747, Neural Networks for NLP (Fall 2017) covers: * Describing a word by the company that it keeps * Counting and predicting * Skip-grams and CBOW * Evaluating/Visualizing Word Vectors * Advanced Methods for Word Vectors Slides: http://phontron

From playlist CMU Neural Nets for NLP 2017

Learn low-dim Embeddings that encode GRAPH structure (data) : "Representation Learning" /arXiv

Optimize your complex Graph Data before applying Neural Network predictions. Automatically learn to encode graph structure into low-dimensional embeddings, using techniques based on deep learning and nonlinear dimensionality reduction. An encoder-decoder perspective, random walk approach

From playlist Learn Graph Neural Networks: code, examples and theory

How to Embed a Twitter Feed to a Blogger Post

How to embed a Twitter feed (widget) directly into a Blogger post. Duane Habecker: https://about.me/duanehabecker Twitter: @dhabecker

From playlist EdTech Stuff

Kaggle Reading Group: Universal Sentence Encoder (Part 2) | Kaggle

Join Kaggle Data Scientist Rachael as she reads through the paper "Universal Sentence Encoder" by Cer et al. (Unpublished.) Link to paper: https://arxiv.org/pdf/1803.11175.pdf Repo with code: https://github.com/tensorflow/tfjs-models/tree/master/universal-sentence-encoder SUBSCRIBE: https:

From playlist Kaggle Reading Group | Kaggle