What are bounded functions and how do you determine the boundedness

👉 Learn about the characteristics of a function. Given a function, we can determine the characteristics of the function's graph. We can determine the end behavior of the graph of the function (rises or falls left and rises or falls right). We can determine the number of zeros of the function…

From playlist Characteristics of Functions
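
For reference, these are the standard textbook definitions of boundedness for a function f on a domain D. They are given here as background and are not taken from the video.

```latex
\begin{align*}
f \text{ is bounded below on } D &\iff \exists\, m \in \mathbb{R}\ \forall x \in D:\ f(x) \ge m,\\
f \text{ is bounded above on } D &\iff \exists\, M \in \mathbb{R}\ \forall x \in D:\ f(x) \le M,\\
f \text{ is bounded on } D &\iff \exists\, K \ge 0\ \forall x \in D:\ |f(x)| \le K.
\end{align*}
```

A function is bounded exactly when it is bounded both above and below (take K = max(|m|, |M|)). For example, on the real line sin x is bounded (−1 ≤ sin x ≤ 1), x² is bounded below by 0 but not above, and x³ is bounded neither above nor below.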

How to evaluate the limit of a function by observing its graph

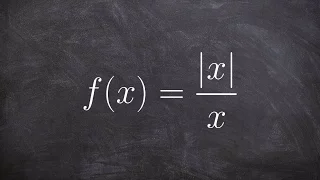

👉 Learn how to evaluate the limit of an absolute value function. The limit of a function as its input variable tends to a given value is the value that the function's output approaches. The absolute value function is a function which only takes the positive val…

From playlist Evaluate Limits of Absolute Value
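
A minimal numerical sketch of left- and right-hand limits, assuming the illustrative functions |x|/x and |x − 2| (chosen here, not taken from the videos). Evaluating f at points ever closer to a from each side suggests each one-sided limit; the two-sided limit exists only when they agree. Sampling like this can only suggest a limit, not prove it, and the helper names are hypothetical.

```python
def approach(f, a, side, steps=6):
    """Evaluate f at a - 10**-k (side="left") or a + 10**-k (side="right") for k = 1..steps."""
    sign = -1.0 if side == "left" else 1.0
    return [f(a + sign * 10.0 ** -k) for k in range(1, steps + 1)]


def abs_over_x(x):
    # |x| / x equals -1 for x < 0 and 1 for x > 0; it is undefined at x = 0
    return abs(x) / x


def abs_shifted(x):
    # |x - 2| is continuous, so both one-sided limits at x = 2 equal |2 - 2| = 0
    return abs(x - 2)


left = approach(abs_over_x, 0.0, "left")
right = approach(abs_over_x, 0.0, "right")
print(left[-1], right[-1])  # -1.0 1.0: the one-sided limits differ, so there is no limit at 0

print(approach(abs_shifted, 2.0, "left")[-1], approach(abs_shifted, 2.0, "right")[-1])
```

For |x|/x the values stay at −1 from the left and 1 from the right, while for |x − 2| both sides shrink toward 0.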

When is a function bounded below?

From playlist Characteristics of Functions

Determining the extrema as well as zeros of a polynomial based on the graph

👉 Learn how to determine the extrema from a graph. The extrema of a function are its turning points: the points where the graph changes from increasing to decreasing or vice versa, i.e. where the graph turns. The points where…

From playlist Characteristics of Functions
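
Reading zeros and extrema off a graph can be mimicked numerically: sample the function on a fine grid, look for sign changes of f (zeros) and for places where the graph switches between rising and falling (turning points). This is a sketch under stated assumptions: the example polynomial f(x) = x³ − 3x, the grid, and the helper names are hypothetical, and the results are only as accurate as the grid spacing.

```python
def f(x):
    # Example polynomial: x^3 - 3x = x(x^2 - 3), zeros at 0 and +/- sqrt(3)
    return x**3 - 3 * x


def sample(func, lo, hi, n):
    """Evaluate func at n + 1 evenly spaced points on [lo, hi]."""
    xs = [lo + (hi - lo) * i / n for i in range(n + 1)]
    return xs, [func(x) for x in xs]


def approx_zeros(xs, ys):
    """Grid points where f is exactly 0, or midpoints of intervals where f changes sign."""
    found = []
    for i in range(len(xs) - 1):
        if ys[i] == 0:
            found.append(xs[i])
        elif ys[i] * ys[i + 1] < 0:
            found.append((xs[i] + xs[i + 1]) / 2)
    return found


def turning_points(xs, ys):
    """Interior grid points where the graph switches between increasing and decreasing."""
    found = []
    for i in range(1, len(xs) - 1):
        rise_in, rise_out = ys[i] - ys[i - 1], ys[i + 1] - ys[i]
        if rise_in > 0 and rise_out < 0:
            found.append(("max", xs[i], ys[i]))
        elif rise_in < 0 and rise_out > 0:
            found.append(("min", xs[i], ys[i]))
    return found


xs, ys = sample(f, -3.0, 3.0, 6000)
print(approx_zeros(xs, ys))    # approximately -1.732, 0, 1.732
print(turning_points(xs, ys))  # a local max at x = -1 and a local min at x = 1
```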

Evaluate the limit for a value of a function

From playlist Evaluate Limits of Absolute Value

How to determine the extrema and zeros from the graph of a polynomial

From playlist Characteristics of Functions

Extrema of a function from a graph

From playlist Characteristics of Functions

Learn to evaluate the limit of the absolute value function

From playlist Evaluate Limits of Absolute Value

Using parent graphs to understand the left and right hand limits

From playlist Evaluate Limits of Absolute Value

Loss Functions: Data Science Basics

What are loss functions in the context of machine learning?

From playlist Data Science Basics
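
A loss function turns the gap between true values and a model's predictions into a single number to minimize. As a small illustration (the data are made up, and these two regression losses are common examples rather than the video's specific choices), mean squared error penalizes large residuals quadratically, while mean absolute error weighs them linearly.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared residuals; large errors dominate."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average of absolute residuals; less sensitive to outliers than MSE."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(mean_squared_error(y_true, y_pred))   # 0.375
print(mean_absolute_error(y_true, y_pred))  # 0.5
```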

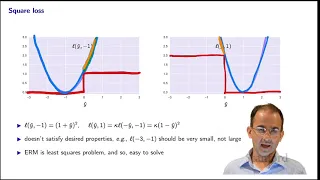

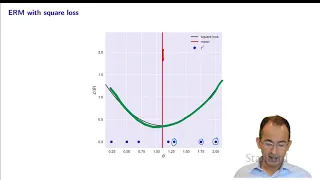

Stanford EE104: Introduction to Machine Learning | 2020 | Lecture 14 - Boolean classification

Professor Sanjay Lall, Electrical Engineering.
To follow along with the course schedule and syllabus, visit: http://ee104.stanford.edu
To view all online courses and programs offered by Stanford, visit: https://online.stanford.edu/

From playlist Stanford EE104: Introduction to Machine Learning Full Course

Lecture 3 | Loss Functions and Optimization

Lecture 3 continues our discussion of linear classifiers. We introduce the idea of a loss function to quantify our unhappiness with a model’s predictions, and discuss two commonly used loss functions for image classification: the multiclass SVM loss and the multinomial logistic regression

From playlist Lecture Collection | Convolutional Neural Networks for Visual Recognition (Spring 2017)
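
The two losses named in this lecture can be sketched for a single example with raw class scores. This assumes the usual hinge formulation with a margin of 1 for the multiclass SVM loss and the softmax (cross-entropy) form of the multinomial logistic regression loss; the scores are illustrative and the function names are hypothetical.

```python
import math


def multiclass_svm_loss(scores, correct, margin=1.0):
    """Sum over incorrect classes j of max(0, s_j - s_correct + margin)."""
    return sum(
        max(0.0, s - scores[correct] + margin)
        for j, s in enumerate(scores)
        if j != correct
    )


def softmax_cross_entropy(scores, correct):
    """Negative log of the softmax probability assigned to the correct class."""
    shift = max(scores)  # subtracting the max score avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    return -math.log(exps[correct] / sum(exps))


scores = [3.2, 5.1, -1.7]  # illustrative scores; class 0 is the correct class
print(multiclass_svm_loss(scores, correct=0))    # about 2.9
print(softmax_cross_entropy(scores, correct=0))  # about 2.04
```

The SVM loss is zero once the correct score beats every other score by the margin, whereas the cross-entropy loss keeps decreasing as the correct class's probability approaches 1.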

Stanford EE104: Introduction to Machine Learning | 2020 | Lecture 15 - multiclass classification

From playlist Stanford EE104: Introduction to Machine Learning Full Course

Cynthia Dwork - The Multi-X Framework Pt. 3/4 - IPAM at UCLA

Recorded 13 July 2022. Cynthia Dwork of Harvard University SEAS presents "The Multi-X Framework" at IPAM's Graduate Summer School on Algorithmic Fairness. Abstract: A third general notion of fairness lies between the individual and group notions. We call this “multi-X,” where “multi” refer

From playlist 2022 Graduate Summer School on Algorithmic Fairness

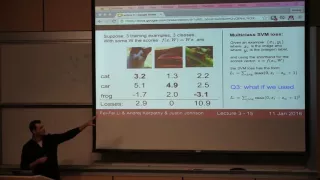

CS231n Lecture 3 - Linear Classification 2, Optimization

Linear classification II
Higher-level representations, image features
Optimization, stochastic gradient descent

From playlist CS231N - Convolutional Neural Networks
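
A minimal sketch of minibatch stochastic gradient descent, assuming a one-feature linear model trained with mean squared error on synthetic, noise-free data (a deliberate simplification of the lecture's image-classification setting). Each step estimates the gradient from a small random batch instead of the full dataset; the learning rate, batch size, and epoch count are arbitrary choices.

```python
import random


def sgd_linear_fit(xs, ys, lr=0.1, batch_size=10, epochs=200, seed=0):
    """Fit y ~ w * x + b by minibatch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the data in a new random order each epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                err = (w * xs[i] + b) - ys[i]
                grad_w += 2.0 * err * xs[i]  # d/dw of err^2
                grad_b += 2.0 * err          # d/db of err^2
            # step against the minibatch-average gradient
            w -= lr * grad_w / len(batch)
            b -= lr * grad_b / len(batch)
    return w, b


data_rng = random.Random(1)
xs = [data_rng.random() for _ in range(200)]
ys = [2.0 * x + 1.0 for x in xs]  # synthetic targets from y = 2x + 1
w, b = sgd_linear_fit(xs, ys)
print(w, b)  # close to 2 and 1
```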

Stanford EE104: Introduction to Machine Learning | 2020 | Lecture 7 - constant predictors

From playlist Stanford EE104: Introduction to Machine Learning Full Course

Many animations used in this video came from Jonathan Barron [1, 2]. Give this researcher a like for his hard work!

Resources:
[1] Paper on adaptive loss function: https://arxiv.org/abs/1701.03077
[2] CVPR paper presentation: https://www.youtube.com/watch?v=Bm

From playlist Deep Learning 101
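
As a hedged sketch, assuming the loss discussed here is the general robust loss ρ(x, α, c) from Jonathan Barron's paper (arXiv 1701.03077), where α controls the shape and c the scale, the function below evaluates it and treats α = 2, α = 0 and α = −∞ as the limiting cases. The function name is hypothetical, and the adaptive part (learning α during training) is not shown.

```python
import math


def general_robust_loss(x, alpha, c=1.0):
    """Barron-style general robust loss rho(x, alpha, c) (assumed form, see lead-in)."""
    z2 = (x / c) ** 2
    if alpha == 2:
        return 0.5 * z2                   # limit alpha -> 2: squared (L2) loss
    if alpha == 0:
        return math.log(0.5 * z2 + 1.0)   # limit alpha -> 0: Cauchy / Lorentzian loss
    if math.isinf(alpha) and alpha < 0:
        return 1.0 - math.exp(-0.5 * z2)  # limit alpha -> -inf: Welsch loss
    b = abs(alpha - 2)
    return (b / alpha) * ((z2 / b + 1.0) ** (alpha / 2) - 1.0)


for a in (2, 1, 0, -2, -math.inf):
    print(a, general_robust_loss(3.0, a))  # larger alpha penalizes the outlier x = 3 more
```

Lowering α flattens the loss for large residuals, which is what makes it robust to outliers.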

Backpropagation explained | Part 3 - Mathematical observations

We have focused on the mathematical notation and definitions that we will use to show how backpropagation calculates the gradient of the loss function. In this video we start applying what we learned, so it's crucial that y…

From playlist Deep Learning Fundamentals - Intro to Neural Networks
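
Backpropagation applies the chain rule from the loss back to each parameter. A minimal sketch, assuming a single neuron with a sigmoid activation and squared-error loss (a toy setup with arbitrary values, not the series' notation), computes the gradient analytically and checks it against central finite differences.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def loss(w, b, x, y):
    """Squared error of one sigmoid neuron: (sigmoid(w * x + b) - y) ** 2."""
    return (sigmoid(w * x + b) - y) ** 2


def backprop_grads(w, b, x, y):
    """Gradient of the loss w.r.t. w and b via the chain rule."""
    z = w * x + b             # forward pass: weighted input
    a = sigmoid(z)            # forward pass: activation
    dL_da = 2.0 * (a - y)     # derivative of (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)     # derivative of the sigmoid at z
    dL_dz = dL_da * da_dz     # chain rule
    return dL_dz * x, dL_dz   # dz/dw = x and dz/db = 1


def numerical_grads(w, b, x, y, h=1e-6):
    """Central finite differences, used only to check the analytic gradient."""
    gw = (loss(w + h, b, x, y) - loss(w - h, b, x, y)) / (2 * h)
    gb = (loss(w, b + h, x, y) - loss(w, b - h, x, y)) / (2 * h)
    return gw, gb


w, b, x, y = 0.5, -0.3, 1.7, 1.0
print(backprop_grads(w, b, x, y))
print(numerical_grads(w, b, x, y))  # should agree to many decimal places
```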

Multi-group fairness, loss minimization and indistinguishability - Parikshit Gopalan

Computer Science/Discrete Mathematics Seminar II
Topic: Multi-group fairness, loss minimization and indistinguishability
Speaker: Parikshit Gopalan
Affiliation: VMware Research
Date: April 12, 2022
Training a predictor to minimize a loss function fixed in advance is the dominant paradigm

From playlist Mathematics

Learn how to evaluate left and right hand limits of a function

From playlist Evaluate Limits of Absolute Value