Stochastic Gradient Descent, Clearly Explained!!!

Even though Stochastic Gradient Descent sounds fancy, it is just a simple addition to "regular" Gradient Descent. This video sets up the problem that Stochastic Gradient Descent solves and then shows how it does it. Along the way, we discuss situations where Stochastic Gradient Descent is

From playlist StatQuest

STRATIFIED, SYSTEMATIC, and CLUSTER Random Sampling (12-4)

To create a Stratified Random Sample, divide the population into smaller subgroups called strata, then use random sampling within each stratum. Strata are formed based on members’ shared (qualitative) characteristics or attributes. Stratification can be proportionate to the population size

From playlist Sampling Distributions in Statistics (WK 12 - QBA 237)

Mini Batch Gradient Descent | Deep Learning | with Stochastic Gradient Descent

Mini Batch Gradient Descent is an algorithm that helps to speed up learning when dealing with a large dataset. Instead of updating the weight parameters after assessing the entire dataset, Mini Batch Gradient Descent updates the weight parameters after assessing a small batch of the datase

From playlist Optimizers in Machine Learning

Stochasticity (3), selection versus drift.

This video looks at how the forces of genetic drift and selection work in concert with one another. In particular, it looks at when the forces of selection will be more important than drift in determining the fate of new advantageous or deleterious mutant alleles. The main result is that s

From playlist TAMU: Bio 312 - Evolution | CosmoLearning Biology

Prob & Stats - Markov Chains (8 of 38) What is a Stochastic Matrix?

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what a stochastic matrix is. Next video in the Markov Chains series: http://youtu.be/YMUwWV1IGdk

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

Paolo Guasoni, Lesson I - 18 December 2017

Quantitative Finance Seminars @ SNS. Prof. Paolo Guasoni: Topics in Portfolio Choice

From playlist Quantitative Finance Seminar @ SNS

Silvia Villa - Generalization properties of multiple passes stochastic gradient method

The stochastic gradient method has become an algorithm of choice in machine learning, because of its simplicity and small computational cost, especially when dealing with big data sets. Despite its widespread use, the generalization properties of the variants of stochastic

From playlist Schlumberger workshop - Computational and statistical trade-offs in learning

A friendly introduction to deep reinforcement learning, Q-networks and policy gradients

A video about reinforcement learning, Q-networks, and policy gradients, explained in a friendly tone with examples and figures. Introduction to neural networks: https://www.youtube.com/watch?v=BR9h47Jtqyw Introduction: (0:00) Markov decision processes (MDP): (1:09) Rewards: (5:39) Discou

From playlist Introduction to Deep Learning

Fin Math L11: Numeraire, T-forward measure and interest rates

Welcome to Financial Mathematics. In this lesson we finally enter into the modeling of interest rates, which we will no longer consider constant. This implies that many things we have said until now need to be updated and generalised in order to still hold. The new chapter is available he

From playlist Financial Mathematics

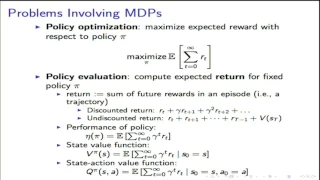

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 2 - Given a Model of the World

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Professor Emma Brunskill, Stanford University https://stanford.io/3eJW8yT Professor Emma Brunskill Assistant Professor, Computer Science Stanford AI for Human

From playlist Stanford CS234: Reinforcement Learning | Winter 2019

See also https://youtu.be/W2pSn_t0KYs and https://youtu.be/x7QYZ4n3A8M

From playlist gradient_descent

A friendly introduction to deep reinforcement learning

Check out Luis Serrano's book 📖 Grokking Machine Learning | http://mng.bz/1reV 📖 To save 40% off this book ⭐ DISCOUNT CODE: twitserr40 ⭐ Luis explains how deep reinforcement learning works, focusing on Q-networks and policy gradients, over a simple example. Previous knowledge of machine

From playlist ML Talks by Luis Serrano

A Friendly Introduction To Deep Reinforcement Learning

In Grokking Machine Learning, expert machine learning engineer Luis Serrano introduces the most valuable ML techniques and teaches you how to make them work for you. You’ll only need high school math to dive into popular approaches and algorithms. Practical examples illustrate each new con

From playlist Machine Learning

Stochastic Approximation-based algorithms, when the Monte (...) - Fort - Workshop 2 - CEB T1 2019

Gersende Fort (CNRS, Univ. Toulouse) / 13.03.2019 Stochastic Approximation-based algorithms, when the Monte Carlo bias does not vanish. Stochastic Approximation algorithms, whose stochastic gradient descent methods with decreasing stepsize are an example, are iterative methods to comput

From playlist 2019 - T1 - The Mathematics of Imaging

From playlist CS294-112 Deep Reinforcement Learning Sp17

Johannes Rauh: Geometry of policy improvement

Recorded during the thematic meeting "Geometrical and Topological Structures of Information" on September 1, 2017 at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent

From playlist Geometry


Lecture 19 | MIT 6.832 Underactuated Robotics, Spring 2009

Lecture 19: Temporal difference learning Instructor: Russell Tedrake See the complete course at: http://ocw.mit.edu/6-832s09 License: Creative Commons BY-NC-SA More information at http://ocw.mit.edu/terms More courses at http://ocw.mit.edu

From playlist MIT 6.832 Underactuated Robotics, Spring 2009