The matrix approach to systems of linear equations | Linear Algebra MATH1141 | N J Wildberger

We summarize the matrix approach to solving systems of linear equations involving augmented matrices and row reduction. We also study the consequences of linearity of the multiplication of a matrix and vector. ************************ Screenshot PDFs for my videos are available at the webs

From playlist Higher Linear Algebra

(3.2.4A) Solving a System of Linear Equations Using an Augmented Matrix

This lesson explains how to solve a system of equations using an augmented matrix. https://mathispower4u.com

From playlist Differential Equations: Complete Set of Course Videos

Solution of Systems of Equations using Augmented Matrices -- 2x2

This lesson from the HSC Algebra 2/Trigonometry course introduces augmented matrices as a method for solving systems of equations.

From playlist Augmented Matrix Solution of Systems of Equations

Introduction to Augmented Matrices

This video introduces augmented matrices for the purpose of solving systems of equations. It also introduces row echelon and reduced row echelon form. http://mathispower4u.yolasite.com/ http://mathispower4u.wordpress.com/

From playlist Augmented Matrices
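
As a rough illustration of the row-reduction idea these augmented-matrix videos describe, here is a minimal sketch that is not taken from any of the videos. The function name `reduce_augmented` and the two example systems are assumptions made for illustration. It treats the last column as the right-hand side and reduces only the coefficient columns, using exact fractions to avoid rounding.

```python
from fractions import Fraction


def reduce_augmented(aug):
    """Gauss-Jordan reduce the coefficient columns of an augmented matrix.

    `aug` is a list of rows; the last entry of each row is the right-hand side.
    Hypothetical helper for illustration only.
    """
    m = [[Fraction(x) for x in row] for row in aug]
    rows, cols = len(m), len(m[0])
    pivot_row = 0
    for col in range(cols - 1):  # skip the right-hand-side column
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, rows) if m[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column (free variable)
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        lead = m[pivot_row][col]
        m[pivot_row] = [x / lead for x in m[pivot_row]]  # scale pivot to 1
        for r in range(rows):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    return m


# x + 2y = 5, 3x + 4y = 6 has the single solution x = -4, y = 9/2.
unique = reduce_augmented([[1, 2, 5], [3, 4, 6]])

# x + y = 1, 2x + 2y = 3 is inconsistent: a row [0, 0 | nonzero] appears.
inconsistent = reduce_augmented([[1, 1, 1], [2, 2, 3]])
```

In the first case the last column of the result holds the solution. In the second, the final row has zero coefficients and a nonzero right-hand side, which indicates that the system has no solution.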

Ex 2: Solve a System of Two Equations Using an Augmented Matrix (Reduced Row Echelon Form)

This video explains how to solve a system of equations by writing an augmented matrix in reduced row echelon form. This example has no solution. Site: http://mathispower4u.com

From playlist Augmented Matrices

Determining Inverse Matrices Using Augmented Matrices

This video explains how to determine the inverse of a matrix using augmented matrices. http://mathispower4u.yolasite.com/ http://mathispower4u.wordpress.com/

From playlist Inverse Matrices
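
The inverse-by-augmentation technique named above can be sketched as follows: append the identity to A, row reduce until the left block becomes the identity, and read the inverse off the right block. This is a minimal sketch under stated assumptions; the function name `inverse_via_augmentation` and the sample matrix are hypothetical and not from the video.

```python
from fractions import Fraction


def inverse_via_augmentation(a):
    """Invert a square matrix by row reducing [A | I] to [I | A^-1].

    Hypothetical helper for illustration; raises ValueError if A is singular.
    """
    n = len(a)
    # Build the augmented matrix [A | I] with exact rational entries.
    m = [
        [Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
        for i, row in enumerate(a)
    ]
    for col in range(n):
        # Look for a usable pivot at or below the diagonal.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        lead = m[col][col]
        m[col] = [x / lead for x in m[col]]  # scale pivot to 1
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    # The right-hand block now holds the inverse.
    return [row[n:] for row in m]


inv = inverse_via_augmentation([[2, 1], [5, 3]])
```

For this sample matrix the determinant is 1, so the inverse has integer entries; a singular input such as `[[1, 2], [2, 4]]` raises `ValueError` instead.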

Augmented Matrices: Solve a 3 by 5 Linear System

This video explains how to solve a system of 3 equations with 5 unknowns using an augmented matrix.

From playlist Augmented Matrices

Stanford ENGR108: Introduction to Applied Linear Algebra | 2020 | Lecture 54-VMLS aug Lagragian mthd

Professor Stephen Boyd Samsung Professor in the School of Engineering Director of the Information Systems Laboratory To follow along with the course schedule and syllabus, visit: https://web.stanford.edu/class/engr108/ To view all online courses and programs offered by Stanford, visit:

From playlist Stanford ENGR108: Introduction to Applied Linear Algebra —Vectors, Matrices, and Least Squares

Ex 1: Solve a System of Two Equations Using an Augmented Matrix (Reduced Row Echelon Form)

This video explains how to solve a system of equations by writing an augmented matrix in reduced row echelon form. This example has one solution. Site: http://mathispower4u.com

From playlist Augmented Matrices

Fabian Faulstich - pure state v-representability of density matrix embedding - augmented lagrangian

Recorded 31 March 2022. Fabian Faulstich of the University of California, Berkeley, Mathematics, presents "On the pure state v-representability of density matrix embedding theory—an augmented lagrangian approach" at IPAM's Multiscale Approaches in Quantum Mechanics Workshop. Abstract: Dens

From playlist 2022 Multiscale Approaches in Quantum Mechanics Workshop

Quantitative Legendrian geometry - Michael Sullivan

Joint IAS/Princeton/Montreal/Paris/Tel-Aviv Symplectic Geometry Zoominar Topic: Quantitative Legendrian geometry Speaker: Michael Sullivan Affiliation: University of Massachusetts, Amherst Date: January 14, 2022 I will discuss some quantitative aspects for Legendrians in a (more or less

From playlist Mathematics

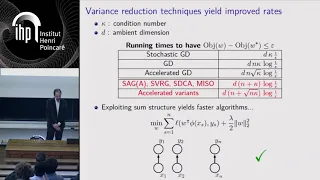

Stephen Wright: "Sparse and Regularized Optimization, Pt. 2"

Graduate Summer School 2012: Deep Learning, Feature Learning "Sparse and Regularized Optimization, Pt. 2" Stephen Wright, University of Wisconsin-Madison Institute for Pure and Applied Mathematics, UCLA July 17, 2012 For more information: https://www.ipam.ucla.edu/programs/summer-school

From playlist GSS2012: Deep Learning, Feature Learning

Data Assimilation on Adaptive Meshes - Sampson - Workshop 2 - CEB T3 2019

Sampson (U North Carolina in Chapel Hill, USA) / 14.11.2019 Data Assimilation on Adaptive Meshes

From playlist 2019 - T3 - The Mathematics of Climate and the Environment

An SDCA-powered inexact dual augmented Lagrangian method(...) - Obozinski - Workshop 3 - CEB T1 2019

Guillaume Obozinski (Swiss Data Science Center) / 02.04.2019 An SDCA-powered inexact dual augmented Lagrangian method for fast CRF learning I'll present an efficient dual augmented Lagrangian formulation to learn conditional random field (CRF) models. The algorithm, which can be interpr

From playlist 2019 - T1 - The Mathematics of Imaging

Assimilation of Lagrangian data - Chris Jones

PROGRAM: Data Assimilation Research Program Venue: Centre for Applicable Mathematics-TIFR and Indian Institute of Science Dates: 04 - 23 July, 2011 DESCRIPTION: Data assimilation (DA) is a powerful and versatile method for combining observational data of a system with its dynamical mod

From playlist Data Assimilation Research Program

Mathieu Laurière: Mean field type control with congestion

Abstract: The theory of mean field type control (or control of McKean-Vlasov) aims at describing the behaviour of a large number of agents using a common feedback control and interacting through some mean field term. The solution to this type of control problem can be seen as a collaborat

From playlist Numerical Analysis and Scientific Computing

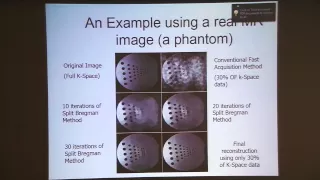

Stanley Osher: "Compressed Sensing: Recovery, Algorithms, and Analysis"

Graduate Summer School 2012: Deep Learning, Feature Learning "Compressed Sensing: Recovery, Algorithms, and Analysis" Stanley Osher, UCLA Institute for Pure and Applied Mathematics, UCLA July 20, 2012 For more information: https://www.ipam.ucla.edu/programs/summer-schools/graduate-summe

From playlist GSS2012: Deep Learning, Feature Learning

A Microlocal Invitation to Lagrangian Fillings - Roger Casals

Joint IAS/Princeton/Montreal/Paris/Tel-Aviv Symplectic Geometry Zoominar Topic: A Microlocal Invitation to Lagrangian Fillings Speaker: Roger Casals Affiliation: University of California Davis Date: November 11, 2022 We present recent developments in symplectic geometry and explain how t

From playlist Mathematics

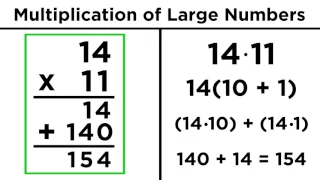

Multiplication of Large Numbers

Have you ever met someone that can multiply big numbers in their head very fast? Here's what most people don't realize. They aren't doing that thing from school in their head. They are using a much simpler approach that utilizes the distributive property. In this clip, we will learn the cl

From playlist Mathematics (All Of It)