Optimization algorithms and methods

Newton's method in optimization

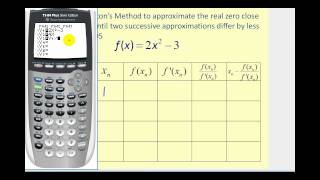

In calculus, Newton's method is an iterative method for finding the roots of a differentiable function F, that is, solutions to the equation F(x) = 0. Newton's method can therefore be applied to the derivative f′ of a twice-differentiable function f to find the roots of the derivative (solutions to f′(x) = 0), also known as the critical points of f. These solutions may be minima, maxima, or saddle points; see the section "Several variables" in Critical point (mathematics). This matters in optimization, which aims to find (global) minima of the function f, because a critical point found this way is only a candidate minimum and still has to be classified. (Wikipedia).
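As a minimal sketch of the idea above, the following Python snippet applies the Newton root-finding update to f′, i.e. x ← x − f′(x) / f″(x), for a single variable. The function name `newton_critical_point`, the tolerance and iteration defaults, and the test function f(x) = x⁴ − 3x² + 2 are illustrative assumptions, not taken from the source. The two starting points are chosen to show that the iteration can land on a maximum as readily as on a minimum.

```python
from typing import Callable


def newton_critical_point(
    df: Callable[[float], float],
    d2f: Callable[[float], float],
    x0: float,
    tol: float = 1e-10,   # assumed stopping tolerance on the step size
    max_iter: int = 50,   # assumed iteration cap
) -> float:
    """Apply Newton's root-finding iteration to f' to locate a critical point of f."""
    x = x0
    for _ in range(max_iter):
        curvature = d2f(x)
        if curvature == 0.0:
            raise ZeroDivisionError("f''(x) is zero; the Newton step is undefined")
        step = df(x) / curvature  # Newton step for solving f'(x) = 0
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("Newton iteration did not converge")


# Hypothetical example function: f(x) = x**4 - 3*x**2 + 2
def df(x: float) -> float:
    return 4 * x**3 - 6 * x    # first derivative f'(x)


def d2f(x: float) -> float:
    return 12 * x**2 - 6       # second derivative f''(x)


x_min = newton_critical_point(df, d2f, x0=1.0)  # starts near a local minimum
x_max = newton_critical_point(df, d2f, x0=0.1)  # starts near the local maximum at 0

# The sign of f'' classifies each critical point in one dimension.
print(x_min, "minimum" if d2f(x_min) > 0 else "not a minimum")
print(x_max, "maximum" if d2f(x_max) < 0 else "not a maximum")
```

Checking the sign of f″ at the returned point is one simple way to tell a minimum from a maximum in one dimension. Even then the iteration only finds a local critical point, so it gives no guarantee of reaching a global minimum.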