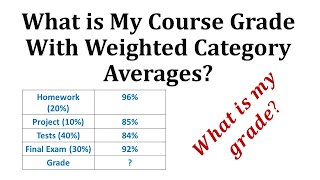

Ex: Find a Course Percentage and Grade Using a Weighted Average

This video explains how to find a percentage and grade using a weighted average based upon categories. Site: http://mathispower4u.com Blog: http://mathispower4u.com

From playlist Solving Linear Equation Application Problems
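
The weighted-average calculation this video covers can be sketched in a few lines of Python. This is a minimal illustration, not the video's own worked example: the category names, weights, point totals, and the 90/80/70/60 letter scale are all assumed values chosen for the sketch.

```python
def weighted_course_percentage(categories):
    """Return the course percentage from {name: (weight, earned, possible)}.

    Weights are fractions of the course grade and must sum to 1.
    """
    total_weight = sum(weight for weight, _, _ in categories.values())
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("category weights must sum to 1")
    # Each category contributes its weight times the fraction of points earned.
    return 100 * sum(
        weight * earned / possible
        for weight, earned, possible in categories.values()
    )


def letter_grade(percentage):
    """Map a percentage to a letter using an assumed 90/80/70/60 scale."""
    for cutoff, letter in ((90, "A"), (80, "B"), (70, "C"), (60, "D")):
        if percentage >= cutoff:
            return letter
    return "F"


# Hypothetical gradebook: (weight, points earned, points possible).
example = {
    "homework": (0.20, 180, 200),  # 90% in the category
    "quizzes": (0.30, 80, 100),    # 80% in the category
    "exams": (0.50, 210, 300),     # 70% in the category
}

pct = weighted_course_percentage(example)
print(f"{pct:.1f}% -> {letter_grade(pct)}")
```

With these assumed numbers the exam category dominates because it carries half the weight, so the course percentage lands closer to the exam score than to the homework score.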

Ex: Find a Score Needed for a Specific Average

This video provides an example of how to determine a needed test score to have a specific average of 5 tests. Search Complete Library at http://www.mathispower4u.wordpress.com

From playlist Mean, Median, and Mode
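
The idea behind this kind of problem is that the mean of n tests equals the target only if the scores sum to n times the target, so the missing score is that required total minus the known scores. A minimal sketch, assuming four hypothetical test scores and a target average of 85 (neither is taken from the video):

```python
def score_needed(known_scores, target_average, total_tests):
    """Return the score required on the one remaining test.

    Solves (sum(known_scores) + x) / total_tests = target_average for x.
    """
    if len(known_scores) != total_tests - 1:
        raise ValueError("exactly one test score must be unknown")
    return target_average * total_tests - sum(known_scores)


# Hypothetical scores on the first four of five tests.
needed = score_needed([82, 75, 91, 88], target_average=85, total_tests=5)
print(needed)
```

A result above the maximum possible test score would mean the target average is out of reach.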

If you are interested in learning more about this topic, please visit http://www.gcflearnfree.org/ to view the entire tutorial on our website. It includes instructional text, informational graphics, examples, and even interactives for you to practice and apply what you've learned.

From playlist Machine Learning

Ex: Find Grade Category Percentages and Course Grade Percentage Based on Total Points

This video explains how to find the percentage grade in different categories and the course percentage based upon total points earned. Site: http://mathispower4u.com Blog: http://mathispower4u.com

From playlist Solving Linear Equation Application Problems

What is the ROI of Going to College?

Make getting into college easier with the Checklist Program: https://bit.ly/2AYauMn As college continues to get more and more expensive each year, it can be harder and harder for potential students to determine whether attending is really worth it. On average, universities in the US rais

From playlist Concerning Questions

How to set a passing grade for a lesson

This video will show you how to only open up the rest of your course once the student gets a passing grade from one lesson.

From playlist How to create a lesson in your course

Ex: Find the Points Needed to Receive an A in a Class Based on Total Points

This video explains how to determine how many points need to be earned in order to receive an A grade in a course when the grade is based upon total points earned. Site: http://mathispower4u.com Blog: http://mathispower4u.com

From playlist Solving Linear Equation Application Problems
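
For a total-points course, the points still needed for a given grade are the grade cutoff applied to the course total, minus the points already earned. A minimal sketch, assuming a 90% cutoff for an A and hypothetical point totals (the video's actual numbers are not given here):

```python
def points_needed(earned, total_possible, cutoff_percent=90):
    """Return the points still required to reach cutoff_percent of total_possible.

    Uses integer arithmetic and rounds the required total up, since a
    fractional point cannot be earned. Returns 0 if the cutoff is already met.
    """
    required_total = -(-cutoff_percent * total_possible // 100)  # ceiling division
    return max(0, required_total - earned)


# Hypothetical course: 1000 points in total, 750 graded so far, 700 earned.
still_needed = points_needed(earned=700, total_possible=1000)
remaining = 1000 - 750
print(still_needed, "points needed out of", remaining, "remaining")
```

If the points needed exceed the points remaining, the grade is no longer attainable.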

The Most Effective Way to Learn Mathematics

In this video we talk about how to learn mathematics effectively. Teaching yourself math can be challenging and in this video we discuss various ideas. Do you have any advice? If so, please leave a comment below. College Algebra Book: https://amzn.to/3mYftoE Math Book for Beginners: https

From playlist Book Reviews

Math – How To Get Better Fast! 3 Powerful Tips

Getting better in math can be easier than you think. Far too many students do not have effective study habits when it comes to learning math. This video will cover some simple yet powerful things you can do to get better at learning math fast. Like my teaching style? You can find math

From playlist Math Study Tips / Motivation

PyTorch LR Scheduler - Adjust The Learning Rate For Better Results

In this PyTorch Tutorial we learn how to use a Learning Rate (LR) Scheduler to adjust the LR during training. Models often benefit from this technique once learning stagnates, and you get better results. We will go over the different methods we can use and I'll show some code examples that

From playlist PyTorch Tutorials - Complete Beginner Course

Lecture 6/16 : Optimization: How to make the learning go faster

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] 6A Overview of mini-batch gradient descent 6B A bag of tricks for mini-batch gradient descent 6C The momentum method 6D A separate, adaptive learning rate for each connection 6E rmsprop: Divide the gradient by a runni

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

NB: Please go to http://course.fast.ai to view this video since there is important updated information there. If you have questions, use the forums at http://forums.fast.ai You will learn more about image classification, covering several core deep learning concepts that are necessary to g

From playlist Deep Learning v2

Learning Rate in a Neural Network explained

In this video, we explain the concept of the learning rate used during training of an artificial neural network and also show how to specify the learning rate in code with Keras.

From playlist Deep Learning Fundamentals - Intro to Neural Networks

Learning Rate Grafting: Transferability of Optimizer Tuning (Machine Learning Research Paper Review)

#grafting #adam #sgd The last years in deep learning research have given rise to a plethora of different optimization algorithms, such as SGD, AdaGrad, Adam, LARS, LAMB, etc. which all claim to have their special peculiarities and advantages. In general, all algorithms modify two major th

From playlist Papers Explained

Live Stream #91: Session 3 of “Intelligence and Learning”

In this live stream, I introduce the concept of "machine learning" and build a simple movie recommendation engine. In honor of May the 4th (Star Wars Day), I use a dataset of ratings for the Star Wars movies to create an algorithm that predicts star ratings for movies you haven't seen yet.

From playlist Live Stream Archive

🔴 LIVE 🔴 Studying Recommender Systems (pt. 2)

Learning from Recommender Systems: The Textbook (affiliate, helps channel): https://amzn.to/3JJakdb Learning from Recommender Systems: The Textbook (non-affiliate link): https://amzn.to/3HZf4dm Might take a look at different stuff too

From playlist Streams

🔴 LIVE 🔴 CAN WE BUILD A RECOMMENDER SYSTEM???

Let's see what happens. The course looked at is the Machine Learning Specialization by Andrew Ng (affiliate): https://bit.ly/3hjTBBt Specialization review: https://youtu.be/piBjsbwPwdk

From playlist Streams

Gradient Descent Machine Learning | Gradient Descent Algorithm | Stochastic Gradient Descent Edureka

🔥Edureka PG Diploma in AI & Machine Learning from E & ICT Academy of NIT Warangal (Use Code: YOUTUBE20): https://www.edureka.co/executive-programs/machine-learning-and-ai This Edureka video on 'Gradient Descent Machine Learning' will give you an overview of Gradient Descent Algorithm and

From playlist Data Science Training Videos

AdaGrad Optimizer For Gradient Descent

#ml #machinelearning Learning rate optimizer

From playlist Optimizers in Machine Learning

We’ll look at each of the 5 steps in the Study Cycle, and show how they work together to improve your study habits. The steps are Preview, Attend class, Review, Study, and Check in. By going through each of these steps, you’ll be able to better prepare for your classes, and you’ll also

From playlist Fundamentals of Learning