International Organizations - 3.7 EU

European Union

From playlist Plaid Avenger: GEOG 1014 - Geography of World Regions | CosmoLearning Geography

Lecture 11 | Convex Optimization II (Stanford)

Lecture by Professor Stephen Boyd for Convex Optimization II (EE 364B) in the Stanford Electrical Engineering department. Professor Boyd lectures on Sequential Convex Programming. This course introduces topics such as subgradient, cutting-plane, and ellipsoid methods. Decentralized conv

From playlist Lecture Collection | Convex Optimization

11. Unconstrained Optimization; Newton-Raphson and Trust Region Methods

MIT 10.34 Numerical Methods Applied to Chemical Engineering, Fall 2015 View the complete course: http://ocw.mit.edu/10-34F15 Instructor: James Swan Students learned how to solve unconstrained optimization problems. In addition to the Newton-Raphson method, students also learned the steepe

From playlist MIT 10.34 Numerical Methods Applied to Chemical Engineering, Fall 2015

Optimisation - an introduction: Professor Coralia Cartis, University of Oxford

Coralia Cartis (BSc Mathematics, Babeș-Bolyai University, Romania; PhD Mathematics, University of Cambridge (2005)) joined the Mathematical Institute at Oxford and Balliol College in 2013 as Associate Professor in Numerical Optimization. Previously, she worked as a research scientist

From playlist Data science classes

These are the Ocean's Protected Areas—and We Need More | National Geographic

The ocean faces many challenges, but has the extraordinary power to replenish when it is protected. Marine protected areas facilitate resilience and recovery for degraded areas of the ocean, and offer opportunities to rebuild stocks of commercially important species. Additionally, protec

From playlist News | National Geographic

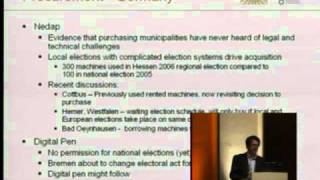

23C3: Hacking the Electoral Law

Speaker: Ulrich Wiesner How the Ministry of the Interior turns fundamental election principles into their opposite, without even asking the parliament. Public control and transparency of elections, not trust, are well-established principles to prevent electoral fraud in a democracy. Wi

From playlist 23C3: Who can you trust

DDPS | Model reduction with adaptive enrichment for large scale PDE constrained optimization

Talk Abstract Projection based model order reduction has become a mature technique for simulation of large classes of parameterized systems. However, several challenges remain for problems where the solution manifold of the parameterized system cannot be well approximated by linear subspa

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

Stephen Wright: "Nonconvex optimization in matrix optimization and distributionally robust optim..."

Intersections between Control, Learning and Optimization 2020 "Nonconvex optimization in matrix optimization and distributionally robust optimization" Stephen Wright - University of Wisconsin Institute for Pure and Applied Mathematics, UCLA February 27, 2020 For more information: http:/

From playlist Intersections between Control, Learning and Optimization 2020

Katya Scheinberg: "Recent advances in Derivative-Free Optimization and its connection to reinfor..."

Machine Learning for Physics and the Physics of Learning 2019 Workshop I: From Passive to Active: Generative and Reinforcement Learning with Physics "Recent advances in Derivative-Free Optimization and its connection to reinforcement learning" Katya Scheinberg, Cornell University Abstrac

From playlist Machine Learning for Physics and the Physics of Learning 2019

Filippo Lipparini - Black-box optimization of self-consistent field wavefunction, closed/open shells

Recorded 05 May 2022. Filippo Lipparini of the Università di Pisa presents "Black-box optimization of self-consistent field wavefunction for closed and open shell molecules" at IPAM's Large-Scale Certified Numerical Methods in Quantum Mechanics Workshop. Abstract: We present the implementa

From playlist 2022 Large-Scale Certified Numerical Methods in Quantum Mechanics

MIT 14.73 The Challenge of World Poverty, Spring 2011 View the complete course: http://ocw.mit.edu/14-73S11 Instructor: Esther Duflo License: Creative Commons BY-NC-SA More information at http://ocw.mit.edu/terms More courses at http://ocw.mit.edu

From playlist MIT 14.73 The Challenge of World Poverty, Spring 2011

Statistics: Ch 9 Hypothesis Testing (9 of 35) What is the Critical Region? (Intuitive)

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate https://www.patreon.com/user?u=3236071 We will learn the intuitive “feeling” of what we mean by the Critical Region using an example of manufacturing screws that should hav

From playlist STATISTICS CH 9 HYPOTHESIS TESTING

DDPS | Bayesian Optimization: Exploiting Machine Learning Models, Physics, & Throughput Experiments

We report new paradigms for Bayesian Optimization (BO) that enable the exploitation of large-scale machine learning models (e.g., neural nets), physical knowledge, and high-throughput experiments. Specifically, we present a paradigm that decomposes the performance function into a reference

From playlist Data-driven Physical Simulations (DDPS) Seminar Series