High dimensional estimation via Sum-of-Squares Proofs – D. Steurer & P. Raghavendra – ICM2018

Mathematical Aspects of Computer Science, Invited Lecture 14.6: High dimensional estimation via Sum-of-Squares Proofs. David Steurer & Prasad Raghavendra. Abstract: Estimation is the computational task of recovering a ‘hidden parameter’ x associated with a distribution 𝒟_x, given a ‘measurem

From playlist Mathematical Aspects of Computer Science

How Pascal's Triangle Help us to Discover Summation Formula

This is part 1 of the series on how to find summation formulas for common sums. We start with the easiest possible case where we sum polynomials, also exposing you to some ideas of induction.

From playlist Summer of Math Exposition Youtube Videos

How to use left hand riemann sums from a table

👉 Learn how to approximate the integral of a function using the Riemann sum approximation. A Riemann sum is an approximation of the area under a curve or between two curves by dividing it into multiple simple shapes like rectangles and trapezoids. In using the Riemann sum to approximate the

From playlist The Integral
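
As a rough illustration of the left-hand and right-hand Riemann sums that these entries describe, here is a minimal Python sketch. The function names and the sample table are hypothetical and are not taken from the videos; the table is assumed to list x-values in increasing order with one function value per x.

```python
def left_riemann_sum(xs, ys):
    """Left-hand Riemann sum from a table: each rectangle uses the
    function value at the left endpoint of its subinterval."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    return sum(ys[i] * (xs[i + 1] - xs[i]) for i in range(len(xs) - 1))


def right_riemann_sum(xs, ys):
    """Right-hand Riemann sum from a table: each rectangle uses the
    function value at the right endpoint of its subinterval."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    return sum(ys[i + 1] * (xs[i + 1] - xs[i]) for i in range(len(xs) - 1))


# Hypothetical table: f(x) = x^2 sampled at x = 0, 1, 2, 3.
xs = [0, 1, 2, 3]
ys = [0, 1, 4, 9]
print(left_riemann_sum(xs, ys))   # 0*1 + 1*1 + 4*1 = 5
print(right_riemann_sum(xs, ys))  # 1*1 + 4*1 + 9*1 = 14
```

Because the sample function is increasing on the interval, the left-hand sum underestimates and the right-hand sum overestimates the exact integral, which is 9.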

How to use left hand riemann sum approximation

👉 Learn how to approximate the integral of a function using the Riemann sum approximation. A Riemann sum is an approximation of the area under a curve or between two curves by dividing it into multiple simple shapes like rectangles and trapezoids. In using the Riemann sum to approximate the

From playlist The Integral

Least squares method for simple linear regression

In this video I show you how to derive the equations for the coefficients of the simple linear regression line. The least squares method for the simple linear regression line, requires the calculation of the intercept and the slope, commonly written as beta-sub-zero and beta-sub-one. Deriv

From playlist Machine learning
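
The intercept and slope mentioned in this entry have a standard closed form: the slope is the sum of cross-deviations divided by the sum of squared x-deviations, and the intercept follows from the means. The sketch below assumes that textbook form; the function name `fit_line` and the sample data are hypothetical, and the video's own derivation may be organized differently.

```python
def fit_line(xs, ys):
    """Least squares fit of y = beta0 + beta1 * x.

    Returns (beta0, beta1), the intercept and slope that minimize the
    sum of squared residuals.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Sum of squared deviations of x, and of cross-deviations of x and y.
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    beta1 = sxy / sxx
    beta0 = y_mean - beta1 * x_mean
    return beta0, beta1


# Hypothetical data lying exactly on y = 1 + 2x.
beta0, beta1 = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(beta0, beta1)
```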

How to use right hand riemann sum give a table

👉 Learn how to approximate the integral of a function using the Riemann sum approximation. A Riemann sum is an approximation of the area under a curve or between two curves by dividing it into multiple simple shapes like rectangles and trapezoids. In using the Riemann sum to approximate the

From playlist The Integral

Solve equation using the sum and difference formulas

👉 Learn how to solve equations using the angles sum and difference identities. Using the angles sum and difference identities, we are able to expand the trigonometric expressions, thereby obtaining the values of the non-variable terms. The variable terms are easily simplified by combining

From playlist Sum and Difference Formulas

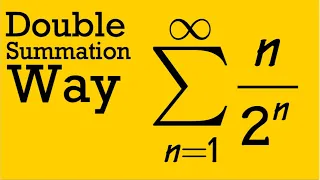

series of n/2^n as a double summation

We will evaluate the infinite series of n/2^n by using the double summation technique. Thanks to Johannes for the solution. Summation by parts approach by Michael Penn: https://youtu.be/mNIsJ0MgdmU

From playlist Sum, math for fun
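
One way the double summation technique named in this entry can be carried out is sketched below. This is a standard argument and may differ in detail from the video's presentation. Write each n as a sum of n ones, then swap the order of summation, which is allowed because every term is nonnegative.

```latex
\[
\sum_{n=1}^{\infty} \frac{n}{2^n}
  = \sum_{n=1}^{\infty} \sum_{k=1}^{n} \frac{1}{2^n}
  = \sum_{k=1}^{\infty} \sum_{n=k}^{\infty} \frac{1}{2^n}
  = \sum_{k=1}^{\infty} \frac{1}{2^{k-1}}
  = 2 .
\]
```

The inner sum in the third expression is a geometric series with first term 1/2^k and ratio 1/2, so it equals 1/2^{k-1}.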

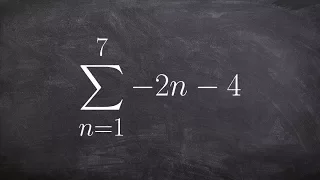

Learn to use summation notation for an arithmetic series to find the sum

👉 Learn how to find the partial sum of an arithmetic series. A series is the sum of the terms of a sequence. An arithmetic series is the sum of the terms of an arithmetic sequence. The formula for the sum of n terms of an arithmetic sequence is given by Sn = n/2 [2a + (n - 1)d], where a is

From playlist Series
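
The partial-sum formula quoted in this entry, Sn = n/2 [2a + (n - 1)d], can be checked with a short Python sketch. It assumes the usual meaning of the symbols (a is the first term, d the common difference, n the number of terms); the function name is hypothetical.

```python
def arithmetic_partial_sum(a, d, n):
    """Sum of the first n terms of an arithmetic sequence with first
    term a and common difference d, using S_n = n/2 * (2a + (n - 1)d)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return n * (2 * a + (n - 1) * d) / 2


# Hypothetical example: 3 + 7 + 11 + 15 + 19 (a = 3, d = 4, n = 5).
closed_form = arithmetic_partial_sum(3, 4, 5)
term_by_term = sum(3 + 4 * k for k in range(5))
print(closed_form, term_by_term)  # both equal 55
```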

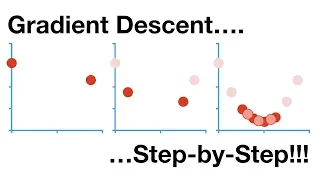

Gradient Descent, Step-by-Step

Gradient Descent is the workhorse behind most of Machine Learning. When you fit a machine learning method to a training dataset, you're probably using Gradient Descent. It can optimize parameters in a wide variety of settings. Since it's so fundamental to Machine Learning, I decided to mak

From playlist Optimizers in Machine Learning

Backpropagation Details Pt. 1: Optimizing 3 parameters simultaneously.

The main ideas behind Backpropagation are super simple, but there are tons of details when it comes time to implement it. This video shows how to optimize three parameters in a Neural Network simultaneously and introduces some Fancy Notation. NOTE: This StatQuest assumes that you alrea

From playlist StatQuest

Karl Bringmann: Pseudopolynomial-time Algorithms for Optimization Problems

Fine-grained complexity theory is the area of theoretical computer science that proves conditional lower bounds based on conjectures such as the Strong Exponential Time Hypothesis. This enables the design of "best-possible" algorithms, where the running time of the algorithm matches a cond

From playlist Workshop: Parametrized complexity and discrete optimization

Seminar on Applied Geometry and Algebra (SIAM SAGA): Timo de Wolff

Date: Tuesday, March 9 at 11:00am EST (5:00pm CET). Speaker: Timo de Wolff, Technische Universität Braunschweig. Title: Certificates of Nonnegativity and Their Applications in Theoretical Computer Science. Abstract: Certifying nonnegativity of real, multivariate polynomials is a key proble

From playlist Seminar on Applied Geometry and Algebra (SIAM SAGA)

Neural Networks Pt. 2: Backpropagation Main Ideas

Backpropagation is the method we use to optimize parameters in a Neural Network. The ideas behind backpropagation are quite simple, but there are tons of details. This StatQuest focuses on explaining the main ideas in a way that is easy to understand. NOTE: This StatQuest assumes that you

From playlist StatQuest

Nonlinear algebra, Lecture 11: "Semidefinite Programming", by Bernd Sturmfels

This is the eleventh lecture in the IMPRS Ringvorlesung, the advanced graduate course at the Max Planck Institute for Mathematics in the Sciences.

From playlist IMPRS Ringvorlesung - Introduction to Nonlinear Algebra

2. Bentley Rules for Optimizing Work

MIT 6.172 Performance Engineering of Software Systems, Fall 2018 Instructor: Julian Shun View the complete course: https://ocw.mit.edu/6-172F18 YouTube Playlist: https://www.youtube.com/playlist?list=PLUl4u3cNGP63VIBQVWguXxZZi0566y7Wf Prof. Shun discusses Bentley Rules for optimizing work

From playlist MIT 6.172 Performance Engineering of Software Systems, Fall 2018

Sums of Squares and Golden Gates - Peter Sarnak (Princeton)

Through the works of Fermat, Gauss, and Lagrange, we understand which positive integers can be represented as sums of two, three, or four squares. Hilbert's 11th problem, from 1900, extends this question to more general quadratic equations. While much progress has been made since its formu

From playlist Mathematics Research Center

Find two numbers whose product is -16 and the sum of whose squares is a minimum. Practice this yourself on Khan Academy right now: https://www.khanacademy.org/e/optimization?utm_source=YTdescription&utm_medium=YTdescription&utm_campaign=YTdescription

From playlist Calculus
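
A worked solution to the optimization problem stated in this entry can be sketched as follows. This is the standard single-variable calculus approach and is not necessarily the route taken in the video. Call the two numbers x and y, with xy = -16.

```latex
\[
y = -\frac{16}{x}, \qquad
S(x) = x^2 + y^2 = x^2 + \frac{256}{x^2}, \qquad x \neq 0 .
\]
\[
S'(x) = 2x - \frac{512}{x^3} = 0
\;\Longrightarrow\; x^4 = 256
\;\Longrightarrow\; x = \pm 4 .
\]
\[
S''(x) = 2 + \frac{1536}{x^4} > 0
\;\Longrightarrow\; \text{each critical point is a minimum, with } S(\pm 4) = 16 + 16 = 32 .
\]
```

The two numbers are therefore 4 and -4, and the minimum sum of squares is 32.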

Ridge vs Lasso Regression, Visualized!!!

People often ask why Lasso Regression can make parameter values equal 0, but Ridge Regression cannot. This StatQuest shows you why. NOTE: This StatQuest assumes that you are already familiar with Ridge and Lasso Regression. If not, check out the 'Quests. Ridge: https://youtu.be/Q81RR3yKn

From playlist StatQuest

Learning to solve an equation by using the sum and difference formulas

👉 Learn how to solve equations using the angles sum and difference identities. Using the angles sum and difference identities, we are able to expand the trigonometric expressions, thereby obtaining the values of the non-variable terms. The variable terms are easily simplified by combining

From playlist Sum and Difference Formulas