Stochastic Approximation-based algorithms, when the Monte (...) - Fort - Workshop 2 - CEB T1 2019

Gersende Fort (CNRS, Univ. Toulouse) / 13.03.2019 Stochastic Approximation-based algorithms, when the Monte Carlo bias does not vanish. Stochastic Approximation algorithms, of which stochastic gradient descent methods with decreasing stepsize are an example, are iterative methods to comput

From playlist 2019 - T1 - The Mathematics of Imaging

Jana Cslovjecsek: Efficient algorithms for multistage stochastic integer programming using proximity

We consider the problem of solving integer programs of the form min {c^T x : Ax = b, x ≥ 0}, where A is a multistage stochastic matrix. We give an algorithm that solves this problem in fixed-parameter time f(d, ||A||_∞) n log^O(2d) n, where f is a computable function, d is the treed

From playlist Workshop: Parametrized complexity and discrete optimization

Numeric Modeling in Mathematica: Q&A with Mathematica Experts

Mathematica experts answer user-submitted questions about numeric computations during Mathematica Experts Live: Numeric Modeling in Mathematica. For more information about Mathematica, please visit: http://www.wolfram.com/mathematica

From playlist Mathematica Experts Live: Numeric Modeling in Mathematica

Anthony Nouy: Adaptive low-rank approximations for stochastic and parametric equations [...]

Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathematics Library: http://library.cirm-math.fr. And discover all its functionalities: - Chapter markers and keywords to watch the parts of your choice in the video - Videos enriched with abstracts, b

From playlist Numerical Analysis and Scientific Computing

Basic stochastic simulation b: Stochastic simulation algorithm

(C) 2012-2013 David Liao (lookatphysics.com) CC-BY-SA. Specify system. Determine duration until next event. Exponentially distributed waiting times. Determine what kind of reaction next event will be. For more information, please search the internet for "stochastic simulation algorithm" or "kin

From playlist Probability, statistics, and stochastic processes

Approximating Functions in a Metric Space

Approximations are common in many areas of mathematics from Taylor series to machine learning. In this video, we will define what is meant by a best approximation and prove that a best approximation exists in a metric space. Chapters 0:00 - Examples of Approximation 0:46 - Best Aproximati

From playlist Approximation Theory

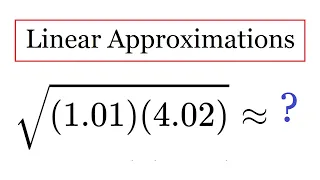

Linear Approximations and Differentials

Linear Approximation. In this video, I explain the concept of a linear approximation, which is just a way of approximating a function of several variables by its tangent planes, and I illustrate this by approximating complicated numbers without using a calculator. Enjoy! Subscribe to my

From playlist Partial Derivatives

Francis Bach: Large-scale machine learning and convex optimization 2/2

Abstract: Many machine learning and signal processing problems are traditionally cast as convex optimization problems. A common difficulty in solving these problems is the size of the data, where there are many observations ("large n") and each of these is large ("large p"). In this settin

From playlist Probability and Statistics

Elias Khalil - Neur2SP: Neural Two-Stage Stochastic Programming - IPAM at UCLA

Recorded 02 March 2023. Elias Khalil of the University of Toronto presents "Neur2SP: Neural Two-Stage Stochastic Programming" at IPAM's Artificial Intelligence and Discrete Optimization Workshop. Abstract: Stochastic Programming is a powerful modeling framework for decision-making under un

From playlist 2023 Artificial Intelligence and Discrete Optimization

Jorge Nocedal: "Tutorial on Optimization Methods for Machine Learning, Pt. 3"

Graduate Summer School 2012: Deep Learning, Feature Learning. "Tutorial on Optimization Methods for Machine Learning, Pt. 3". Jorge Nocedal, Northwestern University. Institute for Pure and Applied Mathematics, UCLA. July 18, 2012. For more information: https://www.ipam.ucla.edu/programs/summ

From playlist GSS2012: Deep Learning, Feature Learning

FinMath L2-2: The general Ito integral 2

Welcome to the second part of lesson 2. In this video we discuss some properties of the (general) Ito integral and introduce the necessary notions to deal with the Ito-Doeblin formula, which will be treated in Lesson 3. Topics: 00:00 A little exercise for you 06:46 Definition of the integ

From playlist Financial Mathematics

Dr Lukasz Szpruch, University of Edinburgh

Bio: I am a Lecturer at the School of Mathematics, University of Edinburgh. Before moving to Scotland I was a Nomura Junior Research Fellow at the Institute of Mathematics, University of Oxford, and a member of Oxford-Man Institute for Quantitative Finance. I hold a Ph.D. in mathematics fr

From playlist Short Talks

DeepMind x UCL | Deep Learning Lectures | 5/12 | Optimization for Machine Learning

Optimization methods are the engines underlying neural networks that enable them to learn from data. In this lecture, DeepMind Research Scientist James Martens covers the fundamentals of gradient-based optimization methods, and their application to training neural networks. Major topics in

From playlist Learning resources

Stochastic Gradient Descent: where optimization meets machine learning - Rachel Ward

2022 Program for Women and Mathematics: The Mathematics of Machine Learning. Topic: Stochastic Gradient Descent: where optimization meets machine learning. Speaker: Rachel Ward. Affiliation: University of Texas, Austin. Date: May 26, 2022. Stochastic Gradient Descent (SGD) is the de facto op

From playlist Mathematics

Introduction to the paper https://arxiv.org/abs/2002.06707

From playlist Research

Stochastic processes by VijayKumar Krishnamurthy

Winter School on Quantitative Systems Biology. DATE: 04 December 2017 to 22 December 2017. VENUE: Ramanujan Lecture Hall, ICTS, Bengaluru. The International Centre for Theoretical Sciences (ICTS) and the Abdus Salam International Centre for Theoretical Physics (ICTP) are organizing a Wint

From playlist Winter School on Quantitative Systems Biology

Deep Learning 5: Optimization for Machine Learning

James Martens, Research Scientist, discusses optimization for machine learning as part of the Advanced Deep Learning & Reinforcement Learning Lectures.

From playlist Learning resources

Francis Bach: Large-scale machine learning and convex optimization 1/2

Abstract: Many machine learning and signal processing problems are traditionally cast as convex optimization problems. A common difficulty in solving these problems is the size of the data, where there are many observations ("large n") and each of these is large ("large p"). In this settin

From playlist Probability and Statistics

Peter Kratz: Large deviations for Poisson driven processes in epidemiology

Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathematics Library: http://library.cirm-math.fr. And discover all its functionalities: - Chapter markers and keywords to watch the parts of your choice in the video - Videos enriched with abstracts, b

From playlist Probability and Statistics