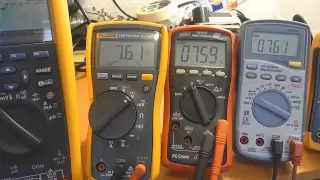

Multimeter Review / DMM Review / buyers guide / tutorial

The multimeters on my list can be purchased here: http://astore.amazon.com/m0711-20?_encoding=UTF8&node=5 In this video I review several digital multimeters and compare their features and functionality. I explain safety features, number of digits, display count, accuracy and resolution. Th

From playlist Multimeter reviews, buyers guide and comparisons.
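The description mentions display count, accuracy and resolution. Here is a rough sketch, assuming the common "±(% of reading + counts)" accuracy format found on many DMM datasheets. The resolution on a range is the full-scale value divided by the display count. The worst-case error adds a percentage of the reading to a fixed number of counts. The meter, range and spec below are hypothetical and are not taken from the video.

```python
# Sketch: DMM resolution and worst-case error, assuming the common
# "±(pct% of reading + N counts)" accuracy format. Numbers are hypothetical.


def resolution(full_scale: float, display_count: int) -> float:
    """Value of one count (the least significant digit) on a given range."""
    return full_scale / display_count


def tolerance(reading: float, pct_of_reading: float, counts: int,
              full_scale: float, display_count: int) -> float:
    """Worst-case error: a percentage of the reading plus a fixed number of counts."""
    one_count = resolution(full_scale, display_count)
    return abs(reading) * pct_of_reading / 100.0 + counts * one_count


if __name__ == "__main__":
    # Hypothetical 6000-count meter on its 6 V range, spec ±(0.5% + 2 counts).
    full_scale, display_count = 6.0, 6000
    reading = 5.000
    tol = tolerance(reading, 0.5, 2, full_scale, display_count)
    print(f"one count = {resolution(full_scale, display_count):.3f} V")
    print(f"true value between {reading - tol:.3f} V and {reading + tol:.3f} V")
```

Take the hypothetical 6000-count meter on its 6 V range. One count is 1 mV. A 5.000 V reading with a ±(0.5% + 2 counts) spec puts the true voltage roughly between 4.973 V and 5.027 V.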

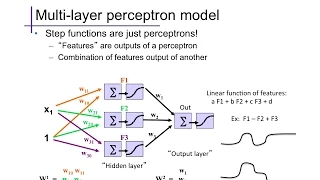

The basic form of a feed-forward multi-layer perceptron / neural network; example activation functions.

From playlist cs273a
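The feed-forward form fits in a few lines of code. Each layer computes a weighted sum of its inputs plus a bias and passes the result through an activation function. The layers are then applied one after another. This is a minimal pure-Python sketch. The 2-3-1 layer sizes, the weights and the helper names (`dense`, `mlp_forward`) are illustrative assumptions. Sigmoid, tanh and ReLU stand in for the example activation functions.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # W[j][i]: weight from input i to unit j
Layer = Tuple[Matrix, Vector, Callable[[float], float]]


# Example activation functions
def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z: float) -> float:
    return math.tanh(z)


def relu(z: float) -> float:
    return max(0.0, z)


def dense(x: Vector, W: Matrix, b: Vector, act: Callable[[float], float]) -> Vector:
    """One fully connected layer: act(W x + b), applied element-wise."""
    return [act(sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j)
            for row, b_j in zip(W, b)]


def mlp_forward(x: Vector, layers: List[Layer]) -> Vector:
    """Feed the input through each (W, b, activation) layer in turn."""
    for W, b, act in layers:
        x = dense(x, W, b, act)
    return x


if __name__ == "__main__":
    # Hypothetical 2-3-1 network with hand-picked weights.
    layers = [
        ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu),
        ([[1.0, -1.0, 0.5]], [0.2], sigmoid),
    ]
    print(mlp_forward([1.0, 2.0], layers))
```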

Multilayer Neural Networks - Part 2: Feedforward Neural Networks

This video is about Multilayer Neural Networks - Part 2: Feedforward Neural Networks. Abstract: This is a series of videos about multi-layer neural networks, which will walk through the introduction, the architecture of feedforward fully-connected neural network and its working principle, t

From playlist Neural Networks

Solar panel performance shoot-out - Part 3

This is a performance test between two 55 watt solar panels, one is a monocrystalline and the other is an Amorphous / thin film panel.

From playlist Solar Panel Reviews, Testing and Experiments

Solar panel performance shoot-out - Part 4

A little update on the performance test between two 55 watt solar panels, one is a monocrystalline and the other is an Amorphous / thin film panel.

From playlist Solar Panel Reviews, Testing and Experiments

Multimeter Calibration - Some future testing...

The multimeters on my list can be purchased here: http://astore.amazon.com/m0711-20?_encoding=UTF8&node=5 The calibration of my Major Tech MT22 raised some interesting responses, so we'll take this topic a little further. I have ordered a DMMCheck and PentaRef from Voltagestandard.com.

From playlist Multimeter reviews, buyers guide and comparisons.

Deep Learning Full Course - Learn Deep Learning in 6 Hours | Deep Learning Tutorial | Edureka

** AI & Deep Learning with TensorFlow (Use Code: YOUTUBE20): https://www.edureka.co/ai-deep-learning-with-tensorflow ** This Edureka Deep Learning Full Course video will help you understand and learn Deep Learning & Tensorflow in detail. This Deep Learning Tutorial is ideal for both beginn

From playlist Deep Learning With TensorFlow Videos

Solar panel performance shoot-out - Part 1

This is a performance test between two 55 watt solar panels, one is a monocrystalline (correction from what I said in the video) and the other is an Amorphous / thin film panel.

From playlist Solar Panel Reviews, Testing and Experiments

Deep Belief Nets - Ep. 7 (Deep Learning SIMPLIFIED)

An RBM can extract features and reconstruct input data, but it still lacks the ability to combat the vanishing gradient. However, through a clever combination of several stacked RBMs and a classifier, you can form a neural net that can solve the problem. This net is known as a Deep Belief

From playlist Deep Learning SIMPLIFIED
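Here is a rough illustration of how an RBM extracts features and reconstructs its input: a tiny binary RBM in pure Python. It is a sketch under several assumptions. The hidden-unit probabilities act as the features, and the reconstruction maps them back to the visible layer. Training uses a deterministic variant of one-step contrastive divergence (CD-1) that uses probabilities in place of sampled binary states. The sizes, learning rate and training pattern are hypothetical. Stacking RBMs and adding a classifier to form a Deep Belief Net is not shown.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class RBM:
    """Tiny binary RBM: W[i][j] connects visible unit i to hidden unit j."""

    def __init__(self, n_visible, n_hidden, seed=0):
        rng = random.Random(seed)
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        # p(h_j = 1 | v) = sigmoid(c_j + sum_i v_i W_ij): the extracted features
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_probs(self, h):
        # p(v_i = 1 | h) = sigmoid(b_i + sum_j W_ij h_j): the reconstruction
        return [sigmoid(self.b[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.b))]

    def reconstruct(self, v):
        return self.visible_probs(self.hidden_probs(v))

    def cd1_step(self, v0, lr=0.1):
        """One mean-field CD-1 update (probabilities instead of samples)."""
        h0 = self.hidden_probs(v0)  # positive phase
        v1 = self.visible_probs(h0)  # reconstruction
        h1 = self.hidden_probs(v1)  # negative phase
        for i in range(len(v0)):
            for j in range(len(h0)):
                self.W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
            self.b[i] += lr * (v0[i] - v1[i])
        for j in range(len(h0)):
            self.c[j] += lr * (h0[j] - h1[j])


def recon_error(rbm, v):
    """Squared error between an input and its reconstruction."""
    return sum((a - r) ** 2 for a, r in zip(v, rbm.reconstruct(v)))


if __name__ == "__main__":
    rbm = RBM(n_visible=6, n_hidden=3)
    pattern = [1, 0, 1, 0, 1, 0]  # hypothetical training example
    print("before:", recon_error(rbm, pattern))
    for _ in range(500):
        rbm.cd1_step(pattern)
    print("after:", recon_error(rbm, pattern))
```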

10.5: Neural Networks: Multilayer Perceptron Part 2 - The Nature of Code

This video follows up on the previous Multilayer Perceptron video (https://youtu.be/u5GAVdLQyIg). Here I begin the long process of coding a simple neural network library in JavaScript. Next video: https://youtu.be/uSzGdfdOoG8 This video is part of Chapter 10 of The Nature of Code (http:/

From playlist Session 4 - Neural Networks - Intelligence and Learning

Solar panel performance shoot-out - Part 2

This is a performance test between two 55 watt solar panels, one is a monocrystalline and the other is an Amorphous / thin film panel.

From playlist Solar Panel Reviews, Testing and Experiments

H2O.ai - Ep. 14 (Deep Learning SIMPLIFIED)

H2O.ai is a software platform that offers a host of machine learning algorithms, as well as one deep net model. It also provides sophisticated data munging, an intuitive UI, and several built-in enhancements for handling data. However, the tools must be run on your own hardware. Deep Lea

From playlist Deep Learning SIMPLIFIED

Deep Learning Lecture 3.1 - Greetings

Welcome to the Deep Learning Lecture on Multilayer Perceptrons

From playlist Deep Learning Lecture

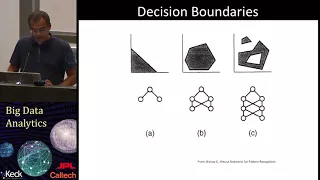

Neural Networks - A biologically inspired model. The efficient backpropagation learning algorithm. Hidden layers. Lecture 10 of 18 of Caltech's Machine Learning Course - CS 156 by Professor Yaser Abu-Mostafa. View course materials in iTunes U Course App - https://itunes.apple.com/us/course

From playlist Machine Learning Course - CS 156
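Here is a minimal backpropagation sketch. It assumes a 2-2-1 network with sigmoid hidden units, a linear output unit and squared-error loss. These choices were made for brevity and are not taken from the lecture. The error signal is computed at the output and pushed back through the weights and the sigmoid derivative. A central finite-difference check then confirms the analytic gradient. The helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Hidden layer of sigmoid units, then a single linear output unit."""
    W1, b1, w2, b2 = params
    z1 = [sum(W1[j][i] * x[i] for i in range(len(x))) + b1[j] for j in range(len(b1))]
    a1 = [sigmoid(z) for z in z1]
    y_hat = sum(w2[j] * a1[j] for j in range(len(a1))) + b2
    return a1, y_hat


def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(params, x, y):
    """Gradients of the squared-error loss via the chain rule, output layer first."""
    W1, b1, w2, b2 = params
    a1, y_hat = forward(params, x)
    delta_out = y_hat - y  # dL/dy_hat for a linear output unit
    grad_w2 = [delta_out * a for a in a1]
    grad_b2 = delta_out
    # Send the error back through w2 and the sigmoid: sigma'(z) = a * (1 - a)
    delta_hid = [delta_out * w2[j] * a1[j] * (1.0 - a1[j]) for j in range(len(a1))]
    grad_W1 = [[delta_hid[j] * x[i] for i in range(len(x))] for j in range(len(a1))]
    grad_b1 = delta_hid
    return grad_W1, grad_b1, grad_w2, grad_b2


def numeric_grad_W1(params, x, y, j, i, eps=1e-6):
    """Central finite difference for a single first-layer weight."""
    W1, b1, w2, b2 = params

    def with_weight(value):
        W = [row[:] for row in W1]
        W[j][i] = value
        return (W, b1, w2, b2)

    w = W1[j][i]
    return (loss(with_weight(w + eps), x, y) - loss(with_weight(w - eps), x, y)) / (2 * eps)


if __name__ == "__main__":
    # Hypothetical parameters and a single training example.
    params = ([[0.1, -0.4], [0.7, 0.2]], [0.0, 0.1], [0.5, -0.3], 0.05)
    x, y = [1.0, 0.5], 1.0
    grad_W1, _, _, _ = backprop(params, x, y)
    for j in range(2):
        for i in range(2):
            print(j, i, grad_W1[j][i], numeric_grad_W1(params, x, y, j, i))
```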

10.4: Neural Networks: Multilayer Perceptron Part 1 - The Nature of Code

In this video, I move beyond the Simple Perceptron and discuss what happens when you build multiple layers of interconnected perceptrons ("fully-connected network") for machine learning. Next video: https://youtu.be/IlmNhFxre0w This video is part of Chapter 10 of The Nature of Code (http

From playlist Session 4 - Neural Networks - Intelligence and Learning

What is a Neural Network - Ep. 2 (Deep Learning SIMPLIFIED)

With plenty of machine learning tools currently available, why would you ever choose an artificial neural network over all the rest? This clip and the next could open your eyes to their awesome capabilities! You'll get a closer look at neural nets without any of the math or code - just wha

From playlist Deep Learning SIMPLIFIED

CS224W: Machine Learning with Graphs | 2021 | Lecture 6.2 - Basics of Deep Learning

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3Cim9k7 Jure Leskovec, Computer Science, PhD. In this lecture, we give a review of deep learning concepts and techniques that are essential for understanding graph n

From playlist Stanford CS224W: Machine Learning with Graphs

Multilayer Perceptron with TensorFlow - Deep Learning with Tensorflow

Enroll in the course for free at: https://bigdatauniversity.com/courses/deep-learning-tensorflow/ Deep Learning with TensorFlow. Introduction: The majority of data in the world is unlabeled and unstructured. Shallow neural networks cannot easily capture relevant structure in, for instance,

From playlist Deep Learning with Tensorflow

Electronic measurement equipment and multimeters - Part 1

In this video series I show different measurement equipment (multimeters, etc.) and why / how I use them. In later videos I'll explore different features and highlight pros and cons. The multimeters on my list can be purchased here: http://astore.amazon.com/m0711-20?_encoding=UTF8&node=

From playlist Electronic Measurement Equipment