Decision trees | Classification algorithms

Information gain (decision tree)

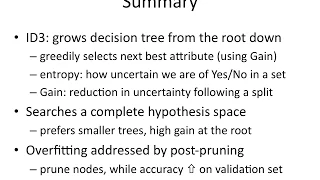

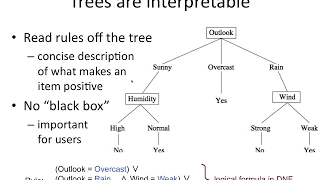

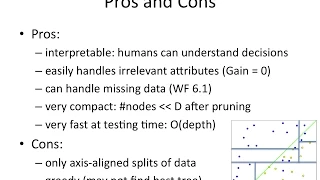

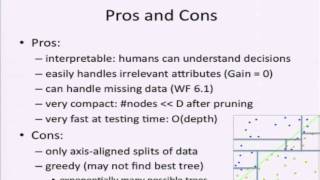

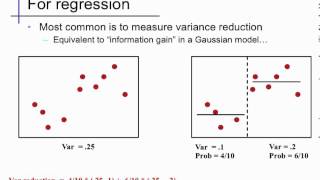

In information theory and machine learning, information gain is a synonym for Kullback–Leibler divergence: the amount of information gained about a random variable or signal from observing another random variable. In the context of decision trees, however, the term is sometimes used synonymously with mutual information, which is the conditional expected value of the Kullback–Leibler divergence of the univariate probability distribution of one variable from the conditional distribution of this variable given the other one. The information gain of a random variable X obtained from an observation of a random variable A taking the value a is defined as the Kullback–Leibler divergence of the prior distribution for X from the posterior distribution for X given a. The expected value of the information gain is the mutual information of X and A, i.e. the reduction in the entropy of X achieved by learning the state of the random variable A. In machine learning, this concept can be used to define a preferred sequence of attributes to investigate in order to narrow down the state of X most rapidly. Such a sequence (which depends at each stage on the outcome of the investigation of previous attributes) is called a decision tree, and it is applied in the area of machine learning known as decision tree learning. Usually an attribute with high mutual information should be preferred to other attributes. (Wikipedia).
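
To make the decision-tree sense of the term concrete, here is a minimal Python sketch that computes information gain as the reduction in entropy, H(X) - H(X | A), and picks the attribute with the highest value. The function names (`entropy`, `information_gain`), the attribute names, and the toy data are all hypothetical, invented for illustration; they do not come from the text above or from any particular library.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Reduction in entropy of `target` from learning `attribute`: H(X) - H(X | A)."""
    labels = [row[target] for row in rows]
    # Group the target labels by the value the attribute takes.
    groups = {}
    for row in rows:
        groups.setdefault(row[attribute], []).append(row[target])
    # Conditional entropy H(X | A): each group's entropy weighted by its share of rows.
    conditional = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


# Hypothetical toy data set (assumption, for illustration only).
rows = [
    {"outlook": "sunny", "windy": "no", "play": "no"},
    {"outlook": "sunny", "windy": "yes", "play": "no"},
    {"outlook": "rainy", "windy": "no", "play": "yes"},
    {"outlook": "rainy", "windy": "yes", "play": "yes"},
]

# Score each candidate attribute and prefer the one with the highest gain.
gains = {a: information_gain(rows, a, "play") for a in ("outlook", "windy")}
best = max(gains, key=gains.get)
print(gains, best)
```

In this toy data, `outlook` determines `play` completely while `windy` is independent of it, so a tree learner following the rule above would split on `outlook` first. Real decision tree learners repeat this selection recursively on each resulting subset.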