(ML 13.6) Graphical model for Bayesian linear regression

As an example, we write down the graphical model for Bayesian linear regression. We introduce the "plate notation", and the convention of shading random variables which are being conditioned on.

From playlist Machine Learning

The mother of all representer theorems for inverse problems & machine learning - Michael Unser

This workshop, organised under the auspices of the Isaac Newton Institute on “Approximation, sampling and compression in data science”, brings together leading researchers in the general fields of mathematics, statistics, computer science and engineering. About the event The workshop ai

From playlist Mathematics of data: Structured representations for sensing, approximation and learning

(ML 7.1) Bayesian inference - A simple example

Illustration of the main idea of Bayesian inference, in the simple case of a univariate Gaussian with a Gaussian prior on the mean (and known variances).

From playlist Machine Learning

Machine learning describes computer systems that are able to automatically perform tasks based on data. A machine learning system takes data as input and produces an approach or solution to a task as output, without the need for human intervention. Machine learning is closely tied to th

From playlist Data Science Dictionary

09b Machine Learning: Linear Regression

Lecture on linear regression as an introduction to machine learning prediction. Includes derivation, prediction and confidence intervals, testing model parameters etc. We start simple and build from these concepts to much more complicated machines! Follow along with the demonstration work

From playlist Machine Learning

(ML 13.7) Graphical model for Bayesian Naive Bayes

As an example, we write down the graphical model for Bayesian naïve Bayes.

From playlist Machine Learning

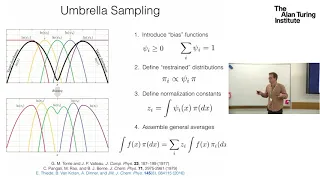

Jonathan Weare (DDMCS@Turing): Stratification for Markov Chain Monte Carlo

Complex models in all areas of science and engineering, and in the social sciences, must be reduced to a relatively small number of variables for practical computation and accurate prediction. In general, it is difficult to identify and parameterize the crucial features that must be incorp

From playlist Data driven modelling of complex systems

Definition of conjugate priors, and a couple of examples. For more detailed examples, see the videos on the Beta-Bernoulli model, the Dirichlet-Categorical model, and the posterior distribution of a univariate Gaussian.

From playlist Machine Learning

Introduction to Classification Models

Ever wonder what classification models do? In this quick introduction, we talk about what classification models are, as well as what they are used for in machine learning. In machine learning there are many different types of models, all with different types of outcomes. When it comes t

From playlist Introduction to Machine Learning

COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology (IRS, NASA)

The origin of the IBM System/360 mainframe computer family, IBM’s most successful computer product line, and one of the most influential computer system architectures of the twentieth century. IBM invested $5 billion in resources to develop the new architecture and multiple system models.

From playlist Computer History: Early IBM computers 1944 to 1970's

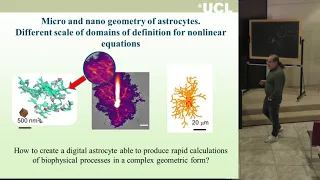

Multiscale modeling and simulations to bridge molecular... - 5 October 2018

http://www.crm.sns.it/event/422/ Multiscale modeling and simulations to bridge molecular and cellular scales Predicting cellular behavior from molecular level remains a key issue in systems and computational biology due to the large complexity encountered in biological systems: large num

From playlist Centro di Ricerca Matematica Ennio De Giorgi

DDPS | Learning hierarchies of reduced-dimension and context-aware models for Monte Carlo sampling

In this DDPS Seminar Series talk from Sept. 2, 2021, University of Texas at Austin postdoctoral fellow Ionut-Gabriel Farcas discusses hierarchies of reduced-dimension and context-aware low-fidelity models for multi-fidelity Monte Carlo sampling. Description: In traditional model reduction

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

Selecting the BEST Regression Model (Part D)

Regression Analysis by Dr. Soumen Maity, Department of Mathematics, IIT Kharagpur. For more details on NPTEL visit http://nptel.ac.in

From playlist IIT Kharagpur: Regression Analysis | CosmoLearning.org Mathematics

DeepMind x UCL | Deep Learning Lectures | 11/12 | Modern Latent Variable Models

This lecture, by DeepMind Research Scientist Andriy Mnih, explores latent variable models, a powerful and flexible framework for generative modelling. After introducing this framework along with the concept of inference, which is central to it, Andriy focuses on two types of modern latent

From playlist Learning resources

Stanford CS224N NLP with Deep Learning | Spring 2022 | Guest Lecture: Scaling Language Models

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/3w46jar To learn more about this course visit: https://online.stanford.edu/courses/cs224n-natural-language-processing-deep-learning To follow along with the course

From playlist Stanford CS224N: Natural Language Processing with Deep Learning | Winter 2021

CS25 I Stanford Seminar - Mixture of Experts (MoE) paradigm and the Switch Transformer

In deep learning, models typically reuse the same parameters for all inputs. Mixture of Experts (MoE) defies this and instead selects different parameters for each incoming example. The result is a sparsely-activated model -- with outrageous numbers of parameters -- but a constant computat

From playlist Stanford Seminars

Stanford CS105: Introduction to Computers | 2021 | Lecture 26.1 - Cloud Computing

Patrick Young Computer Science, PhD This course is a survey of Internet technology and the basics of computer hardware. You will learn what computers are and how they work and gain practical experience in the development of websites and an introduction to programming. To follow along wi

From playlist Stanford CS105 - Introduction to Computers Full Course

Ruslan Salakhutdinov: "Learning Hierarchical Generative Models, Pt. 1"

Graduate Summer School 2012: Deep Learning, Feature Learning "Learning Hierarchical Generative Models, Pt. 1" Ruslan Salakhutdinov, University of Toronto Institute for Pure and Applied Mathematics, UCLA July 23, 2012 For more information: https://www.ipam.ucla.edu/programs/summer-school

From playlist GSS2012: Deep Learning, Feature Learning

Stanford Seminar - Distributed Perception and Learning Between Robots and the Cloud

Sandeep Chinchali Stanford University January 10, 2020 Today’s robotic fleets are increasingly facing two coupled challenges. First, they are measuring growing volumes of high-bitrate video and LIDAR sensory streams, which, second, requires them to use increasingly compute-intensive model

From playlist Stanford AA289 - Robotics and Autonomous Systems Seminar

Natural Models of Type Theory - Steve Awodey

Steve Awodey Carnegie Mellon University; Member, School of Mathematics March 28, 2013 For more videos, visit http://video.ias.edu

From playlist Mathematics