Prob & Stats - Markov Chains (20 of 38) Absorbing Markov Chains - Definition 2

Visit http://ilectureonline.com for more math and science lectures! In this video I will define the absorbing Markov in a nxn matrix and 3x3 matrix. Next video in the Markov Chains series: http://youtu.be/cZKAVOEWcrg

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

(ML 14.3) Markov chains (discrete-time) (part 2)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and a oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

Markov Chains - Part 7 - Absorbing Markov Chains and Absorbing States

Thanks to all of you who support me on Patreon. You da real mvps! $1 per month helps!! :) https://www.patreon.com/patrickjmt !! Markov Chains - Part 7 - Absorbing Markov Chains and Absorbing States. In this video, I introduce the idea of an absorbing state and an absorbing Markov ch

From playlist All Videos - Part 1

Matrix Limits and Markov Chains

In this video I present a cool application of linear algebra in which I use diagonalization to calculate the eventual outcome of a mixing problem. This process is a simple example of what's called a Markov chain. Note: I just got a new tripod and am still experimenting with it; sorry if t

From playlist Eigenvalues

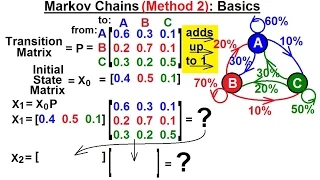

Prob & Stats - Markov Chains: Method 2 (30 of 38) Basics***

Visit http://ilectureonline.com for more math and science lectures! In this video I will demonstrate the basics of method 2 of solving Markov chain problems. Next video in the Markov Chains series:

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

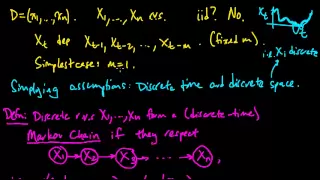

(ML 14.2) Markov chains (discrete-time) (part 1)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and a oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

Prob & Stats - Markov Chains (5 of 38) What Happens if the Markov Chain Continues?

Visit http://ilectureonline.com for more math and science lectures! In this video I will show what happens when the Markov chain is allowed to continued to the nth state. Next video in the Markov Chains series: http://youtu.be/xgvgN4fUqcs

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

Prob & Stats - Markov Chains (23 of 38) Absorbing and Non-Absorbing Markov Chain

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain the difference between an absorbing and non-absorbing Markov chain. Next video in the Markov Chains series: http://youtu.be/UuZU3LUBalQ

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

Research talks by Nisheeth Vishno

Second Bangalore School on Population Genetics and Evolution URL: http://www.icts.res.in/program/popgen2016 DESCRIPTION: Just as evolution is central to our understanding of biology, population genetics theory provides the basic framework to comprehend evolutionary processes. Population

From playlist Second Bangalore School on Population Genetics and Evolution

Regularized Functional Inequalities and Applications to Markov Chains by Pierre Youssef

PROGRAM: TOPICS IN HIGH DIMENSIONAL PROBABILITY ORGANIZERS: Anirban Basak (ICTS-TIFR, India) and Riddhipratim Basu (ICTS-TIFR, India) DATE & TIME: 02 January 2023 to 13 January 2023 VENUE: Ramanujan Lecture Hall This program will focus on several interconnected themes in modern probab

From playlist TOPICS IN HIGH DIMENSIONAL PROBABILITY

Dana Randall: Sampling algorithms and phase transitions

Markov chain Monte Carlo methods have become ubiquitous across science and engineering to model dynamics and explore large combinatorial sets. Over the last 20 years there have been tremendous advances in the design and analysis of efficient sampling algorithms for this purpose. One of the

From playlist Probability and Statistics

Localization schemes: A framework for proving mixing bounds for Markov chains - Ronen Eldan

Computer Science/Discrete Mathematics Seminar II Topic: Localization schemes: A framework for proving mixing bounds for Markov chains Speaker: Ronen Eldan Affiliation: von Neumann Fellow, School of Mathematics Date: March 15, 2022 Two recent and seemingly-unrelated techniques for proving

From playlist Mathematics

AQC 2016 - Simulated Quantum Annealing Can Be Exponentially Faster Than Classical

A Google TechTalk, June 27, 2016, presented by Elizabeth Crosson (Caltech) ABSTRACT: Simulated Quantum Annealing Can Be Exponentially Faster Than Classical Simulated Annealing: Cost functions with thin, high energy barriers can exhibit exponential separations between the run-time of class

From playlist Adiabatic Quantum Computing Conference 2016

Statistical Rethinking - Lecture 11

Lecture 11 - Markov chain Monte Carlo - Statistical Rethinking: A Bayesian Course with R Examples

From playlist Statistical Rethinking Winter 2015

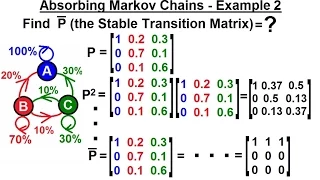

Prob & Stats - Markov Chains (22 of 38) Absorbing Markov Chains - Example 2

Visit http://ilectureonline.com for more math and science lectures! In this video I will find the stable transition matrix in an absorbing Markov chain. Next video in the Markov Chains series: http://youtu.be/hMceS_HIcKY

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

Feng Liang (Cornell) -- Exact sampling and fast mixing of Activated Random Walk

Activated Random Walk (ARW) is an interacting particle system on the d-dimensional lattice Z^d. On a finite subset V⊂Z^d it defines a Markov chain on {0,1}V. We prove that when V is a Euclidean ball intersected with Z^d, the mixing time of the ARW Markov chain is at most 1+o(1) times the v

From playlist Northeastern Probability Seminar 2021

Perla Sousi: Random walks on dynamical percolation

Abstract: We study the behaviour of random walk on dynamical percolation. In this model, the edges of a graph are either open or closed and refresh their status at rate μ, while at the same time a random walker moves on G at rate 1, but only along edges which are open. On the d-dimensional

From playlist Probability and Statistics

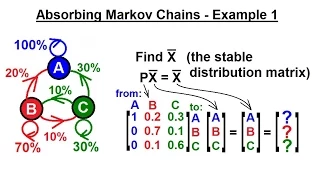

Prob & Stats - Markov Chains (21 of 38) Absorbing Markov Chains - Example 1

Visit http://ilectureonline.com for more math and science lectures! In this video I will find the stable distribution matrix in an absorbing Markov chain. Next video in the Markov Chains series: http://youtu.be/1bErNmzD8Sw

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

Persi Diaconis - From Shuffling Cards to Walking Around the Building [ICM 1998]

ICM Berlin Videos 27.08.1998 From Shuffling Cards to Walking Around the Building Persi Diaconis Mathematics and ORIE, Cornell University, Ithaca, USA: Statistics, Probability, Algebraic Combinatorics Thu 27-Aug-98 · 14:00-15:00 h Abastract: https://www.mathunion.org/fileadmin/IMU/Video

From playlist Number Theory