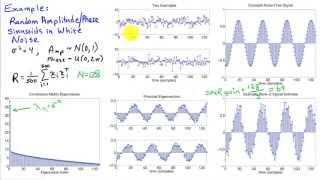

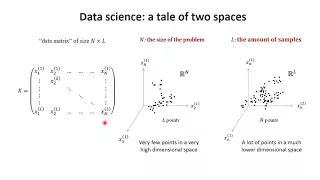

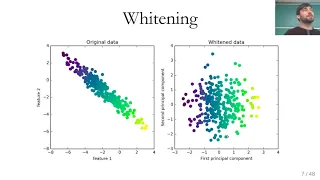

Machine learning algorithms | Dimension reduction | Signal processing

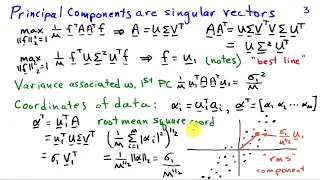

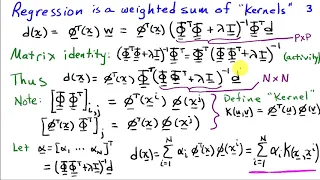

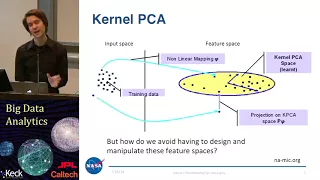

Kernel principal component analysis

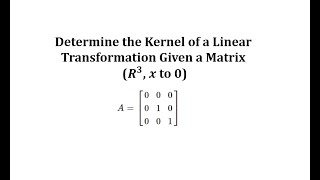

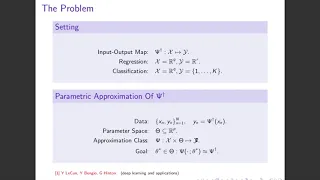

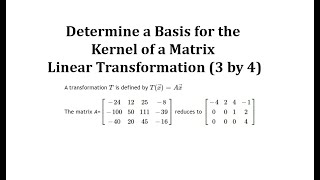

In the field of multivariate statistics, kernel principal component analysis (kernel PCA) is an extension of principal component analysis (PCA) using techniques of kernel methods. Using a kernel, the originally linear operations of PCA are performed in a reproducing kernel Hilbert space (Wikipedia).
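
To make the idea concrete, here is a minimal NumPy sketch of kernel PCA. It is an illustration under stated assumptions, not a reference implementation: the Gaussian (RBF) kernel, the `gamma` parameter, and the function names `rbf_kernel` and `kernel_pca` are choices made for this example and do not come from the text above. The sketch builds the kernel matrix, centers it in feature space, and uses its leading eigenvectors to project the training points.

```python
import numpy as np


def rbf_kernel(X, gamma):
    """Gaussian (RBF) kernel matrix: K[i, j] = exp(-gamma * ||x_i - x_j||^2)."""
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (X @ X.T)
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def kernel_pca(X, n_components, gamma=1.0):
    """Project the rows of X onto the leading kernel principal components.

    Returns (Z, eigvals): Z has shape (n_samples, n_components), and eigvals
    holds the matching eigenvalues of the centered kernel matrix.
    """
    n = X.shape[0]
    K = rbf_kernel(X, gamma)

    # Center the data implicitly in feature space:
    # K_c = K - 1_n K - K 1_n + 1_n K 1_n, where 1_n is the n x n matrix of 1/n.
    one_n = np.full((n, n), 1.0 / n)
    K_centered = K - one_n @ K - K @ one_n + one_n @ K @ one_n

    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(K_centered)
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # The projection of training point i onto component k is sqrt(lambda_k) * v_k[i].
    Z = eigvecs * np.sqrt(np.maximum(eigvals, 0.0))
    return Z, eigvals


# Usage on synthetic data (the data here is random and purely illustrative).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
Z, eigvals = kernel_pca(X, n_components=2, gamma=0.5)
print(Z.shape)
```

Only the kernel matrix is ever formed; the feature-space coordinates are never computed explicitly, which is what allows the linear steps of PCA to be carried out in the reproducing kernel Hilbert space. Projecting new, unseen points would additionally require centering their kernel values against the training set, which this sketch omits.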