Graph neural network

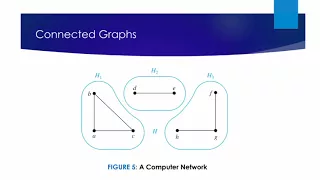

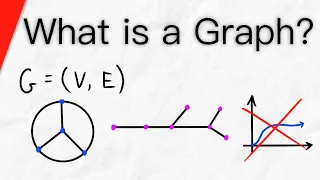

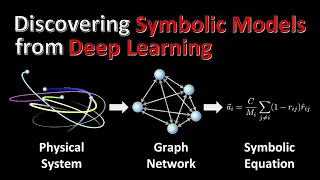

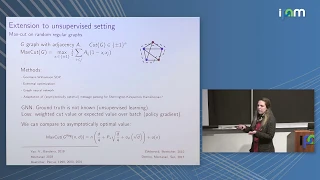

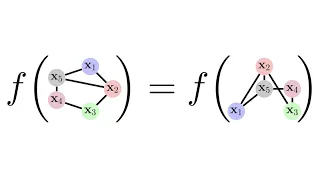

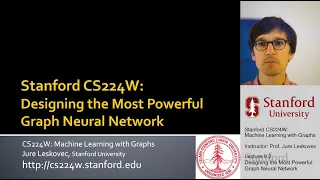

A graph neural network (GNN) is a class of artificial neural networks for processing data that can be represented as graphs. In the more general subject of "geometric deep learning", certain existing neural network architectures can be interpreted as GNNs operating on suitably defined graphs. Convolutional neural networks, in the context of computer vision, can be seen as GNNs applied to graphs structured as grids of pixels. Transformers, in the context of natural language processing, can be seen as GNNs applied to complete graphs whose nodes are words in a sentence. The key design element of GNNs is pairwise message passing: graph nodes iteratively update their representations by exchanging information with their neighbors. Since their inception, several GNN architectures have been proposed, each implementing a different flavor of message passing. As of 2022, it is an open research question whether GNN architectures can be defined that "go beyond" message passing, or whether every GNN can be built on message passing over suitably defined graphs. Relevant application domains for GNNs include social networks, citation networks, molecular biology, chemistry, physics, and NP-hard combinatorial optimization problems. Several open-source libraries implementing graph neural networks are available, such as PyTorch Geometric (PyTorch), TensorFlow GNN (TensorFlow), and jraph (Google JAX). (Wikipedia).
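The message-passing idea described above can be illustrated with a minimal sketch in plain Python. This is not the API of any of the libraries mentioned; the function name `message_passing_step`, the mean aggregation, and the fixed 50/50 update rule are assumptions chosen for illustration. Practical GNN layers typically replace the fixed update with learned weights and a nonlinearity.

```python
from typing import Dict, List

Vector = List[float]


def message_passing_step(
    features: Dict[int, Vector],
    neighbors: Dict[int, List[int]],
) -> Dict[int, Vector]:
    """One round of message passing (hypothetical helper, illustrative only).

    Each node averages its neighbors' vectors (aggregate), then mixes the
    result equally with its own vector (update). No parameters are learned.
    """
    updated: Dict[int, Vector] = {}
    for node, own in features.items():
        incoming = [features[n] for n in neighbors.get(node, [])]
        if incoming:
            # Aggregate: element-wise mean of the neighbors' vectors.
            aggregated = [sum(vals) / len(incoming) for vals in zip(*incoming)]
        else:
            # Isolated node: receives an all-zero message.
            aggregated = [0.0] * len(own)
        # Update: fixed equal mix of the node's own state and the message.
        updated[node] = [0.5 * a + 0.5 * b for a, b in zip(own, aggregated)]
    return updated


# Path graph 0 - 1 - 2 with one-dimensional node features.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
features = {0: [1.0], 1: [0.0], 2: [0.0]}

one_round = message_passing_step(features, neighbors)
two_rounds = message_passing_step(one_round, neighbors)

print(one_round)   # {0: [0.5], 1: [0.25], 2: [0.0]}
print(two_rounds)  # {0: [0.375], 1: [0.25], 2: [0.125]}
```

After one round, node 2 is still unaffected by node 0, because they are not direct neighbors. After two rounds, node 0's signal has reached node 2 through node 1, which shows how repeated rounds let information travel further across the graph.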