Relevance model 1: Bernoulli sets vs. multinomial urns

[http://bit.ly/RModel] A relevance model is the language model of the relevant class. In this video we look at the difference between the multinomial model (the one used in relevance models) and the multiple-Bernoulli model, which forms the basis for the classical probabilistic models.

From playlist IR18 Relevance Model
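
As a rough illustration of the distinction described above, the sketch below scores the same text under both views. It is a minimal example, not the video's own code: the function names and the toy probabilities are hypothetical, every word is assumed to have a non-zero (smoothed) probability, and the multinomial coefficient is omitted because it does not depend on the model parameters.

```python
import math


def multinomial_log_likelihood(tokens, word_probs):
    """Urn view: each token is an independent draw, so repeated words count.

    word_probs is a hypothetical dict mapping word -> P(word); values sum to 1.
    """
    return sum(math.log(word_probs[t]) for t in tokens)


def bernoulli_log_likelihood(tokens, presence_probs):
    """Set view: each vocabulary word is either present or absent.

    presence_probs is a hypothetical dict mapping word -> P(word is present);
    values need not sum to 1, and word frequency is ignored.
    """
    present = set(tokens)
    total = 0.0
    for word, p in presence_probs.items():
        total += math.log(p) if word in present else math.log(1.0 - p)
    return total


# Toy parameters, chosen only for illustration.
urn = {"a": 0.5, "b": 0.3, "c": 0.2}
presence = {"a": 0.9, "b": 0.5, "c": 0.1}

once = ["a", "b"]
twice = ["a", "a", "b"]

# Repeating "a" changes the multinomial score but not the Bernoulli score.
print(multinomial_log_likelihood(once, urn), multinomial_log_likelihood(twice, urn))
print(bernoulli_log_likelihood(once, presence), bernoulli_log_likelihood(twice, presence))
```

The multiple-Bernoulli score also pays a cost for every vocabulary word that is absent, which the multinomial score never does.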

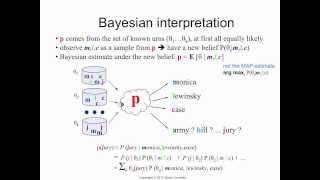

Relevance model 4: Bayesian interpretation

[http://bit.ly/RModel] Another way to interpret the relevance model is via Bayesian estimation: the relevance model could be one of a large set of urns. We know what the urns are, but don't know which one is correct, so we compute the posterior probability for each candidate urn, and combi

From playlist IR18 Relevance Model
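
The posterior step described above can be sketched in a few lines. The description is cut off before it says how the candidates are combined, so the final step here (a posterior-weighted average of the urns) is an assumption, as are the function names, the uniform prior, and the toy urns.

```python
def posterior_over_urns(query, urns, priors=None):
    """Return P(urn | query) for each candidate urn via Bayes' rule.

    urns is a list of dicts mapping word -> P(word | urn).
    priors defaults to uniform (an assumption of this sketch).
    """
    n = len(urns)
    if priors is None:
        priors = [1.0 / n] * n
    joint = []
    for urn, prior in zip(urns, priors):
        likelihood = prior
        for word in query:
            # Query words are treated as independent draws from the urn.
            likelihood *= urn.get(word, 0.0)
        joint.append(likelihood)
    evidence = sum(joint)
    if evidence == 0.0:
        raise ValueError("no candidate urn can generate the query")
    return [j / evidence for j in joint]


def mix_urns(urns, weights):
    """Combine candidate urns into one distribution, weighted by posterior."""
    mixed = {}
    for urn, weight in zip(urns, weights):
        for word, p in urn.items():
            mixed[word] = mixed.get(word, 0.0) + weight * p
    return mixed


# Two toy candidate urns, for illustration only.
candidates = [{"a": 0.5, "b": 0.5}, {"a": 0.1, "b": 0.9}]
weights = posterior_over_urns(["a"], candidates)
estimate = mix_urns(candidates, weights)
print(weights, estimate)
```

Because each candidate urn sums to one and the weights sum to one, the combined estimate is itself a valid distribution.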

Relevance model 5: summary of assumptions

[http://bit.ly/RModel] The relevance model ranking is based on the probability ranking principle (PRP). It uses the background (corpus) model as a language model for the non-relevant class (just like the classical model), but has a novel estimate for the relevance model. The estimate is ba

From playlist IR18 Relevance Model

A short refresher on vectors. Before I introduce vector-based functions, it's important to look at vectors themselves and how they are represented in Python™ and the IPython Notebook using SymPy.

From playlist Life Science Math: Vectors

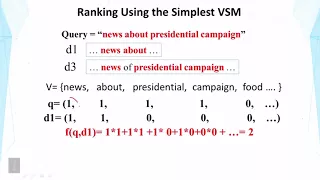

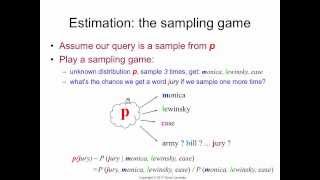

Relevance model 2: the sampling game

[http://bit.ly/RModel] How can we estimate the language model of the relevant class if we have no examples of relevant documents? We play a sampling game as follows. The relevance model is an urn with unknown parameters. We draw several samples from it and observe the query. What is the pr

From playlist IR18 Relevance Model

PRF 10: relevance feedback (Rocchio)

Relevance feedback is a powerful mechanism for dealing with the problem of linguistic ambiguity. We overview the Rocchio algorithm for relevance feedback -- a simple algorithm that can be very effective with the right parameter settings.

From playlist Relevance Feedback
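
For readers who want to see the update rule, here is a minimal sketch of the standard Rocchio formula, which moves the query vector toward the centroid of relevant documents and away from the centroid of non-relevant ones. The function name, the plain-list vector representation, and the default weights (alpha=1.0, beta=0.75, gamma=0.15, values commonly quoted in textbooks) are assumptions of this example, not settings taken from the video. Clipping negative weights to zero is a common practical choice, not part of the core formula.

```python
def rocchio(query, relevant, non_relevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Return alpha*q + beta*centroid(relevant) - gamma*centroid(non_relevant).

    All vectors are equal-length lists of term weights.
    """
    dims = len(query)

    def centroid(docs):
        # An empty feedback set contributes a zero vector.
        if not docs:
            return [0.0] * dims
        return [sum(doc[i] for doc in docs) / len(docs) for i in range(dims)]

    rel_centroid = centroid(relevant)
    non_centroid = centroid(non_relevant)
    return [
        max(0.0, alpha * q + beta * r - gamma * n)  # clip negative weights
        for q, r, n in zip(query, rel_centroid, non_centroid)
    ]


# Toy three-term vocabulary, for illustration only.
new_query = rocchio(
    query=[1.0, 0.0, 0.0],
    relevant=[[1.0, 1.0, 0.0], [1.0, 1.0, 0.0]],
    non_relevant=[[0.0, 0.0, 1.0]],
)
print(new_query)
```

The second term gains weight because it appears in the relevant documents, which is how feedback can pull in vocabulary the original query lacked.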

15.5: Lagrange Multipliers Example - Valuable Vector Calculus

Explanation of Lagrange multipliers: https://youtu.be/bmTiH4s_mYs An example of the actual problem-solving techniques to find maximum and minimum values of a function with a constraint using Lagrange multipliers. Full Valuable Vector Calculus playlist: https://www.youtube.com/playlist?li

From playlist Valuable Vector Calculus

Linear Algebra for Computer Scientists. 1. Introducing Vectors

This computer science video is one of a series on linear algebra for computer scientists. This video introduces the concept of a vector. A vector is essentially a list of numbers that can be represented with an array or a function. Vectors are used for data analysis in a wide range of f

From playlist Linear Algebra for Computer Scientists

Searching Freely: Using GPL for Semantic Search ft. Nils Reimers

We’re excited to host Nils Reimers, creator of SBERT and researcher at HuggingFace, to talk about the latest advances in semantic search. This time, he’ll be demonstrating the power of training sentence transformer models using Generative Pseudo-Labeling (GPL), and how this can enable sema

From playlist Talks

How NLP-Driven Literature Search Engine Helps Extracting COVID Information For Medical Innovation

Get your Free Spark NLP and Spark OCR Free Trial: https://www.johnsnowlabs.com/spark-nlp-try-free/ Register for NLP Summit 2021: https://www.nlpsummit.org/2021-events/ Watch all Healthcare NLP Summit 2021 sessions: https://www.nlpsummit.org/ With the growing risk of fast pace spread

From playlist Healthcare NLP Summit 2021

A Web-scale system for scientific knowledge exploration | AISC

Discussion Lead: Ramya Balasubramaniam Facilitators: Ehsan Amjadian, Karim Khayrat For more details including paper and slides, visit https://aisc.a-i.science/events/2019-05-02/

From playlist Machine Learning for Scientific Discovery

Design of Recommendation Systems

Recommender systems have a wide range of applications in the industry with movie, music, and product recommendations across top tech companies like Netflix, Spotify, Amazon, etc. Consumers on the web are increasingly relying on recommendations to purchase the next product on Amazon or watc

From playlist Advanced Machine Learning

Kaggle Livecoding: Data cleaning!🧹 | Kaggle

This week it's all about data cleaning. We'll take a raw survey dataset and get it ready to be used for classification. About Kaggle: Kaggle is the world's largest community of data scientists. Join us to compete, collaborate, learn, and do your data science work. Kaggle's platform

From playlist Kaggle Live Coding | Kaggle

Introduction to Neural Re-Ranking

In this lecture we look at the workflow (including training and evaluation) of neural re-ranking models and some basic neural re-ranking architectures. Slides & transcripts are available at: https://github.com/sebastian-hofstaetter/teaching 📖 Check out YouTube's CC - we added our high qua

From playlist Advanced Information Retrieval 2021 - TU Wien

From playlist IR20 Machine Learning for IR

Stanford CS224N NLP with Deep Learning | Winter 2021 | Lecture 11 - Question Answering

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/2ZytY6G To learn more about this course visit: https://online.stanford.edu/courses/cs224n-natural-language-processing-deep-learning To follow along with the course

From playlist Stanford CS224N: Natural Language Processing with Deep Learning | Winter 2021

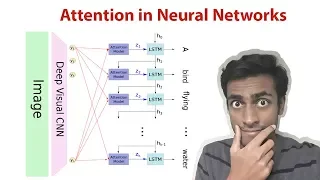

In this video, we discuss attention in neural networks. We go through soft and hard attention and discuss the architecture with examples. SUBSCRIBE to the channel for more awesome content! My video on Generative Adversarial Networks: https://www.youtube.com/watch?v=O8LAi6ksC80 My video on C

From playlist Deep Learning Research Papers

Scalar and vector fields | Lecture 11 | Vector Calculus for Engineers

Definition of a scalar and vector field. How to visualize a two-dimensional vector field. Join me on Coursera: https://www.coursera.org/learn/vector-calculus-engineers Lecture notes at http://www.math.ust.hk/~machas/vector-calculus-for-engineers.pdf Subscribe to my channel: http://www

From playlist Vector Calculus for Engineers