Solving Systems of Equations Using the Optimization Penalty Method

In this video we show how to solve a system of equations using numerical optimization instead of solving it analytically. We show that this can be applied to either fully constrained or overconstrained problems. In addition, this can be used to solve a system of equations that include both

From playlist Optimization
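A minimal Python sketch of the idea, assuming the penalty is the sum of squared residuals and that plain gradient descent with a central-difference gradient is an acceptable minimizer (the video's own choice of optimizer is not stated here). The example system is hypothetical: three linear equations in two unknowns, so it is overconstrained but consistent, with solution x = 2, y = 1.

```python
def residuals(v):
    # Hypothetical overconstrained system: x + y = 3, x - y = 1, 2x + y = 5
    x, y = v
    return [x + y - 3.0, x - y - 1.0, 2.0 * x + y - 5.0]

def penalty(v):
    # Sum of squared residuals; zero exactly when every equation holds
    return sum(r * r for r in residuals(v))

def numerical_grad(f, v, h=1e-6):
    # Central-difference approximation of each partial derivative
    grad = []
    for i in range(len(v)):
        up, down = list(v), list(v)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

def minimize(f, v0, lr=0.05, iters=5000, tol=1e-14):
    # Plain gradient descent; stops once the squared gradient norm is tiny
    v = list(v0)
    for _ in range(iters):
        g = numerical_grad(f, v)
        if sum(gi * gi for gi in g) < tol:
            break
        v = [vi - lr * gi for vi, gi in zip(v, g)]
    return v

solution = minimize(penalty, [0.0, 0.0])
print(solution)  # approximately [2.0, 1.0]
```

For a linear system this is simply least squares; the same loop runs unchanged if `residuals` returns nonlinear expressions, although a nonlinear penalty may then have local minima that are not solutions.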

C34 Expanding this method to higher order linear differential equations

In this video I extend the method of variation of parameters to higher-order (order greater than two) linear ODEs.

From playlist Differential Equations
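For reference, the standard statement of the method for an n-th order equation written with leading coefficient 1, where y_1, ..., y_n form a fundamental set of solutions of the homogeneous equation, W is their Wronskian, and W_k is W with its k-th column replaced by (0, ..., 0, 1)^T. The notation is ours and may differ from the video's.

```latex
y^{(n)} + p_{n-1}(x)\,y^{(n-1)} + \cdots + p_0(x)\,y = g(x)

y_p(x) = \sum_{k=1}^{n} u_k(x)\,y_k(x), \qquad
\begin{cases}
\sum_{k=1}^{n} u_k'(x)\,y_k^{(j)}(x) = 0, & j = 0, \dots, n-2,\\[4pt]
\sum_{k=1}^{n} u_k'(x)\,y_k^{(n-1)}(x) = g(x),
\end{cases}

u_k'(x) = \frac{W_k(x)\,g(x)}{W(x)} \quad \text{(Cramer's rule)}
```

For n = 2 this reduces to the familiar u_1' = -y_2 g / W and u_2' = y_1 g / W.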

Converting Constrained Optimization to Unconstrained Optimization Using the Penalty Method

In this video we show how to convert a constrained optimization problem into an approximately equivalent unconstrained optimization problem using the penalty method. Topics and timestamps: 0:00 – Introduction 3:00 – Equality constrained only problem 12:50 – Reformulate as approximate unco

From playlist Optimization
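A minimal Python sketch of the quadratic penalty idea on a hypothetical toy problem: minimize f(x, y) = (x - 2)^2 + (y - 1)^2 subject to h(x, y) = x + y - 1 = 0, whose exact constrained minimizer is (1, 0). Each penalized problem f + μh^2 is unconstrained and is solved here with plain gradient descent, warm-started from the previous μ; the μ schedule and step size are assumptions, not taken from the video.

```python
def grad_f(x, y):
    # Gradient of the objective f(x, y) = (x - 2)^2 + (y - 1)^2
    return 2 * (x - 2), 2 * (y - 1)

def h(x, y):
    # Equality constraint h(x, y) = x + y - 1, which should equal 0
    return x + y - 1

def grad_penalized(x, y, mu):
    # Gradient of F(x, y) = f(x, y) + mu * h(x, y)^2; dh/dx = dh/dy = 1
    gx, gy = grad_f(x, y)
    c = 2 * mu * h(x, y)
    return gx + c, gy + c

def penalty_method(mus=(1, 10, 100, 1000, 10000), iters=2000):
    x, y = 0.0, 0.0
    history = []
    for mu in mus:
        # Step size 1/L, where L = 2 + 4*mu is the largest Hessian eigenvalue of F
        lr = 1.0 / (2 + 4 * mu)
        for _ in range(iters):
            gx, gy = grad_penalized(x, y, mu)
            x, y = x - lr * gx, y - lr * gy
        history.append((mu, x, y, h(x, y)))  # warm start: next mu begins here
    return history

for mu, x, y, violation in penalty_method():
    print(f"mu={mu:>6}  x={x:.6f}  y={y:.6f}  h={violation:.2e}")
```

For this problem the penalized minimizer works out to y = 1/(1 + 2μ), x = 1 + y, so the constraint violation 2/(1 + 2μ) shrinks only like 1/μ. That is why the unconstrained problem is only approximately equivalent, and why high accuracy needs large μ, which in turn makes the problem increasingly ill-conditioned.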

Bistra Dilkina - Machine Learning for MIP Solving - IPAM at UCLA

Recorded 27 February 2023. Bistra Dilkina of the University of Southern California presents "Machine Learning for MIP Solving" at IPAM's Artificial Intelligence and Discrete Optimization Workshop. Learn more online at: http://www.ipam.ucla.edu/programs/workshops/artificial-intelligence-and

From playlist 2023 Artificial Intelligence and Discrete Optimization

Differential Equations with Forcing: Method of Variation of Parameters

This video solves externally forced linear differential equations with the method of variation of parameters. This approach is extremely powerful. The idea is to solve the unforced, or "homogeneous" system, and then to replace the unknown coefficients c_k with unknown functions of time c

From playlist Engineering Math: Differential Equations and Dynamical Systems
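A compact version of the derivation in matrix-exponential form, assuming the forced system is written as x' = Ax + Bu(t); the video may instead expand the homogeneous solution in eigenvectors, with one coefficient c_k per mode, but the steps are the same.

```latex
\dot{x} = A x + B u(t), \qquad x_h(t) = e^{At} c

x(t) = e^{At} c(t) \;\Rightarrow\; A e^{At} c(t) + e^{At} \dot{c}(t) = A x + B u(t)
\;\Rightarrow\; \dot{c}(t) = e^{-At} B u(t)

c(t) = x(0) + \int_0^t e^{-A\tau} B\, u(\tau)\, d\tau
\;\Rightarrow\;
x(t) = e^{At} x(0) + \int_0^t e^{A(t-\tau)} B\, u(\tau)\, d\tau
```

The last step uses e^{At} e^{-Aτ} = e^{A(t-τ)}, which holds because both exponentials are functions of the same matrix A and therefore commute.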

11_3_1 The Gradient of a Multivariable Function

Using the partial derivatives of a multivariable function to construct its gradient vector.

From playlist Advanced Calculus / Multivariable Calculus
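A small Python check of the definition, using a hypothetical example f(x, y) = x^2 y + sin y: the gradient is the vector of partial derivatives (2xy, x^2 + cos y), and a central-difference approximation should agree with it.

```python
import math

def f(x, y):
    # Hypothetical example function f(x, y) = x^2 * y + sin(y)
    return x ** 2 * y + math.sin(y)

def grad_f(x, y):
    # Gradient from the partial derivatives: (df/dx, df/dy) = (2xy, x^2 + cos y)
    return (2 * x * y, x ** 2 + math.cos(y))

def numerical_gradient(func, point, h=1e-6):
    # Central differences, perturbing one coordinate at a time
    partials = []
    for i in range(len(point)):
        forward, backward = list(point), list(point)
        forward[i] += h
        backward[i] -= h
        partials.append((func(*forward) - func(*backward)) / (2 * h))
    return tuple(partials)

print(grad_f(1.0, 0.0))                   # (0.0, 2.0)
print(numerical_gradient(f, (1.5, 0.5)))  # close to grad_f(1.5, 0.5)
```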

Jonas Witt: Dantzig Wolfe Reformulations for the Stable Set Problem

Dantzig-Wolfe reformulation of an integer program convexifies a subset of the constraints, which yields an extended formulation with a potentially stronger linear programming (LP) relaxation than the original formulation. This paper is part of an endeavor to understand the strength of such

From playlist HIM Lectures: Trimester Program "Combinatorial Optimization"

Lecture 7 | Machine Learning (Stanford)

Lecture by Professor Andrew Ng for Machine Learning (CS 229) in the Stanford Computer Science department. Professor Ng lectures on optimal margin classifiers, KKT conditions, and SVM duals. This cours

From playlist Lecture Collection | Machine Learning
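For orientation, the standard hard-margin SVM dual that this material builds toward, written in CS 229-style notation (m training pairs (x^{(i)}, y^{(i)}) with y^{(i)} in {-1, 1}); the lecture's exact notation and derivation may differ.

```latex
\max_{\alpha}\; W(\alpha) = \sum_{i=1}^{m} \alpha_i
  - \frac{1}{2} \sum_{i=1}^{m} \sum_{j=1}^{m} y^{(i)} y^{(j)} \alpha_i \alpha_j \,\langle x^{(i)}, x^{(j)} \rangle

\text{s.t.}\quad \alpha_i \ge 0 \;\; (i = 1, \dots, m), \qquad \sum_{i=1}^{m} \alpha_i y^{(i)} = 0

w = \sum_{i=1}^{m} \alpha_i y^{(i)} x^{(i)}, \qquad
\alpha_i \left[ y^{(i)} \left( w^\top x^{(i)} + b \right) - 1 \right] = 0 \quad \text{(KKT complementary slackness)}
```

Complementary slackness means only points with α_i > 0, the support vectors, sit exactly on the margin and contribute to w.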

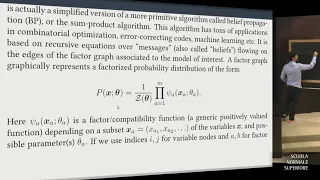

An SDCA-powered inexact dual augmented Lagrangian method(...) - Obozinski - Workshop 3 - CEB T1 2019

Guillaume Obozinski (Swiss Data Science Center) / 02.04.2019 An SDCA-powered inexact dual augmented Lagrangian method for fast CRF learning I'll present an efficient dual augmented Lagrangian formulation to learn conditional random field (CRF) models. The algorithm, which can be interpr

From playlist 2019 - T1 - The Mathematics of Imaging

12_2_1 Taylor Polynomials of Multivariable Functions

Now we extend the construction of Taylor polynomials to multivariable functions.

From playlist Advanced Calculus / Multivariable Calculus
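A minimal Python sketch of the second-order case, P2(x) = f(a) + ∇f(a)·(x - a) + ½ (x - a)^T H(a) (x - a), where H is the Hessian. The example function f(x, y) = e^x cos y about (0, 0) is hypothetical; its derivatives give P2 = 1 + x + (x^2 - y^2)/2, and the error should shrink roughly like the cube of the step.

```python
import math

def taylor2(f0, grad, hess, a, point):
    # Second-order Taylor polynomial about a, evaluated at point:
    # f(a) + grad . d + 0.5 * d^T H d, with d = point - a
    d = [p - q for p, q in zip(point, a)]
    n = len(d)
    linear = sum(grad[i] * d[i] for i in range(n))
    quadratic = sum(hess[i][j] * d[i] * d[j] for i in range(n) for j in range(n))
    return f0 + linear + 0.5 * quadratic

# f(x, y) = e^x cos y at (0, 0): value 1, gradient (1, 0), Hessian [[1, 0], [0, -1]]
f0, grad, hess = 1.0, (1.0, 0.0), [[1.0, 0.0], [0.0, -1.0]]

for h in (0.1, 0.01):
    approx = taylor2(f0, grad, hess, (0.0, 0.0), (h, h))
    exact = math.exp(h) * math.cos(h)
    print(h, approx, exact, abs(approx - exact))
```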

Visualizing Data using t-SNE (algorithm) | AISC Foundational

Toronto Deep Learning Series, 1 November 2018 Paper Review: http://www.jmlr.org/papers/v9/vandermaaten08a.html Speaker: Sabyasachi Dasgupta (University of Toronto) Host: Statflo Date: Nov 1st, 2018 Visualizing Data using t-SNE We present a new technique called "t-SNE" that visualizes

From playlist Math and Foundations

Stanford Seminar - Designing to Empower Marginalized Communities through Social Technology

Alexandra To Northeastern University October 30, 2020 Technology frequently marginalizes people from underrepresented and vulnerable groups; more and more, we're learning how social media platforms, AI systems, machine learning algorithms, video games, etc., can enact, amplify, or perpetu

From playlist Stanford Seminars

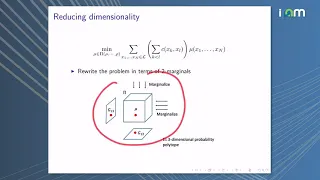

Lexing Ying: "Strictly-correlated Electron Functional and Multimarginal Optimal Transport"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021 Workshop II: Tensor Network States and Applications "Strictly-correlated Electron Functional and Multimarginal Optimal Transport" Lexing Ying - Stanford University, Mathematics Abstract: We introduce methods

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Mathematical and Computational Aspects of Machine Learning - 10 October 2019

http://www.crm.sns.it/event/451/timetable.html#title 9:00- 10:00 Barbier, Jean Mean field theory of high-dimensional Bayesian inference 10:00- 10:30 Coffee break 10:30- 11:30 Peyré, Gabriel Optimal Transport for Data Science 11:30- 12:30 Peyré, Gabriel Optimal Transport for Data Scien

From playlist Centro di Ricerca Matematica Ennio De Giorgi

13_2 Optimization with Constraints

Here we seek the minima or maxima of a function when its variables are subject to constraints. The examples used show that this has practical value.

From playlist Advanced Calculus / Multivariable Calculus
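A worked example of one standard technique, Lagrange multipliers, on a hypothetical problem: maximize f(x, y) = xy subject to g(x, y) = x + y = 10. The video's own examples and method may differ.

```latex
\nabla f = \lambda \nabla g, \qquad g(x, y) = x + y = 10

\begin{aligned}
y &= \lambda \\
x &= \lambda \\
x + y &= 10
\end{aligned}
\;\Longrightarrow\; x = y = \lambda = 5, \qquad f(5, 5) = 25
```

Substituting y = 10 - x gives the same answer: f = 10x - x^2, so f'(x) = 10 - 2x = 0 at x = 5, and f''(x) = -2 < 0 confirms a maximum.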

Seffi Naor: Recent Results on Maximizing Submodular Functions

I will survey recent progress on submodular maximization, both constrained and unconstrained, and for both monotone and non-monotone submodular functions. The lecture was held within the framework of the Hausdorff Trimester Program: Combinatorial Optimization.

From playlist HIM Lectures 2015

Algorithmic Thresholds for Mean-Field Spin Glasses - Mark Sellke

Probability Seminar Topic: Algorithmic Thresholds for Mean-Field Spin Glasses Speaker: Mark Sellke Affiliation: Member, School of Mathematics Date: September 23, 2022 I will explain recent progress on computing approximate ground states of mean-field spin glass Hamiltonians, which are ce

From playlist Mathematics

Bistra Dilkina: "Decision-focused learning: integrating downstream combinatorics in ML"

Deep Learning and Combinatorial Optimization 2021 "Decision-focused learning: integrating downstream combinatorics in ML" Bistra Dilkina - University of Southern California (USC) Abstract: Closely integrating ML and discrete optimization provides key advantages in improving our ability t

From playlist Deep Learning and Combinatorial Optimization 2021

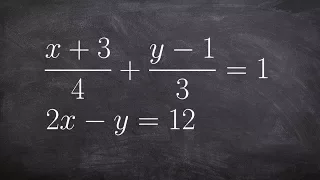

Solve a System of Equations Using Elimination with Fractions

👉Learn how to solve a system (of equations) by elimination. A system of equations is a set of equations which are collectively satisfied by one solution of the variables. The elimination method of solving a system of equations involves making the coefficient of one of the variables to be e

From playlist Solve a System of Equations Using Elimination | Hard
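A minimal Python sketch of elimination on a hypothetical 2×2 system with fractional coefficients. It keeps the arithmetic exact with the standard-library `fractions.Fraction` type rather than first clearing denominators, which is the usual by-hand approach and may be what the video does.

```python
from fractions import Fraction

def solve_by_elimination(eq1, eq2):
    """Solve a*x + b*y = c for two equations given as (a, b, c) tuples."""
    a1, b1, c1 = eq1
    a2, b2, c2 = eq2
    # Scale eq1 by a2 and eq2 by a1 so x has the same coefficient, then subtract to eliminate x
    coeff_y = b1 * a2 - b2 * a1
    rhs = c1 * a2 - c2 * a1
    if coeff_y == 0:
        raise ValueError("system has no unique solution")
    y = rhs / coeff_y
    # Back-substitute into an equation whose x coefficient is nonzero
    if a1 != 0:
        x = (c1 - b1 * y) / a1
    else:
        x = (c2 - b2 * y) / a2
    return x, y

# Hypothetical example: (1/2)x + (1/3)y = 4 and (1/4)x - (2/3)y = -1/2
eq1 = (Fraction(1, 2), Fraction(1, 3), Fraction(4))
eq2 = (Fraction(1, 4), Fraction(-2, 3), Fraction(-1, 2))
print(solve_by_elimination(eq1, eq2))  # (Fraction(6, 1), Fraction(3, 1))
```

Checking by substitution: (1/2)(6) + (1/3)(3) = 3 + 1 = 4 and (1/4)(6) - (2/3)(3) = 3/2 - 2 = -1/2.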