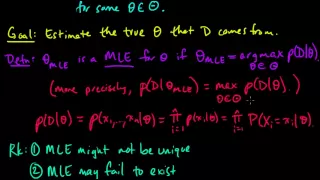

(ML 4.1) Maximum Likelihood Estimation (MLE) (part 1)

Definition of maximum likelihood estimates (MLEs), and a discussion of pros/cons. A playlist of these Machine Learning videos is available here: http://www.youtube.com/my_playlists?p=D0F06AA0D2E8FFBA

From playlist Machine Learning
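
To make the MLE definition concrete, here is a minimal sketch that assumes an i.i.d. normal model. The choice of model and the sample values are hypothetical, and the video may use a different example. It also shows one commonly cited con: the MLE of the variance divides by n and is therefore biased.

```python
import math

def gaussian_mle(xs):
    """Closed-form MLEs (mu_hat, var_hat) for an i.i.d. normal sample (assumed model)."""
    n = len(xs)
    mu_hat = sum(xs) / n
    # The MLE of the variance divides by n, not n - 1, so it is biased downward.
    var_hat = sum((x - mu_hat) ** 2 for x in xs) / n
    return mu_hat, var_hat

def gaussian_log_likelihood(xs, mu, var):
    """Log-likelihood of the sample under N(mu, var)."""
    n = len(xs)
    return -0.5 * n * math.log(2 * math.pi * var) - sum((x - mu) ** 2 for x in xs) / (2 * var)

data = [2.1, 1.9, 2.4, 2.0, 1.6]  # hypothetical observations
mu_hat, var_hat = gaussian_mle(data)
```

Evaluating `gaussian_log_likelihood` at nearby parameter values gives a quick check that `(mu_hat, var_hat)` really is the maximizer.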

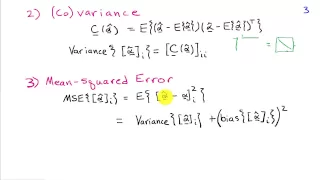

Introduction to Estimation Theory

http://AllSignalProcessing.com for more great signal-processing content: ad-free videos, concept/screenshot files, quizzes, MATLAB and data files. General notion of estimating a parameter and measures of estimation quality including bias, variance, and mean-squared error.

From playlist Estimation and Detection Theory
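
The three quality measures named above are related by MSE = variance + bias². The following minimal Monte Carlo sketch illustrates this with a hypothetical, deliberately shrunk sample-mean estimator (0.8 times the sample mean). The data model and the constants are assumptions made for illustration only.

```python
import random

def estimator_stats(estimates, theta):
    """Empirical bias, variance and mean-squared error of repeated estimates of theta."""
    m = len(estimates)
    mean_est = sum(estimates) / m
    bias = mean_est - theta
    variance = sum((e - mean_est) ** 2 for e in estimates) / m
    mse = sum((e - theta) ** 2 for e in estimates) / m
    return bias, variance, mse

random.seed(0)
theta = 3.0  # true parameter of the hypothetical data-generating distribution
estimates = []
for _ in range(2000):
    sample = [random.gauss(theta, 1.0) for _ in range(10)]
    # Shrunk sample mean: lower variance than the plain mean, but biased toward 0.
    estimates.append(0.8 * sum(sample) / len(sample))

bias, variance, mse = estimator_stats(estimates, theta)
```

With these empirical definitions the decomposition holds exactly, up to floating-point rounding.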

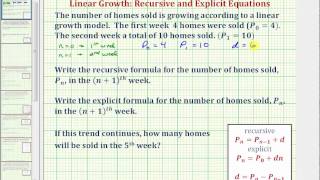

Ex: Write a Recursive and Explicit Equation to Model Linear Growth

This video provides a basic example of how to determine recursive and explicit equations that model linear growth, given P_0 and P_1. http://mathispower4u.com

From playlist Linear, Exponential, and Logistic Growth: Recursive/Explicit
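
Given P_0 and P_1, the common difference is d = P_1 - P_0. The recursive form is P_n = P_{n-1} + d and the explicit form is P_n = P_0 + n·d. Below is a minimal sketch that checks the two agree; the function names and the sample values 200 and 215 are hypothetical.

```python
def linear_growth_recursive(p0, p1, n):
    """P_n via the recursive rule P_n = P_{n-1} + d, with d = P_1 - P_0."""
    d = p1 - p0  # common difference
    p = p0
    for _ in range(n):
        p = p + d
    return p

def linear_growth_explicit(p0, p1, n):
    """P_n via the explicit formula P_n = P_0 + n * d."""
    d = p1 - p0
    return p0 + n * d
```

For example, starting from P_0 = 200 and P_1 = 215, both functions give P_10 = 350.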

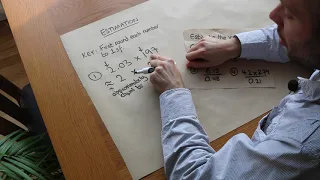

"Estimate the result of a calculation by first rounding each number."

From playlist Number: Rounding & Estimation
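
Here is a minimal sketch of the estimation strategy, assuming each number is rounded to one significant figure before calculating. That is a common classroom convention, but the video may round differently. The helper names are hypothetical.

```python
import math

def round_to_one_sig_fig(x):
    """Round x to one significant figure (assumed rounding rule)."""
    if x == 0:
        return 0.0
    # Number of decimal places to keep; negative means round to tens, hundreds, ...
    digits = -int(math.floor(math.log10(abs(x))))
    return round(x, digits)

def estimate_product(a, b):
    """Estimate a * b by rounding each factor first."""
    return round_to_one_sig_fig(a) * round_to_one_sig_fig(b)
```

For instance, 48.7 × 21.3 is estimated as 50 × 20 = 1000, close to the exact value of about 1037.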

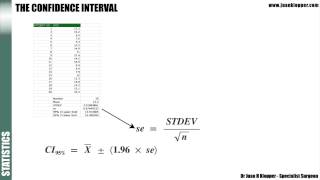

Statistics 5_1 Confidence Intervals

In this lecture I explain the meaning of a confidence interval and look at the equation used to calculate it.

From playlist Medical Statistics
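
A common form of the equation for a confidence interval for a mean is x̄ ± z · s/√n. The sketch below assumes this normal-approximation form with z = 1.96 for 95%, which is not necessarily the exact equation used in the lecture. For small samples, a t critical value is usually more appropriate. The data are hypothetical.

```python
import math
import statistics

def mean_confidence_interval(xs, z=1.96):
    """Normal-approximation CI for the mean: mean +/- z * s / sqrt(n)."""
    n = len(xs)
    mean = statistics.mean(xs)
    se = statistics.stdev(xs) / math.sqrt(n)  # standard error of the mean
    return mean - z * se, mean + z * se

data = [5.1, 4.9, 5.3, 5.0, 4.7, 5.2, 5.0, 4.8]  # hypothetical measurements
lo, hi = mean_confidence_interval(data)
```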

Random and systematic error explained: from fizzics.org

In scientific experiments and measurement it is almost never possible to be absolutely accurate. We tend to make two types of error: random and systematic. The video uses examples to explain the difference and the first steps you might take to reduce them. Notes to support

From playlist Units of measurement
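
One first step against random error is to average repeated readings. Averaging does nothing about a systematic offset, however. The minimal simulation below illustrates this; the true value, offset and noise level are hypothetical.

```python
import random
import statistics

def simulate_readings(true_value, offset, noise_sd, n, seed=1):
    """Hypothetical readings: constant systematic offset plus Gaussian random error."""
    rng = random.Random(seed)
    return [true_value + offset + rng.gauss(0.0, noise_sd) for _ in range(n)]

true_value = 9.81  # hypothetical quantity being measured
readings = simulate_readings(true_value, offset=0.05, noise_sd=0.2, n=10000)
avg = statistics.mean(readings)
```

The average settles near 9.86 rather than 9.81. Averaging has shrunk the random scatter, but the 0.05 systematic offset survives and has to be found by other means, such as calibration.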

Introduces notation and formulas for exponential growth models, with solutions to guided problems.

From playlist Discrete Math
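
A common notation for exponential growth with growth rate r per step is the recursive rule P_n = (1 + r) P_{n-1} and the explicit formula P_n = P_0 (1 + r)^n. This notation is an assumption, and the video's symbols may differ. The minimal sketch below checks that the two forms agree.

```python
def exp_growth_recursive(p0, r, n):
    """P_n via the recursive rule P_n = (1 + r) * P_{n-1}."""
    p = p0
    for _ in range(n):
        p = (1 + r) * p
    return p

def exp_growth_explicit(p0, r, n):
    """P_n via the explicit formula P_n = P_0 * (1 + r) ** n."""
    return p0 * (1 + r) ** n
```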

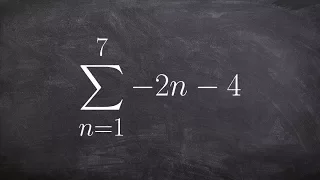

Learn to use summation notation for an arithmetic series to find the sum

👉 Learn how to find the partial sum of an arithmetic series. A series is the sum of the terms of a sequence. An arithmetic series is the sum of the terms of an arithmetic sequence. The formula for the sum of n terms of an arithmetic sequence is given by S_n = n/2 [2a + (n - 1)d], where a is

From playlist Series
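
The closed form above can be checked against the summation Σ_{k=1}^{n} [a + (k - 1)d]. In the sketch below, a is taken to be the first term and d the common difference; that reading is an assumption, since the description is cut off. The sketch restricts itself to integers, because for integer a and d the product n[2a + (n - 1)d] is always even, so integer division is exact.

```python
def arithmetic_partial_sum(a, d, n):
    """S_n = n/2 * [2a + (n - 1)d] for integer first term a and difference d."""
    return n * (2 * a + (n - 1) * d) // 2

def arithmetic_sum_direct(a, d, n):
    """Direct evaluation of the summation sum_{k=1}^{n} (a + (k - 1) d)."""
    return sum(a + (k - 1) * d for k in range(1, n + 1))
```

For example, with a = 3, d = 4 and n = 10 (terms 3, 7, ..., 39), both functions return 210.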

Sequential Stopping for Parallel Monte Carlo by Peter W Glynn

PROGRAM: ADVANCES IN APPLIED PROBABILITY ORGANIZERS: Vivek Borkar, Sandeep Juneja, Kavita Ramanan, Devavrat Shah, and Piyush Srivastava DATE & TIME: 05 August 2019 to 17 August 2019 VENUE: Ramanujan Lecture Hall, ICTS Bangalore Applied probability has seen a revolutionary growth in resear

From playlist Advances in Applied Probability 2019

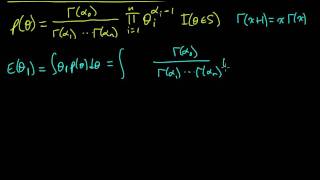

(ML 7.7.A2) Expectation of a Dirichlet random variable

How to compute the expected value of a Dirichlet distributed random variable.

From playlist Machine Learning
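
One standard route to the result is to integrate against the density and use Γ(z + 1) = zΓ(z); the video may proceed differently. Here X ~ Dir(α) on the probability simplex, α_0 = Σ_j α_j, and e_i is the i-th unit vector.

```latex
p(x) = \frac{1}{B(\alpha)} \prod_{j=1}^{K} x_j^{\alpha_j - 1},
\qquad
B(\alpha) = \frac{\prod_{j=1}^{K} \Gamma(\alpha_j)}{\Gamma(\alpha_0)}

\mathbb{E}[X_i]
= \frac{1}{B(\alpha)} \int x_i^{\alpha_i} \prod_{j \neq i} x_j^{\alpha_j - 1} \, dx
= \frac{B(\alpha + e_i)}{B(\alpha)}
= \frac{\Gamma(\alpha_i + 1)\,\Gamma(\alpha_0)}{\Gamma(\alpha_i)\,\Gamma(\alpha_0 + 1)}
= \frac{\alpha_i}{\alpha_0}
```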

05-5 Inverse modeling : sequential importance re-sampling

Introduction to sequential importance resampling

From playlist QUSS GS 260
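
Below is a minimal sketch of sequential importance resampling in its common "bootstrap filter" form. The model is an assumption chosen for illustration: a 1-D random-walk state observed with Gaussian noise. The course's inverse-modeling setting may differ. Each step propagates particles, weights them by the observation likelihood, and resamples.

```python
import math
import random

def sir_filter(observations, n_particles=2000, q_sd=0.1, r_sd=0.1, seed=0):
    """Bootstrap SIR filter for an assumed model:
    x_t = x_{t-1} + N(0, q_sd^2),  y_t = x_t + N(0, r_sd^2)."""
    rng = random.Random(seed)
    # Initial particles from an assumed N(0, 1) prior.
    particles = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    estimates = []
    for y in observations:
        # 1. Propagate each particle through the state model (the proposal).
        particles = [x + rng.gauss(0.0, q_sd) for x in particles]
        # 2. Importance weights from the Gaussian observation likelihood p(y | x).
        log_w = [-0.5 * ((y - x) / r_sd) ** 2 for x in particles]
        max_lw = max(log_w)  # subtract the max for numerical stability
        weights = [math.exp(lw - max_lw) for lw in log_w]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted posterior-mean estimate, computed before resampling.
        estimates.append(sum(w * x for w, x in zip(weights, particles)))
        # 3. Multinomial resampling: high-weight particles are duplicated.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates

est = sir_filter([1.0] * 20)  # hypothetical constant observations
```

Resampling at every step is the simplest choice. A frequent refinement is to resample only when the effective sample size drops below a threshold.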

99 Data Analytics: Second Midterm Walk Through

I walk through my 2nd midterm from the Fall 2019.

From playlist Data Analytics and Geostatistics

NIPS 2011 Big Learning - Algorithms, Systems, & Tools Workshop: Fast Cross-Validation...

Big Learning Workshop: Algorithms, Systems, and Tools for Learning at Scale at NIPS 2011 Invited Talk: Fast Cross-Validation via Sequential Analysis by Tammo Kruger Abstract: With the increasing size of today's data sets, finding the right parameter configuration via cross-validatio

From playlist NIPS 2011 Big Learning: Algorithms, System & Tools Workshop

Lecture on the motivation for simulation vs. estimation and development of the sequential Gaussian simulation approach.

From playlist Data Analytics and Geostatistics

Felix Kwok: Analysis of a Three-Level Variant of Parareal

In this talk, we present a three-level variant of the parareal algorithm that uses three propagators at the fine, intermediate and coarsest levels. The fine and intermediate levels can both be run in parallel, only the coarsest level propagation is completely sequential. We interpret our a

From playlist Jean-Morlet Chair - Gander/Hubert

Aaditya Ramdas: Universal inference using the split likelihood ratio test

CIRM VIRTUAL EVENT Recorded during the meeting "Mathematical Methods of Modern Statistics 2" on June 05, 2020 by the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians

From playlist Virtual Conference

David O. Siegmund: Change: Detection, Estimation, Segmentation

CIRM VIRTUAL EVENT Recorded during the meeting "Mathematical Methods of Modern Statistics 2" on June 08, 2020 by the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians

From playlist Virtual Conference

Stanford CS330: Multi-Task and Meta-Learning, 2019 | Lecture 6 - Reinforcement Learning Primer

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Assistant Professor Chelsea Finn, Stanford University http://cs330.stanford.edu/ 0:00 Introduction 0:46 Logistics 2:31 Why Reinforcement Learning? 3:37 The Pla

From playlist Stanford CS330: Deep Multi-Task and Meta Learning

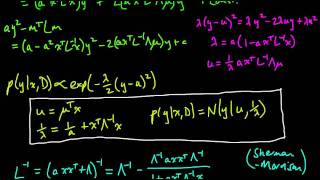

(ML 10.7) Predictive distribution for linear regression (part 4)

How to compute the (posterior) predictive distribution for a new point, under a Bayesian model for linear regression.

From playlist Machine Learning
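
Below is a minimal sketch of the posterior predictive computation. It assumes a zero-mean isotropic Gaussian prior N(0, α⁻¹I) on the weights and Gaussian noise with known precision β; the video may use a more general prior. Under these assumptions the posterior is N(m_N, S_N) with S_N⁻¹ = αI + βΦᵀΦ and m_N = βS_NΦᵀy. At a new feature vector φ, the predictive distribution is Gaussian with mean m_Nᵀφ and variance 1/β + φᵀS_Nφ. The data and the feature map φ(x) = [1, x] are hypothetical.

```python
import numpy as np

def posterior(Phi, y, alpha, beta):
    """Weight posterior N(m_N, S_N) for prior N(0, alpha^{-1} I) and noise precision beta."""
    d = Phi.shape[1]
    S_N = np.linalg.inv(alpha * np.eye(d) + beta * Phi.T @ Phi)
    m_N = beta * S_N @ Phi.T @ y
    return m_N, S_N

def predictive(phi_new, m_N, S_N, beta):
    """Posterior predictive mean and variance at a new feature vector."""
    mean = phi_new @ m_N
    # Noise variance plus the uncertainty carried over from the weights.
    var = 1.0 / beta + phi_new @ S_N @ phi_new
    return mean, var

# Hypothetical noise-free data from y = 1 + 2x, with features phi(x) = [1, x].
x = np.array([0.0, 0.5, 1.0, 1.5, 2.0])
y = 1.0 + 2.0 * x
Phi = np.column_stack([np.ones_like(x), x])
m_N, S_N = posterior(Phi, y, alpha=1e-3, beta=100.0)
mean, var = predictive(np.array([1.0, 3.0]), m_N, S_N, beta=100.0)
```

The predictive variance always exceeds the noise variance 1/β, and it grows as the new point moves away from the training inputs.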