(ML 19.2) Existence of Gaussian processes

Statement of the theorem on existence of Gaussian processes, and an explanation of what it is saying.

From playlist Machine Learning

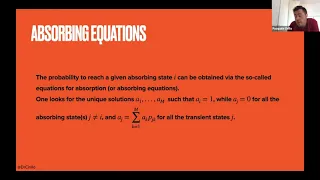

Brain Teasers: 10. Winning in a Markov chain

In this exercise we use the absorbing equations for Markov chains to solve a simple game between two players. The Zoom connection was not very stable, so there are a few audio problems. Sorry.

From playlist Brain Teasers and Quant Interviews

(ML 11.4) Choosing a decision rule - Bayesian and frequentist

Choosing a decision rule, from Bayesian and frequentist perspectives. To make the problem well-defined from the frequentist perspective, some additional guiding principle is introduced such as unbiasedness, minimax, or invariance.

From playlist Machine Learning

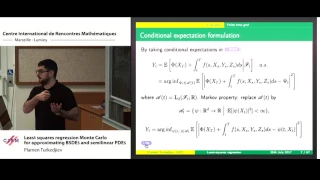

Plamen Turkedjiev: Least squares regression Monte Carlo for approximating BSDEs and semilinear PDEs

Abstract: In this lecture, we shall discuss the key steps involved in the use of least squares regression for approximating the solution to BSDEs. This includes how to obtain explicit error estimates, and how these error estimates can be used to tune the parameters of the numerical scheme

From playlist Probability and Statistics

Quentin Berthet: Learning with differentiable perturbed optimizers

Machine learning pipelines often rely on optimization procedures to make discrete decisions (e.g. sorting, picking closest neighbors, finding shortest paths or optimal matchings). Although these discrete decisions are easily computed in a forward manner, they cannot be used to modify model

From playlist Control Theory and Optimization

Intro to Markov Chains & Transition Diagrams

Markov chains, or Markov processes, are an extremely powerful tool from probability and statistics. They represent a statistical process that happens over and over again, where we try to predict the future state of a system. A Markov process is one where the probability of the future ONLY de

From playlist Discrete Math (Full Course: Sets, Logic, Proofs, Probability, Graph Theory, etc)

We propose a sparse regression method capable of discovering the governing partial differential equation(s) of a given system by time series measurements in the spatial domain. The regression framework relies on sparsity promoting techniques to select the nonlinear and partial derivative

From playlist Research Abstracts from Brunton Lab

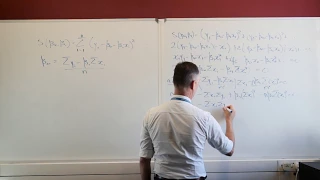

Least squares method for simple linear regression

In this video I show you how to derive the equations for the coefficients of the simple linear regression line. The least squares method for the simple linear regression line requires the calculation of the intercept and the slope, commonly written as beta-sub-zero and beta-sub-one. Deriv

From playlist Machine learning

Olfactory Search and Navigation (Lecture 2) by Antonio Celani

PROGRAM: ICTP-ICTS WINTER SCHOOL ON QUANTITATIVE SYSTEMS BIOLOGY (ONLINE). ORGANIZERS: Vijaykumar Krishnamurthy (ICTS-TIFR, India), Venkatesh N. Murthy (Harvard University, USA), Sharad Ramanathan (Harvard University, USA), Sanjay Sane (NCBS-TIFR, India) and Vatsala Thirumalai (NCBS-TIFR, I

From playlist ICTP-ICTS Winter School on Quantitative Systems Biology (ONLINE)

Why Use Kalman Filters? | Understanding Kalman Filters, Part 1

Download our Kalman Filter Virtual Lab to practice linear and extended Kalman filter design of a pendulum system with interactive exercises and animations in MATLAB and Simulink: https://bit.ly/3g5AwyS Discover common uses of Kalman filters by walking through some examples. A Kalman filte

From playlist Understanding Kalman Filters

Reinforcement Learning 1: Introduction to Reinforcement Learning

Hado van Hasselt, Research Scientist, shares an introduction to reinforcement learning as part of the Advanced Deep Learning & Reinforcement Learning Lectures.

From playlist DeepMind x UCL | Reinforcement Learning Course 2018

Data Science - Part XIII - Hidden Markov Models

For downloadable versions of these lectures, please go to the following links: http://www.slideshare.net/DerekKane/presentations https://github.com/DerekKane/YouTube-Tutorials This lecture provides an overview of Markov processes and Hidden Markov Models. We will start off by going throug

From playlist Data Science

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 1 - Introduction - Emma Brunskill

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Professor Emma Brunskill, Stanford University https://stanford.io/3eJW8yT Professor Emma Brunskill Assistant Professor, Computer Science Stanford AI for Human

From playlist Stanford CS234: Reinforcement Learning | Winter 2019

From playlist Contributed talks One World Symposium 2020

Reinforcement Learning 3: Markov Decision Processes and Dynamic Programming

Hado van Hasselt, Research Scientist, discusses Markov decision processes and dynamic programming as part of the Advanced Deep Learning & Reinforcement Learning Lectures.

From playlist DeepMind x UCL | Reinforcement Learning Course 2018

Sam Coogan, Georgia Tech: Probabilistic guarantees for autonomous systems. For complex autonomous systems subject to stochastic dynamics, providing absolute assurances of performance may not be possible. Instead, probabilistic guarantees that assure, for example, desirable performance with

From playlist Fall 2019 Kolchin Seminar in Differential Algebra

Stanford CS330: Multi-Task and Meta-Learning, 2019 | Lecture 6 - Reinforcement Learning Primer

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Assistant Professor Chelsea Finn, Stanford University http://cs330.stanford.edu/ 0:00 Introduction 0:46 Logistics 2:31 Why Reinforcement Learning? 3:37 The Pla

From playlist Stanford CS330: Deep Multi-Task and Meta Learning

Victor Panaretos: The extrapolation of correlation

CONFERENCE Recording during the thematic meeting "Adaptive and High-Dimensional Spatio-Temporal Methods for Forecasting" on September 29, 2022 at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent. Find this video and other talks

From playlist Analysis and its Applications

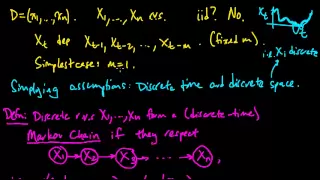

(ML 14.2) Markov chains (discrete-time) (part 1)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

Lecture 02: Markov Decision Processes

Second lecture of the course "Reinforcement Learning" at Paderborn University during the summer term 2020. Source files are available here: https://github.com/upb-lea/reinforcement_learning_course_materials

From playlist Reinforcement Learning Course: Lectures (Summer 2020)