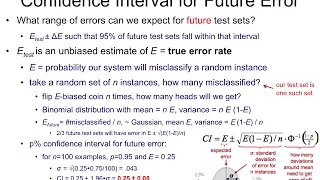

Gradient Boost Part 1 (of 4): Regression Main Ideas

Gradient Boost is one of the most popular Machine Learning algorithms in use. And get this, it's not that complicated! This video is the first part in a series that walks through it one step at a time. This video focuses on the main ideas behind using Gradient Boost to predict a continuous

From playlist StatQuest

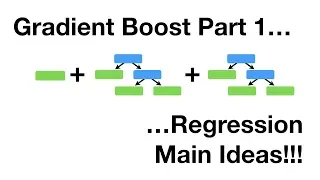

Lesson: Calculate a Confidence Interval for a Population Proportion

This lesson explains how to calculate a confidence interval for a population proportion.

From playlist Confidence Intervals

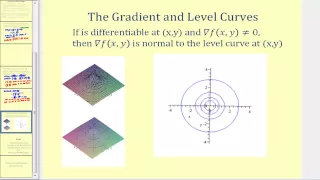

This video explains what information the gradient provides about a given function. http://mathispower4u.wordpress.com/

From playlist Functions of Several Variables - Calculus

Download the free PDF http://tinyurl.com/EngMathYT A basic tutorial on the gradient field of a function. We show how to compute the gradient; its geometric significance; and how it is used when computing the directional derivative. The gradient is a basic property of vector calculus. NOT

From playlist Engineering Mathematics

Gradient (1 of 3: Developing the formula)

More resources available at www.misterwootube.com

From playlist Further Linear Relationships

Rohitash Chandra - BERT-based language models for US Elections, COVID-19, and analysis

Dr Rohitash Chandra (UNSW Sydney) presents "BERT-based language models for US Elections, COVID-19, and analysis of the translations of the Bhagavad Gita", 26 November 2021.

From playlist Statistics Across Campuses

Cecilia Clementi: "Learning molecular models from simulation and experimental data"

Machine Learning for Physics and the Physics of Learning 2019 Workshop II: Interpretable Learning in Physical Sciences "Learning molecular models from simulation and experimental data" Cecilia Clementi - Rice University Institute for Pure and Applied Mathematics, UCLA October 14, 2019 F

From playlist Machine Learning for Physics and the Physics of Learning 2019

Example on gradient identities for functions of two variables.

From playlist Engineering Mathematics

Bao Wang: "Momentum in Stochastic Gradient Descent and Deep Neural Nets"

Deep Learning and Medical Applications 2020 "Momentum in Stochastic Gradient Descent and Deep Neural Nets" Bao Wang - University of California, Los Angeles (UCLA), Mathematics Abstract: Stochastic gradient-based optimization algorithms play perhaps the most important role in modern machi

From playlist Deep Learning and Medical Applications 2020

Rafael Gómez-Bombarelli: "Coarse graining autoencoders and evolutionary learning of atomistic..."

Machine Learning for Physics and the Physics of Learning 2019 Workshop I: From Passive to Active: Generative and Reinforcement Learning with Physics "Coarse graining autoencoders and evolutionary learning of atomistic potentials" Rafael Gomez-Bombarelli, Massachusetts Institute of Technol

From playlist Machine Learning for Physics and the Physics of Learning 2019

Finding The Gradient Of A Straight Line | Graphs | Maths | FuseSchool

The gradient of a line tells us how steep the line is. Lines going in this / direction have positive gradients, and lines going in this \ direction have negative gradients. The gradient can be found by finding how much the line goes up (the rise) and dividing it by how much the line goe

From playlist MATHS

Lightning Talks - Chi Jin, Lin Yang, Alec Koppel, Karan Singh, Nataly Brukhim

Workshop on New Directions in Reinforcement Learning and Control Topic: Lightning Talks Speaker: Chi Jin, Lin Yang, Alec Koppel, Karan Singh, Nataly Brukhim Date: November 8, 2019 For more videos, please visit http://video.ias.edu

From playlist Mathematics

DeepMind x UCL RL Lecture Series - Exploration & Control [2/13]

Research Scientist Hado van Hasselt looks at why it's important for learning agents to balance exploring and exploiting acquired knowledge at the same time. Slides: https://dpmd.ai/explorationcontrol Full video lecture series: https://dpmd.ai/DeepMindxUCL21

From playlist Learning resources

Efficient Exploration in Bayesian Optimization – Optimism and Beyond by Andreas Krause

A Google TechTalk, presented by Andreas Krause, 2021/06/07 ABSTRACT: A central challenge in Bayesian Optimization and related tasks is the exploration—exploitation dilemma: Selecting inputs that are informative about the unknown function, while focusing exploration where we expect high ret

From playlist Google BayesOpt Speaker Series 2021-2022

Emilie Chouzenoux - Deep Unfolding of a Proximal Interior Point Method for Image Restoration

Variational methods have started to be widely applied to ill-posed inverse problems since they have the ability to embed prior knowledge about the solution. However, the level of performance of these methods significantly depends on a set of parameters, which can be estimated through compu

From playlist Journée statistique & informatique pour la science des données à Paris-Saclay 2021

What is Gradient, and Gradient Given Two Points

"Find the gradient of a line given two points."

From playlist Algebra: Straight Line Graphs