Light and Optics 1_3 Introduction to Reflection

Reflection from plane and spherical mirrors.

From playlist Physics - Light and Optics

Physics 11.1.2a - Image Formation

Image formation in a plane mirror

From playlist Physics - Reflection and Refraction

Alessandro Chiodo - Towards a global mirror symmetry (Part 1)

Mirror symmetry is a phenomenon which inspired fundamental progress in a wide range of disciplines in mathematics and physics in the last twenty years; we will review here a number of results going from the enumerative geometry of curves to homological algebra. These advances justify the i

From playlist École d’été 2011 - Modules de courbes et théorie de Gromov-Witten

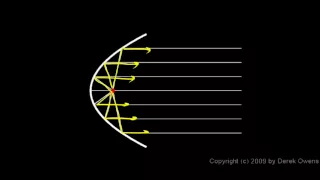

Physics 11.1.3a - Spherical and Parabolic Mirrors

Spherical and Parabolic mirrors. From the Physics course by Derek Owens. The distance learning class is available at www.derekowens.com

From playlist Physics - Reflection and Refraction

Light and Optics 1_2 Introduction to Reflection

Reflection from plane and spherical mirrors.

From playlist Physics - Light and Optics

RealD 3D glasses filter with a linear polarising filter

This is for a post on my blog: http://blog.stevemould.com

From playlist Everything in chronological order

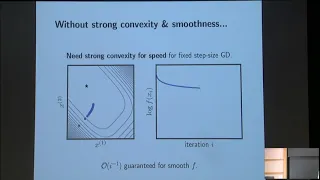

Relative Smoothness: Progress, Mysteries, and Hamiltonians - Christopher Maddison

Short talks by postdoctoral members. Topic: Relative Smoothness: Progress, Mysteries, and Hamiltonians. Speaker: Christopher Maddison. Affiliation: Member, School of Mathematics. Date: October 1, 2019. For more videos, please visit http://video.ias.edu

From playlist Mathematics

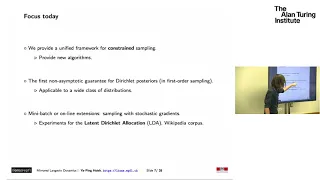

Mirrored Langevin Dynamics - Ya-Ping Hsieh

The workshop aims at bringing together researchers working on the theoretical foundations of learning, with an emphasis on methods at the intersection of statistics, probability and optimization. We consider the posterior sampling problem in constrained distributions, such as the Latent

From playlist The Interplay between Statistics and Optimization in Learning

Accelerated stochastic gradient ..first-order optimization - Zeyuan Allen-Zhu

Topic: Accelerated stochastic gradient descent via new model for first-order optimization. Speaker: Zeyuan Allen-Zhu, Member, School of Mathematics. More videos on http://video.ias.edu

From playlist Mathematics

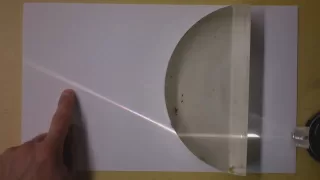

Geometric Optics Intuition with Mirrors and Lenses Concave Convex Diverging Converging | Doc Physics

This video has it all. Seriously, all of it. But no math, and no ray tracing. But maybe you just want to understand. Who can blame you? Just look at the light in that thumbnail bending away from the normal to the surface as it exits the plastic. All of geometric optics with lenses st

From playlist Demos 25. The Reflection of Light: Mirrors

Sebastian Pokutta: A distributed accelerated algorithm for the 1-fair packing problem

The proportional fair resource allocation problem is a major problem studied in flow control of networks, operations research, and economic theory, where it has found numerous applications. This problem, defined as the constrained maximization of ∑_i log x_i, is known as the packing propor

From playlist Workshop: Continuous approaches to discrete optimization
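A minimal worked example of the 1-fair objective mentioned above, restricted to the simplest case of a single packing constraint. It assumes the usual packing form (maximize ∑_i log x_i subject to ∑_i a_i x_i ≤ 1, x ≥ 0, with every a_i > 0), and the function names are hypothetical. With one constraint the optimum has a closed form, x_i = 1/(n a_i). The general problem with many constraints (a nonnegative matrix A) needs an iterative method, such as the distributed algorithm the talk discusses, which this sketch does not implement.

```python
import math
import random

def one_fair_single_constraint(a):
    """1-fair (proportionally fair) allocation for ONE packing constraint.

    Assumed problem: max sum_i log(x_i)  s.t.  sum_i a_i * x_i <= 1,  x >= 0,
    with all a_i > 0. Stationarity gives 1/x_i = lam * a_i; making the
    constraint tight gives lam = n, hence x_i = 1 / (n * a_i).
    """
    n = len(a)
    return [1.0 / (n * ai) for ai in a]

def objective(x):
    # The 1-fair objective: sum of logarithms of the allocations.
    return sum(math.log(xi) for xi in x)

def random_feasible(a, rng):
    # Draw a random positive point and scale it onto the constraint boundary.
    y = [rng.uniform(0.01, 1.0) for _ in a]
    scale = sum(ai * yi for ai, yi in zip(a, y))
    return [yi / scale for yi in y]

a = [1.0, 2.0, 4.0]
x_star = one_fair_single_constraint(a)
rng = random.Random(0)
# Sanity check: no sampled feasible point should beat the closed-form optimum.
best_other = max(objective(random_feasible(a, rng)) for _ in range(1000))
print(x_star, objective(x_star) >= best_other)
```

The random sampling is only a sanity check; because the objective is strictly concave, the closed-form point is the unique maximizer on this feasible set.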

Babak Hassibi: "Implicit and Explicit Regularization in Deep Neural Networks"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021. Workshop IV: Efficient Tensor Representations for Learning and Computational Complexity. "Implicit and Explicit Regularization in Deep Neural Networks". Babak Hassibi, California Institute of Technology. Abstra

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Francisco Criado: The dual 1-fair packing problem and applications to linear programming

Proportional fairness (also known as 1-fairness) is a fairness scheme for the resource allocation problem introduced by Nash in 1950. Under this scheme, an allocation for two players is unfair if a small transfer of resources between two players results in a proportional increase in the ut

From playlist Workshop: Tropical geometry and the geometry of linear programming
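A small sketch of the two-player transfer test described above. Because the abstract is cut off, this assumes linear utilities u_i = x_i and reads "proportional increase" as the sum of relative changes Δu_i/u_i. Both are illustrative assumptions, and the function names are hypothetical.

```python
def proportional_gain(x, delta, giver, receiver):
    """Sum of relative utility changes when `delta` units move from player
    `giver` to player `receiver`, assuming linear utilities u_i = x_i."""
    # Receiver gains delta/x_receiver (relative); giver loses delta/x_giver.
    return delta / x[receiver] - delta / x[giver]

def is_proportionally_fair_2p(x, delta=1e-6):
    # Unfair if a small transfer in either direction yields a positive
    # net proportional gain.
    return (proportional_gain(x, delta, 0, 1) <= 0 and
            proportional_gain(x, delta, 1, 0) <= 0)

print(is_proportionally_fair_2p([0.5, 0.5]))
print(is_proportionally_fair_2p([0.7, 0.3]))
```

Under these assumptions only the equal split passes the test, which agrees with maximizing log x_1 + log x_2 over a single shared resource.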

Light and Optics 1_6 Introduction to Reflection

Reflection. Solved problems.

From playlist Physics - Light and Optics

Set Chasing, with an application to online shortest path - Sébastien Bubeck

Computer Science/Discrete Mathematics Seminar I. Topic: Set Chasing, with an application to online shortest path. Speaker: Sébastien Bubeck. Affiliation: Microsoft Research Lab - Redmond. Date: April 18, 2022. Since the late 19th century, mathematicians have realized the importance and genera

From playlist Mathematics

From playlist Contributed talks One World Symposium 2020

Edouard Pauwels: Curiosities and counterexamples in smooth convex optimization

Conference recording during the thematic meeting "Learning and Optimization in Luminy" on October 4, 2022, at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent. Find this video and other talks given by worldwide mathematicians on C

From playlist Control Theory and Optimization

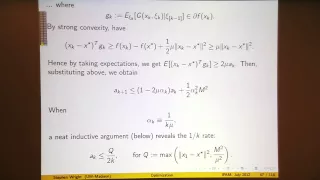

Stephen Wright: "Some Relevant Topics in Optimization, Pt. 2"

Graduate Summer School 2012: Deep Learning, Feature Learning. "Some Relevant Topics in Optimization, Pt. 2". Stephen Wright, University of Wisconsin-Madison. Institute for Pure and Applied Mathematics, UCLA, July 16, 2012. For more information: https://www.ipam.ucla.edu/programs/summer-school

From playlist GSS2012: Deep Learning, Feature Learning