Linear network coding

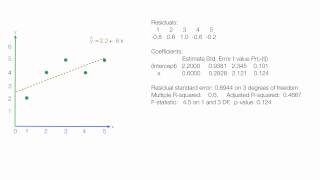

In computer networking, linear network coding is a scheme in which intermediate nodes transmit data from source nodes to sink nodes by means of linear combinations. It may be used to improve a network's throughput, efficiency, and scalability, as well as to reduce its vulnerability to attacks and eavesdropping. Rather than simply relaying the packets they receive, the nodes of a network take several packets and combine them for transmission. This process may be used to attain the maximum possible information flow in a network. It has been proven that, in theory, linear coding is enough to achieve the upper bound in multicast problems with one source. However, linear coding is not sufficient in general, even for more general versions of linearity such as convolutional coding. Finding optimal coding solutions for general network problems with arbitrary demands remains an open problem. (Wikipedia).
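
As an illustration of nodes combining packets, the following minimal Python sketch simulates the well-known butterfly network, which is a standard textbook example and is not described in the text above. It assumes unit-capacity links, one source multicasting two equal-length packets to two sinks, and coding over GF(2), where addition is bitwise XOR. The function names `xor_bytes` and `butterfly` are hypothetical and chosen only for this example.

```python
def xor_bytes(x, y):
    """Add two equal-length packets over GF(2), i.e. bitwise XOR."""
    if len(x) != len(y):
        raise ValueError("packets must have equal length")
    return bytes(p ^ q for p, q in zip(x, y))


def butterfly(a, b):
    """Simulate one round on the butterfly network (assumed topology).

    The source sends packet a down the left branch and packet b down the
    right branch. The shared middle link can carry only one packet per
    round, so the intermediate node forwards the linear combination
    a + b over GF(2) instead of choosing between a and b.
    Returns what each sink recovers as a pair (a, b).
    """
    coded = xor_bytes(a, b)  # packet sent over the bottleneck link

    # Left sink receives a directly plus the coded packet, and solves for b.
    left_sink = (a, xor_bytes(a, coded))

    # Right sink receives b directly plus the coded packet, and solves for a.
    right_sink = (xor_bytes(b, coded), b)

    return left_sink, right_sink


left, right = butterfly(b"net", b"cod")
print(left)   # (b'net', b'cod')
print(right)  # (b'net', b'cod')
```

Under these assumptions both sinks recover both packets in a single round. With plain forwarding, the bottleneck link could carry only one of the two packets, so one of the sinks would have to wait for a second round.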