Determine the Kernel of a Linear Transformation Given a Matrix (R3, x to 0)

This video explains how to determine the kernel of a linear transformation.

From playlist Kernel and Image of Linear Transformation

Find the Kernel of a Matrix Transformation (Give Direction Vector)

This video explains how to determine the direction vector of the line that represents the kernel of a matrix transformation.

From playlist Kernel and Image of Linear Transformation
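For the direction-vector video above: when a 2x3 matrix has rank 2, its kernel is a line through the origin in R3, and every kernel vector is orthogonal to both rows, so the cross product of the rows is one valid direction vector. This is a minimal sketch with a hypothetical matrix `A` (not taken from the video); `cross` and `mat_vec` are illustrative helpers. If the rows were parallel (rank 1), the cross product would be zero and the kernel would be a plane rather than a line.

```python
def cross(u, v):
    """Cross product of two 3-vectors."""
    return (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )

def mat_vec(A, x):
    """Multiply a matrix A (list of rows) by a vector x."""
    return tuple(sum(a * b for a, b in zip(row, x)) for row in A)

# Hypothetical 2x3 matrix of rank 2 (illustration only).
A = [(1, 2, -1),
     (0, 1, 3)]

d = cross(A[0], A[1])   # orthogonal to both rows, so A d = 0
print(d)                # (7, -3, 1)
print(mat_vec(A, d))    # (0, 0): d lies in the kernel
```

The kernel is then the line x = t(7, -3, 1) for real t.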

Concept Check: Describe the Kernel of a Linear Transformation (Reflection Across y-axis)

This video explains how to describe the kernel of a linear transformation that is a reflection across the y-axis.

From playlist Kernel and Image of Linear Transformation
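For the reflection concept check: a reflection across the y-axis sends (x, y) to (-x, y), so its standard matrix is diag(-1, 1). A nonzero determinant means Ax = 0 has only the trivial solution, so the kernel is {0}. The sketch below, assuming this standard matrix, computes the determinant and also brute-forces a small integer grid as a sanity check (the grid check illustrates the result; the determinant is what proves it).

```python
# Standard matrix of the reflection across the y-axis: (x, y) -> (-x, y).
R = [[-1, 0],
     [0, 1]]

def apply(M, v):
    """Multiply a 2x2 matrix by a 2-vector."""
    return tuple(sum(m * c for m, c in zip(row, v)) for row in M)

def det2(M):
    """Determinant of a 2x2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

print(apply(R, (3, 5)))  # (-3, 5)
print(det2(R))           # -1: nonzero, so only x = 0 maps to 0

# Sanity check on a small grid: only the origin is sent to the zero vector.
zeros = [(x, y) for x in range(-3, 4) for y in range(-3, 4)
         if apply(R, (x, y)) == (0, 0)]
print(zeros)             # [(0, 0)]
```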

Determine a Basis for the Kernel of a Matrix Transformation (3 by 4)

This video explains how to determine a basis for the kernel of a matrix transformation.

From playlist Kernel and Image of Linear Transformation
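For the 3 by 4 basis video: the usual procedure is to row reduce A to reduced row echelon form, mark the free variables, and build one kernel vector per free variable. This sketch follows that procedure with exact `fractions.Fraction` arithmetic; the matrix is a hypothetical example, not the one from the video, and `rref` / `kernel_basis` are illustrative helper names.

```python
from fractions import Fraction

def rref(A):
    """Return the reduced row echelon form of A and its pivot columns."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(M), len(M[0])
    pivots, r = [], 0
    for c in range(cols):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if p is None:
            continue                      # no pivot here: column c is free
        M[r], M[p] = M[p], M[r]
        piv = M[r][c]
        M[r] = [x / piv for x in M[r]]    # scale so the pivot entry is 1
        for i in range(rows):             # clear column c in every other row
            if i != r and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    return M, pivots

def kernel_basis(A):
    """One basis vector of {x : A x = 0} per free variable."""
    M, pivots = rref(A)
    n = len(A[0])
    basis = []
    for f in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[f] = Fraction(1)                # set this free variable to 1
        for row, p in enumerate(pivots):  # solve each pivot variable
            v[p] = -M[row][f]
        basis.append(v)
    return basis

# Hypothetical 3x4 matrix (illustration only).
A = [[1, 2, 0, 1],
     [2, 4, 1, 4],
     [3, 6, 1, 5]]
basis = kernel_basis(A)
print([[str(x) for x in v] for v in basis])
# [['-2', '1', '0', '0'], ['-1', '0', '-2', '1']]
```

Here columns 2 and 4 are free, so the kernel is two-dimensional.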

Introduction to the Kernel and Image of a Linear Transformation

This video introduces the kernel and the image of a linear transformation.

From playlist Kernel and Image of Linear Transformation
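The two objects from the introductory video can be stated compactly. Assuming, for this sketch, that $T:\mathbb{R}^n \to \mathbb{R}^m$ is given by $T(\mathbf{x}) = A\mathbf{x}$ for an $m \times n$ matrix $A$ (the video's notation may differ), the standard definitions and the rank-nullity theorem are:

```latex
\begin{aligned}
\ker(T) &= \{\, \mathbf{x} \in \mathbb{R}^n : A\mathbf{x} = \mathbf{0} \,\} \subseteq \mathbb{R}^n \\
\operatorname{im}(T) &= \{\, A\mathbf{x} : \mathbf{x} \in \mathbb{R}^n \,\} = \operatorname{col}(A) \subseteq \mathbb{R}^m \\
\dim \ker(T) + \dim \operatorname{im}(T) &= n
\end{aligned}
```

The kernel lives in the domain and the image in the codomain; the last line ties their dimensions to the number of columns of $A$.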

Lecture 6: Gauge-equivariant Mesh CNN - Pim de Haan

Video recording of the First Italian School on Geometric Deep Learning held in Pescara in July 2022. Slide: https://www.sci.unich.it/geodeep2022/slides/2022-07-27%20Mesh%20-%20First%20Italian%20GDL%20School.pdf

From playlist First Italian School on Geometric Deep Learning - Pescara 2022

Stanford CS229: Machine Learning | Summer 2019 | Lecture 23 - Course Recap and Wrap Up

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3B6WitS Anand Avati Computer Science, PhD To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

Concept Check: Describe the Kernel of a Linear Transformation (Projection onto y=x)

This video explains how to describe the kernel of a linear transformation that is a projection onto the line y = x.

From playlist Kernel and Image of Linear Transformation
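For the projection concept check: assuming the orthogonal projection onto y = x, the standard matrix is (1/2)[[1, 1], [1, 1]]. Every vector on the perpendicular line y = -x projects to the origin, so the kernel is span{(1, -1)}. The sketch below checks this with exact fractions and also confirms that projecting twice changes nothing.

```python
from fractions import Fraction

half = Fraction(1, 2)
# Orthogonal projection onto the line y = x (assumed standard matrix).
P = [[half, half],
     [half, half]]

def apply(M, v):
    """Multiply a 2x2 matrix by a 2-vector."""
    return tuple(sum(m * c for m, c in zip(row, v)) for row in M)

image_pt = apply(P, (3, 1))     # equals (2, 2): a point on y = x
kernel_pt = apply(P, (1, -1))   # equals (0, 0): (1, -1) spans the kernel
twice = apply(P, image_pt)      # equals image_pt: P is idempotent
```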

Deep Networks Are Kernel Machines (Paper Explained)

#deeplearning #kernels #neuralnetworks Full Title: Every Model Learned by Gradient Descent Is Approximately a Kernel Machine Deep Neural Networks are often said to discover useful representations of the data. However, this paper challenges this prevailing view and suggest that rather tha

From playlist Papers Explained

Support Vector Machines Part 3: The Radial (RBF) Kernel (Part 3 of 3)

Support Vector Machines use kernel functions to do all the hard work and this StatQuest dives deep into one of the most popular: The Radial (RBF) Kernel. We talk about the parameter values, how they calculate high-dimensional coordinates and then we'll figure out, step-by-step, how the Rad

From playlist Support Vector Machines
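For the RBF video: the radial basis function kernel is commonly written as K(a, b) = exp(-gamma * ||a - b||^2). It equals 1 for identical points and decays toward 0 as the points move apart, with gamma controlling how fast. This is a minimal sketch using that common parameterization; the video may instead use 1/(2 sigma^2) in place of gamma.

```python
import math

def rbf_kernel(a, b, gamma=1.0):
    """Radial basis function kernel: exp(-gamma * ||a - b||^2)."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * sq_dist)

print(rbf_kernel((1.0, 2.0), (1.0, 2.0)))     # 1.0 for identical points
print(rbf_kernel((0.0,), (2.0,), gamma=0.5))  # exp(-2), about 0.1353
```

Larger gamma makes the similarity fall off faster, so each training point influences a smaller neighborhood.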

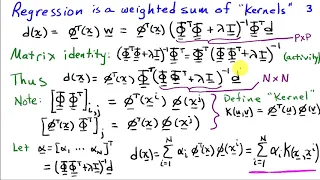

The Kernel Trick - THE MATH YOU SHOULD KNOW!

Some parametric methods, like polynomial regression and Support Vector Machines, stand out as being very versatile. This is due to a concept called "Kernelization". In this video, we are going to kernelize linear regression and show how kernels can be incorporated into other algorithms to solv

From playlist The Math You Should Know
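The kernelized linear regression described above can be sketched as kernel ridge regression: solve for dual coefficients alpha = (K + lam*I)^-1 y, where K is the matrix of kernel values between training points, then predict with f(x) = sum_i alpha_i k(x_i, x). The video's own derivation is not reproduced here; the ridge penalty `lam`, the degree-2 polynomial kernel, and the helper names are assumptions for illustration.

```python
def solve(A, b):
    """Solve A x = b with Gaussian elimination and partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            for j in range(c, n + 1):
                M[i][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def poly_kernel(a, b, degree=2):
    """Hypothetical choice of kernel: k(a, b) = (1 + a*b)^degree for scalars."""
    return (1.0 + a * b) ** degree

def fit(xs, ys, kernel, lam=1e-3):
    """Dual coefficients alpha = (K + lam*I)^-1 y."""
    n = len(xs)
    K = [[kernel(xs[i], xs[j]) + (lam if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    return solve(K, ys)

def predict(xs, alpha, kernel, x):
    """f(x) = sum_i alpha_i k(x_i, x): only kernel values, no explicit features."""
    return sum(a * kernel(xi, x) for a, xi in zip(alpha, xs))

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [x * x for x in xs]   # quadratic target a plain linear fit cannot capture
alpha = fit(xs, ys, poly_kernel)
print(round(predict(xs, alpha, poly_kernel, 1.5), 3))  # 2.25
```

The model stays linear in alpha, yet the kernel lets it fit a quadratic without ever building the feature vector (1, sqrt(2) x, x^2) explicitly.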

Efficient and effective calibration of spatio-temporal models: Dan Williamson & James Salter, Exeter

Uncertainty quantification (UQ) employs theoretical, numerical and computational tools to characterise uncertainty. It is increasingly becoming a relevant tool to gain a better understanding of physical systems and to make better decisions under uncertainty. Realistic physical systems are

From playlist Effective and efficient gaussian processes

Linear Transformers Are Secretly Fast Weight Memory Systems (Machine Learning Paper Explained)

#fastweights #deeplearning #transformers Transformers are dominating Deep Learning, but their quadratic memory and compute requirements make them expensive to train and hard to use. Many papers have attempted to linearize the core module: the attention mechanism, using kernels - for examp

From playlist Papers Explained
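The link in the linear-transformers entry above can be illustrated with unnormalized causal linear attention. Replacing softmax(q . k) with phi(q) . phi(k) lets the output be computed either as an explicit sum over past positions or as a "fast weight" matrix W that is written with outer products v phi(k)^T and read with phi(q). Assumptions in this sketch: phi(x) = elu(x) + 1 as the feature map, no normalization denominator, and toy 2-d vectors; the paper's own formulation and any update rules it proposes are not reproduced.

```python
import math

def phi(x):
    """Hypothetical positive feature map: elu(x) + 1, applied elementwise."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_sum(qs, ks, vs):
    """Unnormalized causal linear attention as an explicit sum over past positions."""
    outs = []
    for t, q in enumerate(qs):
        fq = phi(q)
        out = [0.0] * len(vs[0])
        for s in range(t + 1):
            score = dot(phi(ks[s]), fq)
            out = [o + score * v for o, v in zip(out, vs[s])]
        outs.append(out)
    return outs

def fast_weights(qs, ks, vs):
    """Same computation as a fast weight matrix W written with outer products."""
    d_v, d_k = len(vs[0]), len(phi(ks[0]))
    W = [[0.0] * d_k for _ in range(d_v)]
    outs = []
    for q, k, v in zip(qs, ks, vs):
        fk = phi(k)
        for i in range(d_v):            # write: W <- W + v phi(k)^T
            for j in range(d_k):
                W[i][j] += v[i] * fk[j]
        fq = phi(q)
        outs.append([dot(row, fq) for row in W])  # read: W phi(q)
    return outs

# Toy queries, keys, and values (illustration only).
qs = [[0.5, -1.0], [1.0, 0.2], [-0.3, 0.7]]
ks = [[1.0, 0.0], [-0.5, 0.5], [0.2, -0.2]]
vs = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]

a, b = attention_sum(qs, ks, vs), fast_weights(qs, ks, vs)
print(all(abs(x - y) < 1e-12 for ra, rb in zip(a, b) for x, y in zip(ra, rb)))  # True
```

The fast weight form keeps a fixed-size W instead of revisiting every past key, which is where the memory and compute savings over quadratic attention come from.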

14: Rate Models and Perceptrons - Intro to Neural Computation

MIT 9.40 Introduction to Neural Computation, Spring 2018 Instructor: Michale Fee View the complete course: https://ocw.mit.edu/9-40S18 YouTube Playlist: https://www.youtube.com/playlist?list=PLUl4u3cNGP61I4aI5T6OaFfRK2gihjiMm Explores a mathematically tractable model of neural networks, r

From playlist MIT 9.40 Introduction to Neural Computation, Spring 2018
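The MIT 9.40 entry above is truncated, so the lecture's exact model is not reproduced here. As an assumption, this sketch uses the classic perceptron: a binary threshold unit that outputs 1 when w . x + b > 0, trained with the perceptron learning rule w <- w + lr * (target - output) * x. It learns the logical AND function, which is linearly separable. The function names and the learning rate are illustrative.

```python
def perceptron_output(w, b, x):
    """Binary threshold unit: 1 if w . x + b > 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron learning rule: nudge weights by the error times the input."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in data:
            err = target - perceptron_output(w, b, x)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([perceptron_output(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

A rate model would replace the hard threshold with a graded firing-rate function of w . x; the threshold version is used here only because its learning rule is short.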

Structured prediction via implicit embeddings - Rudi - Workshop 3 - CEB T1 2019

Alessandro Rudi (INRIA) / 01.04.2019 Structured prediction via implicit embeddings. In this talk we analyze a regularization approach for structured prediction problems. We characterize a large class of loss functions that allows to naturally embed structured outputs in a linear space.

From playlist 2019 - T1 - The Mathematics of Imaging

For the latest information, please visit: http://www.wolfram.com Speakers: Sebastian Bodenstein & Giorgia Fortuna Wolfram developers and colleagues discussed the latest in innovative technologies for cloud computing, interactive deployment, mobile devices, and more.

From playlist Wolfram Technology Conference 2016

Linear Transformation: Which Vectors are in the Range of T and the Kernel of T?

This video explains how to determine whether a given vector is in the range (image) or the kernel of a linear transformation.

From playlist Kernel and Image of Linear Transformation
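For the range-and-kernel video: assuming T(x) = Ax, a domain vector x is in the kernel exactly when Ax = 0, and a codomain vector b is in the range exactly when Ax = b is consistent, i.e. appending b as an extra column does not raise the rank. This sketch checks both with exact fractions; the matrix and test vectors are hypothetical, and `rank`, `in_kernel`, and `in_range` are illustrative helper names.

```python
from fractions import Fraction

def rank(A):
    """Rank via forward elimination with exact fractions."""
    M = [[Fraction(x) for x in row] for row in A]
    r = 0
    for c in range(len(M[0])):
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r

def in_kernel(A, x):
    """x (in the domain) is in the kernel iff A x = 0."""
    return all(sum(a * xi for a, xi in zip(row, x)) == 0 for row in A)

def in_range(A, b):
    """b (in the codomain) is in the range iff rank([A | b]) == rank(A)."""
    augmented = [list(row) + [bi] for row, bi in zip(A, b)]
    return rank(augmented) == rank(A)

# Hypothetical rank-1 matrix: kernel = span{(2, -1)}, range = span{(1, 2)}.
A = [[1, 2],
     [2, 4]]
print(in_kernel(A, (2, -1)), in_kernel(A, (1, 1)))  # True False
print(in_range(A, (1, 2)), in_range(A, (1, 0)))     # True False
```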

Deep Learning and Computations of PDEs by Siddhartha Mishra

COLLOQUIUM DEEP LEARNING AND COMPUTATIONS OF PDES SPEAKER: Siddhartha Mishra (Professor of Applied Mathematics, ETH Zürich, Switzerland) DATE & TIME: Mon, 27 June 2022, 15:30 to 17:00 VENUE: Online Colloquium ABSTRACT Partial Differential Equations (PDEs) are ubiquitous in the scien

From playlist ICTS Colloquia

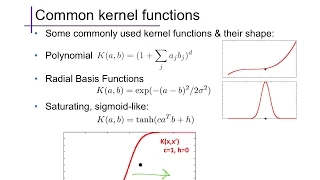

Support Vector Machines (3): Kernels

The kernel trick in the SVM dual; examples of kernels; kernel form for least-squares regression

From playlist cs273a
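The kernel trick mentioned in the cs273a entry rests on the fact that the SVM dual only needs inner products between inputs, and a kernel can supply those without building features explicitly. As a small check, assuming 2-d inputs, the polynomial kernel k(x, z) = (x . z)^2 equals an ordinary dot product under the feature map phi(x) = (x1^2, sqrt(2) x1 x2, x2^2). The input vectors are arbitrary examples.

```python
import math

def phi(x):
    """Explicit feature map whose dot product matches (x . z)^2 in 2-d."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly2_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

x, z = (1.0, 2.0), (3.0, -1.0)
explicit = sum(a * b for a, b in zip(phi(x), phi(z)))
print(poly2_kernel(x, z))                          # 1.0, since (3 - 2)^2 = 1
print(abs(explicit - poly2_kernel(x, z)) < 1e-12)  # True
```

The kernel side costs one 2-d dot product, while the explicit side builds 3-d features; for higher degrees and dimensions the gap grows quickly, which is why the dual is written in kernel form.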