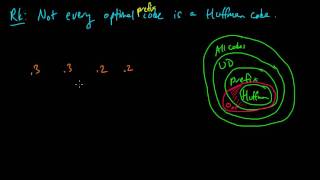

(IC 4.13) Not every optimal prefix code is Huffman

Every Huffman code is an optimal prefix code, but the converse is not true. This is illustrated with an example. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding
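
To make the claim concrete, here is a small example of our own, not necessarily the one used in the video. For p = (0.3, 0.3, 0.2, 0.2), every Huffman code makes the two 0.2 symbols siblings, because the first merge always joins the two least probable symbols. A code that instead pairs each 0.3 symbol with a 0.2 symbol has the same expected length (2 bits), so it is also optimal, but it cannot come from Huffman's algorithm under any 0/1 labeling. The helper names `huffman_lengths`, `is_prefix_free` and `are_siblings` are hypothetical.

```python
import heapq
import math
from itertools import count

def huffman_lengths(probs):
    """Codeword lengths from binary Huffman coding (ties broken by insertion order)."""
    tie = count()
    # Heap entries: (subtree probability, tiebreaker, symbol indices in the subtree)
    heap = [(p, next(tie), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        for i in s1 + s2:
            lengths[i] += 1  # merging pushes every leaf below one level deeper
        heapq.heappush(heap, (p1 + p2, next(tie), s1 + s2))
    return lengths

def is_prefix_free(code):
    """True if no codeword is a proper prefix of another."""
    return not any(a != b and b.startswith(a) for a in code for b in code)

def are_siblings(w1, w2):
    """Two codewords are siblings if they differ only in their last bit."""
    return len(w1) == len(w2) and w1[:-1] == w2[:-1] and w1 != w2

probs = [0.3, 0.3, 0.2, 0.2]
huffman_avg = sum(p * l for p, l in zip(probs, huffman_lengths(probs)))

# Hypothetical alternative code: symbol 0 (0.3) is paired with symbol 2 (0.2),
# and symbol 1 (0.3) is paired with symbol 3 (0.2).
alt = ["00", "10", "01", "11"]
alt_avg = sum(p * len(w) for p, w in zip(probs, alt))

print(is_prefix_free(alt), math.isclose(alt_avg, huffman_avg))  # True True
print(are_siblings(alt[2], alt[3]))  # False: the two least likely symbols are not siblings
```

Since `alt` matches the Huffman average length, it is optimal; since its two least probable symbols are not siblings, it is not a Huffman code.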

(IC 4.9) Optimality of Huffman codes (part 4) - extension and contraction

We prove that Huffman codes are optimal. In part 4, we define the H-extension and H-contraction. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding
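
The precise definitions are given in the video; the sketch below assumes they correspond to the usual reduction step in Huffman's proof. Under that assumption, H-contraction replaces two sibling codewords w0 and w1 (belonging to the two least probable symbols) by their parent w, and H-extension splits w back into w0 and w1. The function names and the convention that the merged symbol is listed last are our own. Because extension gives each of the two merged symbols one extra bit, the expected length grows by exactly their combined probability.

```python
import math

def h_extend(code):
    """Hypothetical H-extension: split the last codeword w into w+'0' and w+'1'."""
    w = code[-1]
    return code[:-1] + [w + "0", w + "1"]

def h_contract(code):
    """Hypothetical H-contraction: replace the last two (sibling) codewords by their parent."""
    a, b = code[-2], code[-1]
    if not (len(a) == len(b) and a[:-1] == b[:-1] and a != b):
        raise ValueError("the last two codewords must be siblings")
    return code[:-2] + [a[:-1]]

def avg_length(probs, code):
    """Expected codeword length under the given probabilities."""
    return sum(p * len(w) for p, w in zip(probs, code))

probs = [0.4, 0.3, 0.2, 0.1]
reduced = [0.4, 0.3, 0.2 + 0.1]   # the last two symbols merged into one
small_code = ["0", "10", "11"]    # a prefix code for the reduced distribution

big_code = h_extend(small_code)   # ['0', '10', '110', '111']
assert h_contract(big_code) == small_code
# Extension adds one bit to each of the two merged symbols:
assert math.isclose(avg_length(probs, big_code),
                    avg_length(reduced, small_code) + 0.2 + 0.1)
```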

(IC 4.7) Optimality of Huffman codes (part 2) - weak siblings

We prove that Huffman codes are optimal. In part 2, we show that there exists an optimal prefix code with a pair of "weak siblings". A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

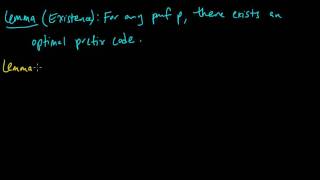

(IC 4.12) Optimality of Huffman codes (part 7) - existence

We prove that Huffman codes are optimal. In part 7, we show that there exists an optimal code for any given p. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding
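
One way to see why an optimal code exists, assuming at least two symbols and every p_i > 0, is to show that only finitely many candidates need to be compared. By the Kraft inequality, a prefix code with lengths l_1, ..., l_n exists exactly when the sum of 2^(-l_i) is at most 1. A fixed-length code has average length L0 = ceil(log2 n), and any code with l_i > L0 / p_i already averages more than L0, so each l_i can be bounded and the minimum is attained. The brute-force search below is our illustration of this idea, not necessarily the argument in the video; the function name is hypothetical.

```python
import itertools
import math
from fractions import Fraction

def optimal_lengths_brute_force(probs):
    """Exhaustively find a length vector minimizing expected length (assumes all p > 0, n >= 2)."""
    n = len(probs)
    fixed = math.ceil(math.log2(n))  # a fixed-length code achieves this average
    # A length above fixed / p would make the average exceed the fixed-length code.
    ranges = [range(1, math.floor(fixed / p) + 1) for p in probs]
    best, best_avg = None, math.inf
    for lengths in itertools.product(*ranges):
        # Kraft inequality: a prefix code with these lengths exists iff sum 2^-l <= 1
        if sum(Fraction(1, 2 ** l) for l in lengths) <= 1:
            avg = sum(p * l for p, l in zip(probs, lengths))
            if avg < best_avg:
                best, best_avg = lengths, avg
    return best, best_avg

best, best_avg = optimal_lengths_brute_force([0.4, 0.3, 0.2, 0.1])
print(best)  # (1, 2, 3, 3)
```

The search is exponential in n, so it only serves to illustrate existence; Huffman's algorithm finds an optimum efficiently.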

And we finally got Huffman encoding working in Julia. A few minor changes still need to be made. -- Watch live at https://www.twitch.tv/simuleios

From playlist Huffman forest
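
The stream's code is written in Julia. As a language-neutral illustration, here is a minimal Python sketch of the same technique, not the stream's implementation: count symbol frequencies, repeatedly merge the two lightest subtrees from a min-heap, and prepend a 0 or 1 to every codeword in the merged subtrees. The names `huffman_code` and `encode` are hypothetical.

```python
import heapq
from collections import Counter
from itertools import count

def huffman_code(text):
    """Build a binary Huffman code {symbol: bitstring} from symbol frequencies in text."""
    freq = Counter(text)
    if not freq:
        return {}
    tie = count()  # tiebreaker so the heap never has to compare dicts
    heap = [(f, next(tie), {sym: ""}) for sym, f in freq.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        # A single distinct symbol still needs a one-bit codeword.
        return {sym: "0" for sym in heap[0][2]}
    while len(heap) > 1:
        f1, _, t1 = heapq.heappop(heap)  # lightest subtree goes under bit 0
        f2, _, t2 = heapq.heappop(heap)  # second lightest goes under bit 1
        merged = {s: "0" + w for s, w in t1.items()}
        merged.update({s: "1" + w for s, w in t2.items()})
        heapq.heappush(heap, (f1 + f2, next(tie), merged))
    return heap[0][2]

def encode(text, table):
    """Concatenate the codewords of each symbol in text."""
    return "".join(table[c] for c in text)

table = huffman_code("abracadabra")
bits = encode("abracadabra", table)
print(len(bits))  # 23
```

The counter in each heap entry is a common `heapq` idiom: it breaks ties between equal frequencies deterministically and keeps Python from trying to compare the dictionaries.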

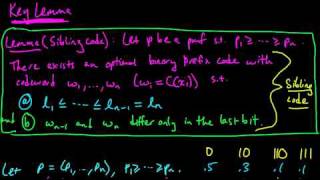

(IC 4.8) Optimality of Huffman codes (part 3) - sibling codes

We prove that Huffman codes are optimal. In part 3, we show that there exists a "sibling code". A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

Well, we floundered around today (as usual). Got the decoder working, though. That was nice. -- Watch live at https://www.twitch.tv/simuleios

From playlist Huffman forest
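
Because no codeword is a prefix of another, a decoder can read bits left to right and emit a symbol as soon as the bits read so far match a codeword. A minimal Python sketch of that idea (not the stream's Julia decoder), using a hard-coded table that is one possible Huffman code for "abracadabra"; `decode` is a hypothetical name.

```python
def decode(bits, table):
    """Decode a bitstring with a prefix code {symbol: codeword}."""
    inverse = {w: s for s, w in table.items()}
    out, current = [], ""
    for b in bits:
        current += b
        # Prefix-freeness means the first match is the only possible match.
        if current in inverse:
            out.append(inverse[current])
            current = ""
    if current:
        raise ValueError("input ends in the middle of a codeword")
    return "".join(out)

# One possible Huffman code for "abracadabra" (hard-coded for illustration).
table = {"a": "0", "b": "110", "r": "111", "c": "100", "d": "101"}
bits = "".join(table[c] for c in "abracadabra")
print(decode(bits, table))  # abracadabra
```

Production decoders usually walk a tree or use lookup tables instead of growing a string, but the prefix-matching logic is the same.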

Alright, finally putting Huffman Encoding into the Algorithm Archive! -- Watch live at https://www.twitch.tv/simuleios

From playlist Huffman forest

Alright, didn't get as far as I wanted, but we got something up and running. -- Watch live at https://www.twitch.tv/simuleios

From playlist Huffman forest

Everything You Need to Know About JPEG - Episode 4 Part 1: Huffman Decoding

In this series you will learn all of the in-depth details of the complex and sophisticated JPEG image compression format. In this episode, we learn all about Huffman codes, how to create a Huffman coding tree, and how to create Huffman codes based on a JPEG Huffman table. Jump into the pla

From playlist Fourier
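
A JPEG Huffman table (DHT segment) stores 16 counts, the number of codes of each length from 1 to 16 bits, followed by the symbol values in code order. The codes themselves are then rebuilt canonically: a counter is incremented within a length and shifted left by one bit when moving to the next length, which is how we understand the code-generation procedure in Annex C of ITU-T T.81. A minimal Python sketch; the function name is hypothetical, and the example counts are what we believe is the standard luminance DC table from Annex K.

```python
def jpeg_canonical_codes(bits, huffval):
    """Rebuild codewords from JPEG-style code-length counts (bits[0] = number of 1-bit codes)."""
    codes = {}
    code = 0
    k = 0  # index into huffval, which lists symbols in code order
    for length in range(1, 17):
        for _ in range(bits[length - 1]):
            codes[huffval[k]] = format(code, f"0{length}b")
            code += 1
            k += 1
        code <<= 1  # moving to the next length appends a 0 bit to the running code
    return codes

bits = [0, 1, 5, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
huffval = list(range(12))
codes = jpeg_canonical_codes(bits, huffval)
print(codes[0], codes[1], codes[6])  # 00 010 1110
```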

Live CEOing Ep 253: Reviewing Entries in the Wolfram Function Repository

Watch Stephen Wolfram and teams of developers in a live, working, language design meeting. This episode is about reviewing entries in the Wolfram Function Repository.

From playlist Behind the Scenes in Real-Life Software Design

(IC 4.6) Optimality of Huffman codes (part 1) - inverse ordering

We prove that Huffman codes are optimal. In part 1, we show that the lengths are inversely ordered with the probabilities. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding
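
The ordering follows from an exchange argument: if symbols i and j swap codeword lengths, the expected length changes by (p_i - p_j)(l_j - l_i), which is negative whenever p_i > p_j but l_i > l_j. So in an optimal code, a more probable symbol never has a strictly longer codeword. A small Python sketch of that computation (function names hypothetical); reassigning the same multiset of lengths leaves the Kraft sum unchanged, so the result is still realizable as a prefix code.

```python
def swap_change(probs, lengths, i, j):
    """Change in expected length if symbols i and j exchange codeword lengths.

    Equals (p_i - p_j) * (l_j - l_i): negative whenever p_i > p_j but l_i > l_j.
    """
    return (probs[i] - probs[j]) * (lengths[j] - lengths[i])

def inverse_order(probs, lengths):
    """Reassign the same lengths so that more probable symbols get shorter ones."""
    by_prob = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    result = [0] * len(probs)
    for i, l in zip(by_prob, sorted(lengths)):
        result[i] = l
    return result

probs = [0.1, 0.4, 0.2, 0.3]
lengths = [1, 3, 3, 2]                       # violates the ordering for symbols 0 and 1
print(swap_change(probs, lengths, 1, 0) < 0)  # True: swapping helps
print(inverse_order(probs, lengths))          # [3, 1, 3, 2]
```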

Week 8: Wednesday - CS50 2007 - Harvard University

Huffman coding. Preprocessing. Compiling. Assembling. Linking. CPUs. Ant-8.

From playlist CS50 Lectures 2007

Order, Entropy, Information, and Compression (Lecture 2) by Dov Levine

Program: Entropy, Information and Order in Soft Matter. Organizers: Bulbul Chakraborty, Pinaki Chaudhuri, Chandan Dasgupta, Marjolein Dijkstra, Smarajit Karmakar, Vijaykumar Krishnamurthy, Jorge Kurchan, Madan Rao, Srikanth Sastry and Francesco Sciortino. Date: 27 August 2018 to 02 Novemb

From playlist Entropy, Information and Order in Soft Matter

Woo! Spent the whole day learning about priority queues in Julia! -- Watch live at https://www.twitch.tv/simuleios

From playlist Huffman forest

Making some Huffman diagrams for my next video! -- Watch live at https://www.twitch.tv/simuleios

From playlist Huffman forest

Order, Entropy, Information, and Compression (Lecture 1) by Dov Levine

Program: Entropy, Information and Order in Soft Matter. Organizers: Bulbul Chakraborty, Pinaki Chaudhuri, Chandan Dasgupta, Marjolein Dijkstra, Smarajit Karmakar, Vijaykumar Krishnamurthy, Jorge Kurchan, Madan Rao, Srikanth Sastry and Francesco Sciortino. Date: 27 August 2018 to 02 Novemb

From playlist Entropy, Information and Order in Soft Matter

(IC 4.10) Optimality of Huffman codes (part 5) - extension lemma

We prove that Huffman codes are optimal. In part 5, we show that the H-extension of an optimal code is optimal. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

(IC 4.11) Optimality of Huffman codes (part 6) - induction

We prove that Huffman codes are optimal. In part 6, we complete the proof by induction. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding
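
The induction mirrors a recursive formulation of Huffman's algorithm: merge the two least probable symbols (contraction), solve the smaller problem (the induction hypothesis), then split the merged symbol's codeword (extension). A Python sketch of that recursion, in our own formulation with a hypothetical function name, assuming at least two symbols:

```python
from fractions import Fraction

def huffman_lengths_recursive(probs):
    """Huffman codeword lengths via the reduction used in the induction (needs len(probs) >= 2)."""
    n = len(probs)
    if n == 2:
        return [1, 1]  # base case: codewords "0" and "1"
    order = sorted(range(n), key=lambda i: probs[i])
    a, b = order[0], order[1]  # the two least probable symbols
    rest = [i for i in range(n) if i not in (a, b)]
    # Contraction: replace symbols a and b by one merged symbol, placed last.
    reduced = [probs[i] for i in rest] + [probs[a] + probs[b]]
    reduced_lengths = huffman_lengths_recursive(reduced)  # solve the smaller problem
    lengths = [0] * n
    for k, i in enumerate(rest):
        lengths[i] = reduced_lengths[k]
    # Extension: a and b become children of the merged symbol's codeword.
    lengths[a] = lengths[b] = reduced_lengths[-1] + 1
    return lengths

lengths = huffman_lengths_recursive([0.4, 0.3, 0.2, 0.1])
print(lengths)  # [1, 2, 3, 3]
print(sum(Fraction(1, 2 ** l) for l in lengths))  # 1
```

Each recursive call corresponds to one induction step: if the reduced code is optimal, the extension lemma says the extended code is optimal too.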