How to find the line that best fits a set of points, including when you want to weigh some points less than others. Regression line. Least-squares regression. Note: There's a typo at the end of the video: you also have to premultiply b by your matrix with 1/2. Check out my Orthogonality playlist:

From playlist Orthogonality
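
As a rough illustration of weighing some points less than others, here is a minimal Python sketch of a weighted least-squares line fit. It is not taken from the video; the function name `weighted_line_fit` and the sample data are assumptions made up for this example. It minimizes the weighted sum of squared vertical residuals.

```python
def weighted_line_fit(xs, ys, weights):
    """Return (intercept, slope) minimizing sum(w * (y - intercept - slope * x) ** 2).

    Hypothetical helper for illustration; weights must be non-negative.
    """
    if not (len(xs) == len(ys) == len(weights)):
        raise ValueError("xs, ys and weights must have the same length")
    total_w = sum(weights)
    if total_w <= 0:
        raise ValueError("weights must sum to a positive number")

    # Weighted means of x and y.
    x_mean = sum(w * x for w, x in zip(weights, xs)) / total_w
    y_mean = sum(w * y for w, y in zip(weights, ys)) / total_w

    # Weighted covariance-like and variance-like sums.
    s_xy = sum(w * (x - x_mean) * (y - y_mean) for w, x, y in zip(weights, xs, ys))
    s_xx = sum(w * (x - x_mean) ** 2 for w, x in zip(weights, xs))
    if s_xx == 0:
        raise ValueError("the weighted x values are all identical")

    slope = s_xy / s_xx
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Made-up data: three points on y = 2x + 1 plus one outlier at x = 3.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 20.0]

equal_fit = weighted_line_fit(xs, ys, [1.0, 1.0, 1.0, 1.0])
downweighted_fit = weighted_line_fit(xs, ys, [1.0, 1.0, 1.0, 0.0])
```

With equal weights the outlier pulls the line steeply upward. Giving the outlier weight zero recovers intercept 1 and slope 2 from the remaining three points.
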

How to Make Predictions in Regression Analysis

From playlist Statistics

Least squares method for simple linear regression

In this video I show you how to derive the equations for the coefficients of the simple linear regression line. The least squares method for the simple linear regression line, requires the calculation of the intercept and the slope, commonly written as beta-sub-zero and beta-sub-one. Deriv

From playlist Machine learning
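
For reference, the standard textbook derivation of the two coefficients can be sketched as follows. This is the usual least-squares argument and not necessarily the exact sequence of steps used in the video; the symbols $S$, $\bar{x}$ and $\bar{y}$ are notation assumed here.

$$
S(\beta_0, \beta_1) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \beta_1 x_i \right)^2
$$

Setting both partial derivatives to zero gives the normal equations:

$$
\frac{\partial S}{\partial \beta_0} = -2 \sum_{i=1}^{n} \left( y_i - \beta_0 - \beta_1 x_i \right) = 0,
\qquad
\frac{\partial S}{\partial \beta_1} = -2 \sum_{i=1}^{n} x_i \left( y_i - \beta_0 - \beta_1 x_i \right) = 0
$$

Solving them, with $\bar{x}$ and $\bar{y}$ the sample means:

$$
\beta_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
\beta_0 = \bar{y} - \beta_1 \bar{x}
$$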

[Machine Learning] Linear Regression using Least Square Error, Gradient Descent

A short tutorial on linear regression. It explains linear regression with least-squares error, gradient descent, the cost function, and the objective function. All of my machine learning YouTube videos: https://www.youtube.com/playlist?list=PLVNY1HnUlO26x597OgAN8TCgGTiE-38D6

From playlist Machine Learning

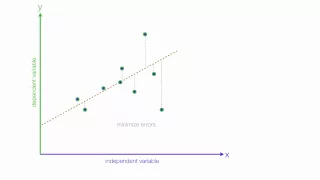

An Introduction to Linear Regression Analysis

Tutorial introducing the idea of linear regression analysis and the least square method. Typically used in a statistics class. Playlist on Linear Regression http://www.youtube.com/course?list=ECF596A4043DBEAE9C Like us on: http://www.facebook.com/PartyMoreStudyLess Created by David Lon

From playlist Linear Regression.

what is linear and non linear in machine learning, deep learning

What is linear and non-linear in machine learning and deep learning? You will have a clear understanding after watching this video. All of my machine learning YouTube videos: https://www.youtube.com/playlist?list=PLVNY1HnUlO26x597OgAN8TCgGTiE-38D6

From playlist Machine Learning

Kalman filtering - Lakshmivarahan

PROGRAM: Data Assimilation Research Program Venue: Centre for Applicable Mathematics-TIFR and Indian Institute of Science Dates: 04 - 23 July, 2011 DESCRIPTION: Data assimilation (DA) is a powerful and versatile method for combining observational data of a system with its dynamical mod

From playlist Data Assimilation Research Program

Deep Learning Lecture 2.4 - Statistical Estimator Theory

Deep Learning Lecture - Estimator Theory 3: - Statistical Estimator Theory - Bias, Variance and Noise - Results for Linear Least Square Regression

From playlist Deep Learning Lecture

Linear regression is used to model the relationship between pairs of numerical data points. We use it to find and quantify a linear association between variables.

From playlist Learning medical statistics with python and Jupyter notebooks

Average Treatment Effects: Confounding

Professor Stefan Wager on confounding and regression adjustments. Comparison of regression adjustments done via OLS versus generic machine learning.

From playlist Machine Learning & Causal Inference: A Short Course

How to calculate linear regression using least square method

An example of how to calculate a linear regression line using least squares. A step-by-step tutorial showing how to develop a linear regression equation. Use of colors and animations. Like us on: http://www.facebook.com/PartyMoreStudyLess Related Videos Playlist on Regression http://www.y

From playlist Linear Regression.

Applied Machine Learning: Secret Sauce

Professor Jann Spiess shares the secret sauce of applied machine learning.

From playlist Machine Learning & Causal Inference: A Short Course

Simple Linear Regression (Part C)

Regression Analysis by Dr. Soumen Maity, Department of Mathematics, IIT Kharagpur. For more details on NPTEL visit http://nptel.ac.in

From playlist IIT Kharagpur: Regression Analysis | CosmoLearning.org Mathematics

Multiple Linear Regression (Part D)

Regression Analysis by Dr. Soumen Maity, Department of Mathematics, IIT Kharagpur. For more details on NPTEL visit http://nptel.ac.in

From playlist IIT Kharagpur: Regression Analysis | CosmoLearning.org Mathematics

Cross Validation in Scikit Learn

This is the big one. We go over cross validation and other techniques to split your data. VERY IMPORTANT. We talk about cross validated scoring and prediction and then we talk about scikit learn cross validation iterators: K-fold, stratified fold, grouped data, and time series split. Asso

From playlist A Bit of Data Science and Scikit Learn
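
To make the K-fold idea concrete, here is a minimal hand-rolled sketch in plain Python. It is an illustration only, not scikit-learn's implementation and not code from the video; the function name `k_fold_indices` is an assumption made up for this example. It splits sample indices into consecutive folds and uses each fold once as the test set.

```python
def k_fold_indices(n_samples, n_folds):
    """Yield (train_indices, test_indices) pairs for consecutive, unshuffled folds.

    Hypothetical helper for illustration. When n_samples is not divisible by
    n_folds, the first few folds get one extra sample each.
    """
    if not 2 <= n_folds <= n_samples:
        raise ValueError("need 2 <= n_folds <= n_samples")

    base_size, extra = divmod(n_samples, n_folds)
    start = 0
    for fold in range(n_folds):
        size = base_size + (1 if fold < extra else 0)
        stop = start + size
        test_indices = list(range(start, stop))
        # Everything outside the current test fold is used for training.
        train_indices = list(range(0, start)) + list(range(stop, n_samples))
        yield train_indices, test_indices
        start = stop


# Made-up example: 5 samples split into 2 folds.
folds = list(k_fold_indices(5, 2))
```

Each sample lands in exactly one test fold, so every sample is used for evaluation once and for training in the other folds. Stratified, grouped, and time-series splits change how the folds are chosen, which this sketch does not attempt.
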

Lecture 9A : Overview of ways to improve generalization

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 9A : Overview of ways to improve generalization

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Lecture 9.1 — Overview of ways to improve generalization [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

Determining if equations are linear - Free Math Videos - Online Tutor

👉 Learn how to determine if an equation is a linear equation. A linear equation is an equation whose highest exponent on its variable(s) is 1. The variables do not have negative or fractional exponents, or any exponent other than one. Variables must not be in the denominator of any rational term and c

From playlist Write Linear Equations